11 Using RDF Graph Server and Query UI

This chapter contains the following sections:

- Introduction to RDF Graph Server and Query UI

  The RDF Graph Server and Query UI allows you to run SPARQL queries and perform advanced RDF graph data management operations using a REST API and an Oracle JET based query UI.

- RDF Graph Server and Query UI Concepts

  Learn the key concepts for using the RDF Graph Server and Query UI.

- Oracle RDF Graph Query UI

  The Oracle RDF Graph Query UI is an Oracle JET based client that can be used to manage RDF objects from different data sources, and to perform SPARQL queries and updates.


11.1 Introduction to RDF Graph Server and Query UI

The RDF Graph Server and Query UI allows you to run SPARQL queries and perform advanced RDF graph data management operations using a REST API and an Oracle JET based query UI.

The RDF Graph Server and Query UI consists of RDF RESTful services and a Java EE client application called RDF Graph Query UI. This client serves as an administrative console for Oracle RDF and can be deployed to a Java EE container.

The RDF Graph Server and RDF RESTful services can be used to create a SPARQL endpoint for RDF graphs in Oracle Database.

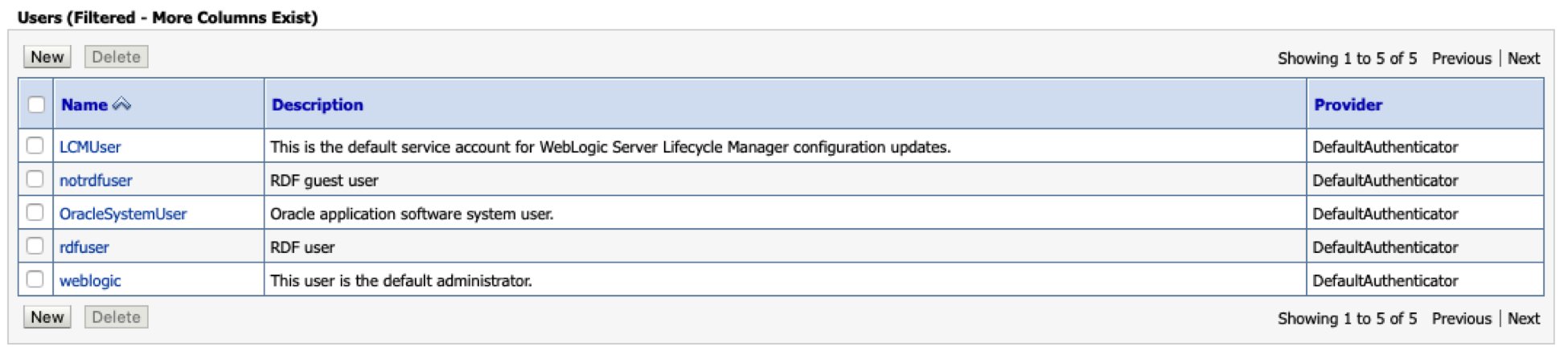

The following figure shows the RDF Graph Server and Query UI architecture:

Figure 11-1 RDF Graph Server and Query UI

The salient features of the RDF Graph Query UI are as follows:

- Uses RDF RESTful services to communicate with RDF data stores, which can be an Oracle RDF data source or an external RDF data source.
- Allows you to perform CRUD operations on various RDF objects, such as private networks, models, rule bases, entailments, network indexes, and data types, for Oracle data sources.
- Allows you to execute SPARQL queries and update RDF data.
- Provides a graph view of SPARQL query results.
- Uses Oracle JET for user application web pages.


11.2 RDF Graph Server and Query UI Concepts

Learn the key concepts for using the RDF Graph Server and Query UI.

- Data Sources

  Data sources are repositories of RDF objects.

- RDF Datasets

  Each RDF data source contains metadata information that describes the available RDF objects.

- REST Services

  An RDF REST API allows communication between the client and backend RDF data stores.


11.2.1 Data Sources

Data sources are repositories of RDF objects.

A data source can refer to an Oracle database, or to an external RDF service that can be accessed through an endpoint URL, such as DBpedia or Apache Jena Fuseki. A data source is defined by generic as well as specific parameters. Some of the generic parameters are name, type, and description. Specific parameters are JDBC properties (for database data sources) and the endpoint base URL (for external data sources).

11.2.1.1 Oracle Data Sources

Oracle data sources are defined using JDBC connections. Two types of Oracle JDBC data sources can be defined:

- A container JDBC data source that is defined inside the application server (WebLogic, Tomcat, or others)
- An Oracle wallet data source that contains the files needed to make the database connection

The parameters that define an Oracle database data source include:

- name: a generic name of the data source.
- type: the data source type. For databases, the type must be ‘DATABASE’.
- description (optional): a generic description of the data source.
- properties: specific mapping parameters with values for data source properties:
  - For a container data source, JNDI name (jndiName): the Java Naming and Directory Interface (JNDI) name.
  - For a wallet data source, wallet service (walletService): a string describing the wallet service. For a cloud wallet, it is usually an alias name stored in the tnsnames.ora file; for a simple wallet, it contains the host, port, and service name information.

The following example shows the JSON representation of a container data source:

    {
        "name": "rdfuser_ds_ct",
        "type": "DATABASE",
        "description": "Database Container connection",
        "properties": {
            "jndiName": "jdbc/RDFUSER193c"
        }
    }

The following example shows the JSON representation of a wallet data source:

    {
        "name": "rdfuser_ds_wallet",
        "type": "DATABASE",
        "description": "Database wallet connection",
        "properties": {
            "walletService": "db202002041627_medium"
        }
    }
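If you prepare data source definitions in a script rather than by hand, a small helper keeps the required keys consistent. The following Python sketch builds the two Oracle data source shapes shown above. The function names are hypothetical, and the sketch only produces the JSON. How the definition is submitted to the server is not covered here.

```python
import json
from typing import Optional


def _database_datasource(name: str, properties: dict,
                         description: Optional[str]) -> dict:
    # Hypothetical helper: generic parameters first, then the specific properties.
    ds = {"name": name, "type": "DATABASE"}
    if description:
        ds["description"] = description  # description is optional
    ds["properties"] = properties
    return ds


def container_datasource(name: str, jndi_name: str,
                         description: Optional[str] = None) -> dict:
    """Container data source: refers to a JDBC data source by its JNDI name."""
    return _database_datasource(name, {"jndiName": jndi_name}, description)


def wallet_datasource(name: str, wallet_service: str,
                      description: Optional[str] = None) -> dict:
    """Wallet data source: wallet_service is a tnsnames.ora alias (cloud wallet)
    or a host:port/serviceName string (simple wallet)."""
    return _database_datasource(name, {"walletService": wallet_service}, description)
```

For example, `json.dumps(container_datasource("rdfuser_ds_ct", "jdbc/RDFUSER193c", "Database Container connection"), indent=4)` prints the container example above.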


11.2.1.2 Endpoint URL Data Sources

External RDF data sources are defined using an endpoint URL. In general, each RDF store has a generic URL that accepts SPARQL queries and SPARQL updates. Depending on the RDF store service, it may also provide a capabilities request to retrieve the available datasets.

Table 11-1 External Data source Parameters

| Parameter | Description |
|---|---|
| name | A generic name of the data source. |
| type | The type of the data source. For external data sources, the type must be ‘ENDPOINT’. |
| description | A generic description of the data source. |
| properties | Specific mapping parameters with values for data source properties, such as baseUrl, queryUrl, updateUrl, provider, and capabilities, as shown in the following examples. |

The following example shows the JSON representation of a DBpedia external data source:

    {
        "name": "dbpedia",
        "type": "ENDPOINT",
        "description": "Dbpedia RDF data - Dbpedia.org",
        "properties": {
            "baseUrl": "http://dbpedia.org/sparql",
            "provider": "Dbpedia"
        }
    }

The following example shows the JSON representation of an Apache Jena Fuseki external data source. The ${DATASET} expression is a parameter that is replaced at run time with the Fuseki dataset name:

    {
        "name": "Fuseki",
        "type": "ENDPOINT",
        "description": "Jena Fuseki server",
        "properties": {
            "queryUrl": "http://localhost:8080/fuseki/${DATASET}/query",
            "baseUrl": "http://localhost:8080/fuseki",
            "capabilities": {
                "getUrl": "http://localhost:8080/fuseki/$/server",
                "datasetsParam": "datasets",
                "datasetNameParam": "ds.name"
            },
            "provider": "Apache",
            "updateUrl": "http://localhost:8080/fuseki/${DATASET}/update"
        }
    }
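The following Python sketch illustrates how the URL properties and the ${DATASET} placeholder fit together. It picks queryUrl or updateUrl, falls back to baseUrl when that property is not defined (the behavior described for the Query URL and Update URL parameters of endpoint data sources), and substitutes the dataset name. The function name is hypothetical, and this is not the server's actual implementation.

```python
from typing import Optional

DATASET_PLACEHOLDER = "${DATASET}"


def resolve_endpoint(properties: dict, kind: str, dataset: Optional[str] = None) -> str:
    """Return the URL for a 'query' or 'update' request to an endpoint data source.

    Hypothetical helper: uses queryUrl/updateUrl when present, else baseUrl,
    then replaces ${DATASET} with the dataset name.
    """
    if kind not in ("query", "update"):
        raise ValueError("kind must be 'query' or 'update'")
    url = properties.get(kind + "Url") or properties["baseUrl"]
    if DATASET_PLACEHOLDER in url:
        if not dataset:
            raise ValueError("this endpoint URL needs a dataset name")
        # Plain string replacement: other '$' characters (as in '/$/server') are untouched.
        url = url.replace(DATASET_PLACEHOLDER, dataset)
    return url
```

With the Fuseki properties above, `resolve_endpoint(props, "query", "dataset1")` gives `http://localhost:8080/fuseki/dataset1/query`, while the DBpedia data source, which defines only baseUrl, always resolves to `http://dbpedia.org/sparql`.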

11.2.2 RDF Datasets

Each RDF data source contains metadata information that describes the available RDF objects.

The following describes the metadata information defined by each provider.

- Oracle RDF data sources: The RDF metadata includes information about the following RDF objects: private networks, models (real, virtual, view), rule bases, entailments, network indexes, and datatypes.
- External RDF providers: For Apache Jena Fuseki, the metadata includes dataset names. Other external providers may not have a metadata concept, in which case the base URL points to generic (default) metadata.

RDF datasets point to one or more RDF objects available in the RDF data source. A dataset definition is used in SPARQL query requests. Each provider has its own set of properties to describe the RDF dataset.

The following are a few examples of a JSON representation of a dataset.

Oracle RDF dataset definition:

    [
        {
            "networkOwner": "RDFUSER",
            "networkName": "MYNET",
            "models": ["M1"]
        }
    ]

Apache Jena Fuseki dataset definition:

    [
        {
            "name": "dataset1"
        }
    ]

For RDF stores that do not have specific datasets, you can use an empty JSON object ({}) or a dataset named 'Default', in the same format as the Apache Jena Fuseki example above.
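A minimal Python sketch of building these dataset definitions follows. All function names are hypothetical. The {"metadata": [...]} wrapper used for the datasetDef request parameter is copied from the REST examples in REST Services, which use Oracle datasets. Applying the same wrapper to other providers is an assumption.

```python
import json


def oracle_dataset(network_owner: str, network_name: str, models: list) -> list:
    """Oracle RDF dataset: a network (owner and name) and the models to query."""
    return [{"networkOwner": network_owner, "networkName": network_name,
             "models": list(models)}]


def named_dataset(name: str = "Default") -> list:
    """Dataset identified by name, as for Apache Jena Fuseki; 'Default' is for
    stores without specific datasets."""
    return [{"name": name}]


def dataset_def_param(dataset: list) -> str:
    """Compact JSON for the datasetDef request parameter.

    Assumption: the {"metadata": [...]} wrapper from the Oracle REST examples
    is used for every provider.
    """
    return json.dumps({"metadata": dataset}, separators=(",", ":"))
```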


11.2.3 REST Services

An RDF REST API allows communication between client and backend RDF data stores.

The REST services can be divided into the following groups:

- Server generic services: allow access to the available data sources, and to configuration settings for general, proxy, and logging parameters.
- Oracle RDF services: allow CRUD operations on Oracle RDF objects.
- SPARQL services: allow execution of SPARQL queries and updates on the data sources.

Assuming that the RDF web application is deployed with its context root set to orardf, on the localhost machine and port number 7101, the base URL for REST requests is http://localhost:7101/orardf/api/v1.

Note:

The examples in this section and throughout this chapter refer to the host machine as localhost and the port number as 7101. These values can vary depending on your application deployment.

The following are some RDF REST examples:

- Get the server information. This is a public endpoint URL that can be used to test whether the server is up and running:

    http://localhost:7101/orardf/api/v1/utils/version

- Get a list of data sources:

    http://localhost:7101/orardf/api/v1/datasources

- Get general configuration parameters:

    http://localhost:7101/orardf/api/v1/configurations/general

- Get a list of RDF semantic networks for Oracle RDF:

    http://localhost:7101/orardf/api/v1/networks?datasource=rdfuser_ds_193c

- Get a list of all Oracle RDF models for the MDSYS network:

    http://localhost:7101/orardf/api/v1/models?datasource=rdfuser_ds_193c

- Get a list of all Oracle RDF real models for a private semantic network (applies to 19c and later databases):

    http://localhost:7101/orardf/api/v1/models?datasource=rdfuser_ds_193c&networkOwner=RDFUSER&networkName=LOCALNET&type=real

- POST request for a SPARQL query:

    http://localhost:7101/orardf/api/v1/datasets/query?datasource=rdfuser_ds_193c&datasetDef={"metadata":[ {"networkOwner":"RDFUSER", "networkName":"LOCALNET","models":["UNIV_BENCH"]} ] }

  Query payload:

    select ?s ?p ?o where { ?s ?p ?o} limit 10

- GET request for a SPARQL query:

    http://localhost:7101/orardf/api/v1/datasets/query?datasource=rdfuser_ds_193c&query=select ?s ?p ?o where { ?s ?p ?o} limit 10&datasetDef={"metadata":[ {"networkOwner":"RDFUSER", "networkName":"LOCALNET","models":["UNIV_BENCH"]} ] }

- PUT request to publish an RDF model:

    http://localhost:7101/orardf/api/v1/datasets/publish/DSETNAME?datasource=rdfuser_ds_193c&datasetDef={"metadata":[ {"networkOwner":"RDFUSER", "networkName":"LOCALNET", "models":["UNIV_BENCH"]} ]}

  Default SPARQL query payload:

    select ?s ?p ?o where { ?s ?p ?o} limit 10

  This default SPARQL query can be overridden when requesting the contents of a published dataset.

- GET request for a published dataset. This is a public endpoint URL. It uses the default parameters (SPARQL query, output format, and others) that are stored in the dataset definition. However, these default parameters can be overridden in the REST request by passing new parameter values:

    http://localhost:7101/orardf/api/v1/datasets/query/published/DSETNAME?datasource=rdfuser_ds_193c

A detailed list of available REST services can be found in the Swagger JSON file, orardf_swagger.json, which is packaged in the application documentation directory.
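The example URLs above are shown unencoded for readability. An HTTP client needs the query and datasetDef values percent-encoded, because they contain spaces, braces, and quotes. The following Python sketch builds the GET request URL for /datasets/query with the standard library. The base URL assumes the orardf context root on localhost:7101, as in the examples. The function name is hypothetical, and authentication is not shown.

```python
import json
from urllib.parse import quote, urlencode

# Assumes the orardf context root on localhost:7101, as in the examples above.
BASE_URL = "http://localhost:7101/orardf/api/v1"


def sparql_get_url(datasource: str, query: str, dataset_def: dict,
                   base_url: str = BASE_URL) -> str:
    """GET URL for /datasets/query with every parameter value percent-encoded.

    quote_via=quote encodes spaces as %20 (rather than '+'); the datasetDef
    object is serialized to compact JSON before encoding.
    """
    params = {
        "datasource": datasource,
        "query": query,
        "datasetDef": json.dumps(dataset_def, separators=(",", ":")),
    }
    return f"{base_url}/datasets/query?{urlencode(params, quote_via=quote)}"
```

For example, passing "rdfuser_ds_193c", the query `select ?s ?p ?o where { ?s ?p ?o} limit 10`, and the UNIV_BENCH datasetDef object produces the encoded form of the GET example above. Sending it (for instance with `urllib.request.urlopen`) requires a running server and whatever authentication your application server enforces for non-public endpoints.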


11.3 Oracle RDF Graph Query UI

The Oracle RDF Graph Query UI is an Oracle JET based client that can be used to manage RDF objects from different data sources, and to perform SPARQL queries and updates.

This Java EE application helps to build application webpages that query and display RDF graphs. It supports queries across multiple data sources.

- Installing RDF Graph Query UI

- Managing User Roles for RDF Graph Query UI

- Getting Started with RDF Graph Query UI

- Accessibility


11.3.1 Installing RDF Graph Query UI

To get started with the Oracle RDF Graph Query UI, you must download and install the application.

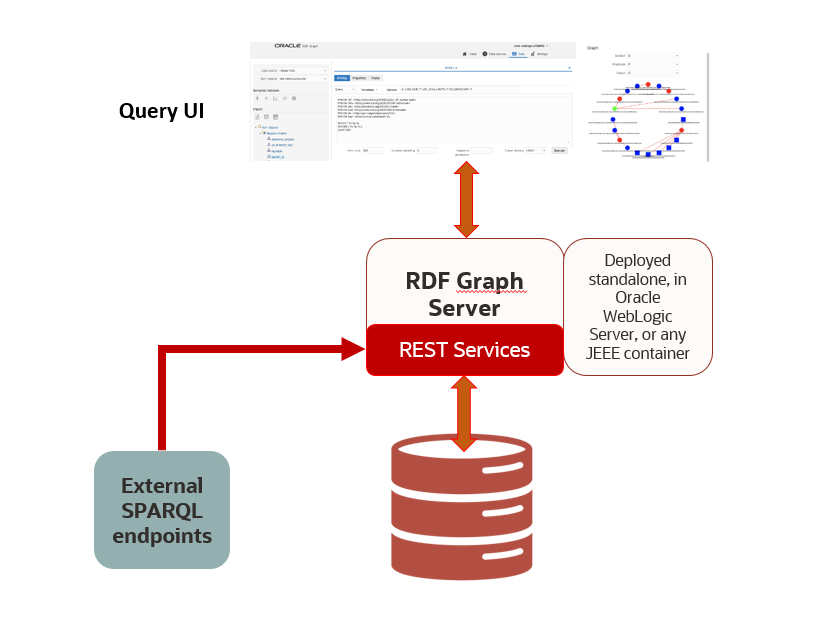

Go to the Oracle Graph Server and Client downloads page and download Oracle Graph Webapps.

The deployment contains the files as shown in the following figure:

Figure 11-2 Oracle Graph Webapps deployment

The deployment of the RDF .war file provides the Oracle RDF Graph Query UI console.

The rdf-doc folder contains the User Guide documentation.

This deployment also includes the REST API running on the application server to handle communication between users and backend RDF data stores.


11.3.2 Managing User Roles for RDF Graph Query UI

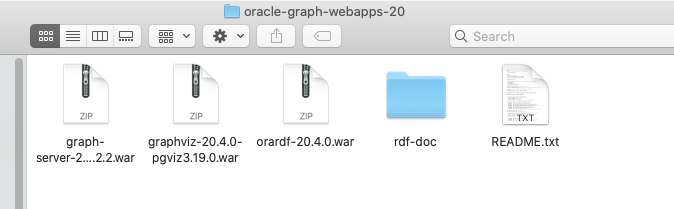

Users will have access to the application resources based on their role level. In order to access the Query UI application, you need to enable a role for the user.

The following describes the different user roles and their privileges:

- Administrator: An administrator has full access to the Query UI application and can update configuration files, manage RDF objects, and execute SPARQL queries and SPARQL updates.
- RDF: An RDF user can read or write Oracle RDF objects and can execute SPARQL queries and SPARQL updates, but cannot modify configuration files.
- Guest: A guest user can only read Oracle RDF objects and can only execute SPARQL queries.

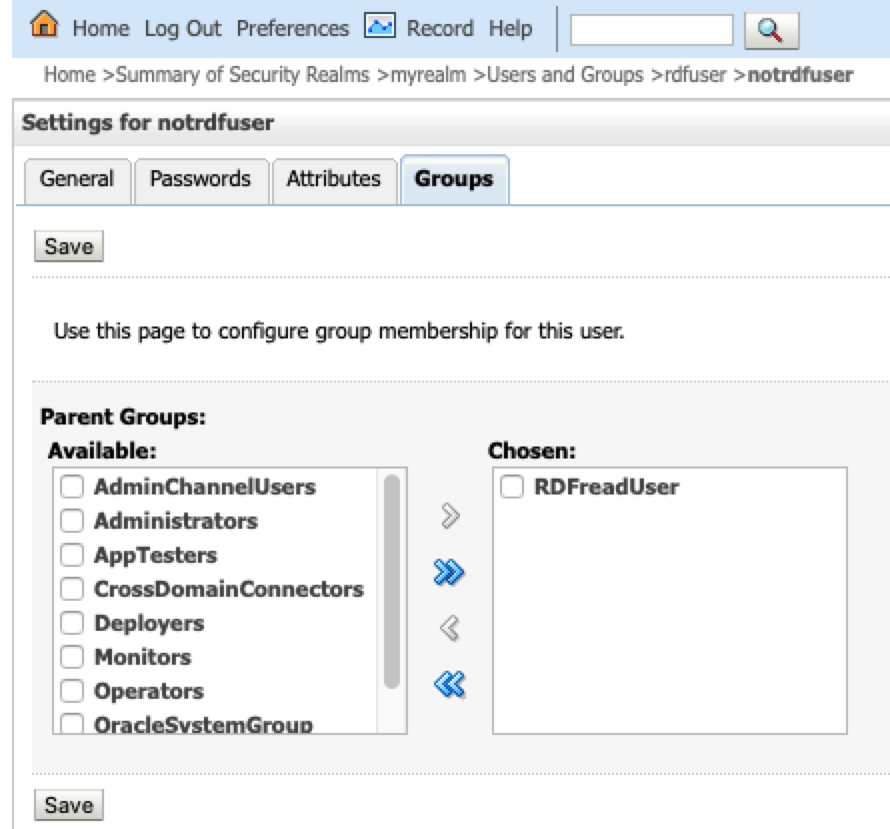

Figure 11-3 User Roles for RDF Graph Query

Application servers, such as WebLogic Server, Tomcat, and others, allow you to define and assign users to user groups. Administrators are set up at the time of the RDF Graph server installation, but the RDF and guest users must be created to access the application console.


11.3.2.1 Managing Groups and Users in WebLogic Server

The security realms in WebLogic Server ensure that the user information entered as part of the installation is added by default to the Administrators group. Any user assigned to this group has full access to the RDF Graph Query UI application.

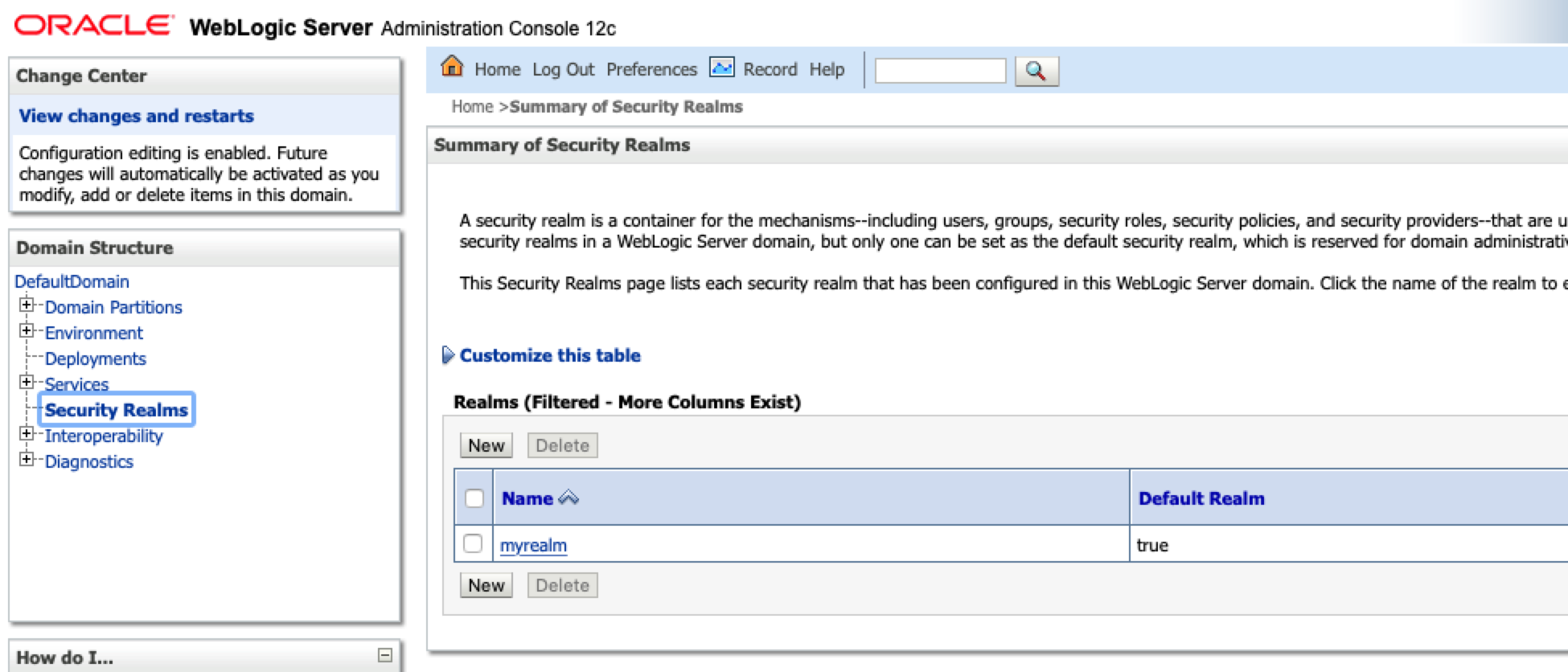

To open the WebLogic Server Administration Console, enter http://localhost:7101/console in your browser and log in using your administrative credentials. Click Security Realms, as shown in the following figure:

Figure 11-4 WebLogic Server Administration Console


11.3.2.1.1 Creating User Groups in WebLogic Server

To create new user groups in WebLogic Server:

-

Select the security realm from the listed Realms in Figure 11-4.

-

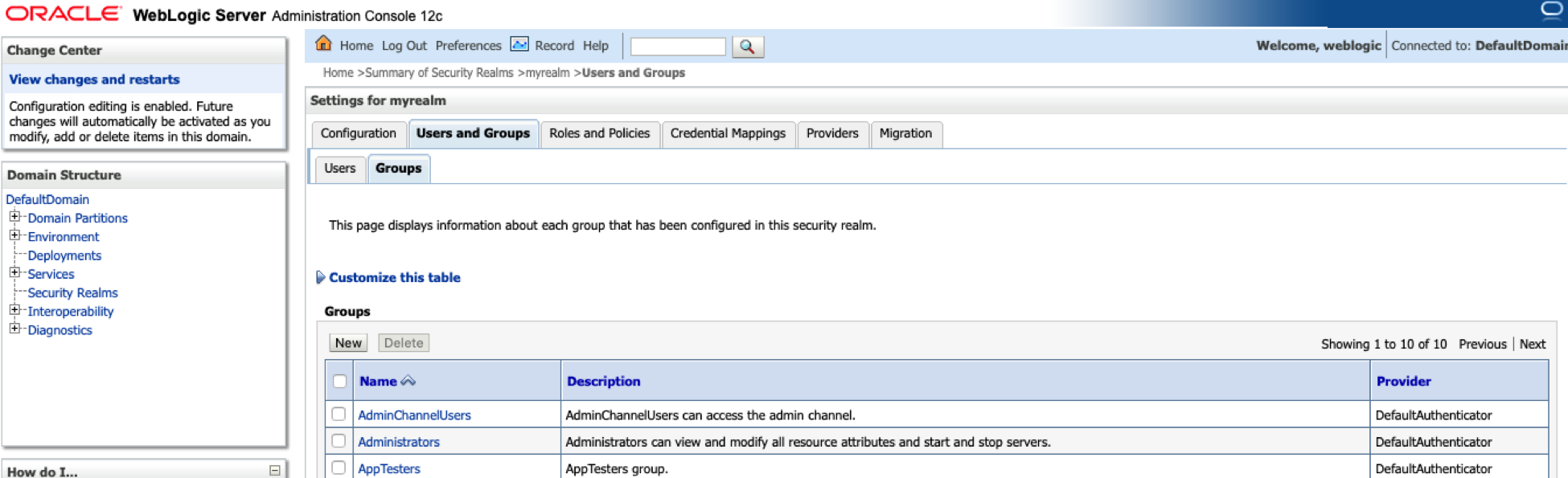

Click Users and Groups and then Groups.

-

Click New to create new RDF user groups in Weblogic as shown below:

Figure 11-5 Creating new user groups in WebLogic Server

The following example creates the following two user groups:

-

RDFreadUser: for guest users with just read access to application.

-

RDFreadwriteUser: for users with read and write access to RDF objects.

Figure 11-6 Created User Groups in WebLogic Server


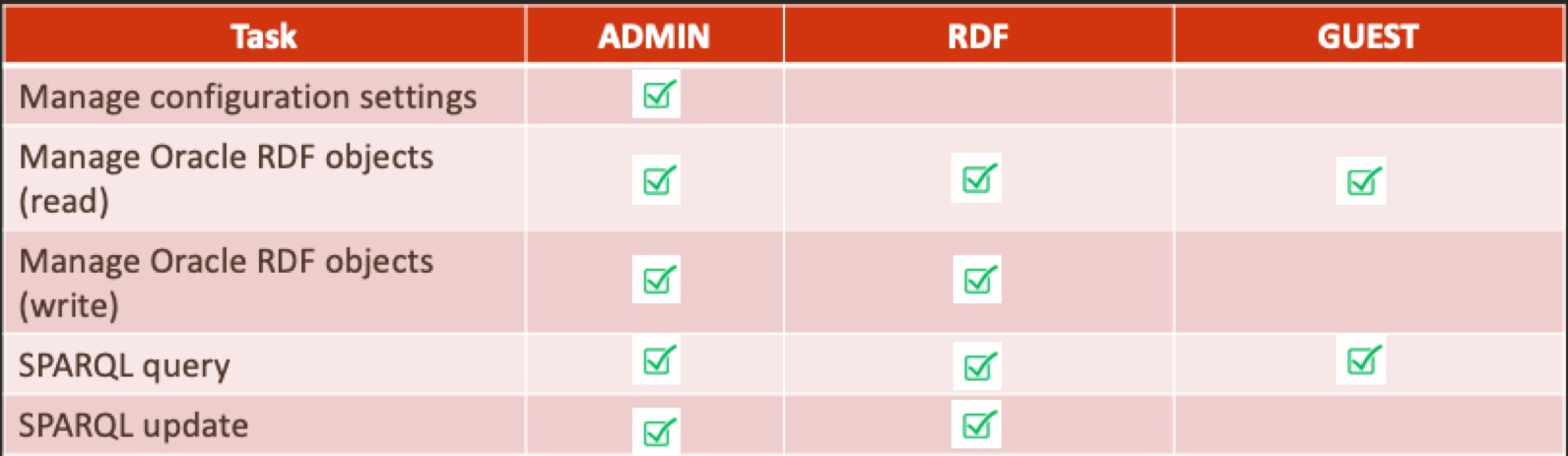

11.3.2.1.2 Creating RDF and Guest Users in WebLogic Server

In order to have RDF and guest users in the user groups you must first create the RDF and guest users and then assign them to their respective groups.

To create new RDF and guest users in WebLogic server:

Prerequisites: RDF and guest users groups must be available or they must be created. See Creating User Groups in WebLogic Server for creating user groups.

-

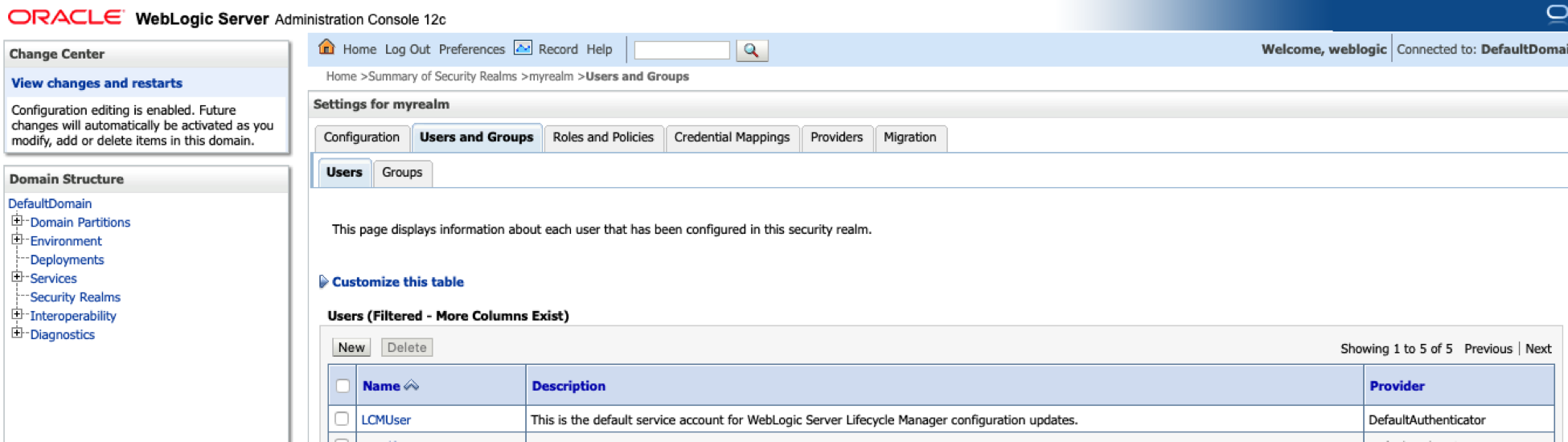

Select the security realm from the listed Realms as seen in Figure 11-4

-

Click Users and Groups tab and then Users.

-

Click New to create the RDF and guest users.

Figure 11-7 Create new users in WebLogic Server

The following example creates two new users :

-

rdfuser: user to be assigned to group with read and write privileges.

-

nonrdfuser: guest user to be assigned to group with just read privileges.

-

-

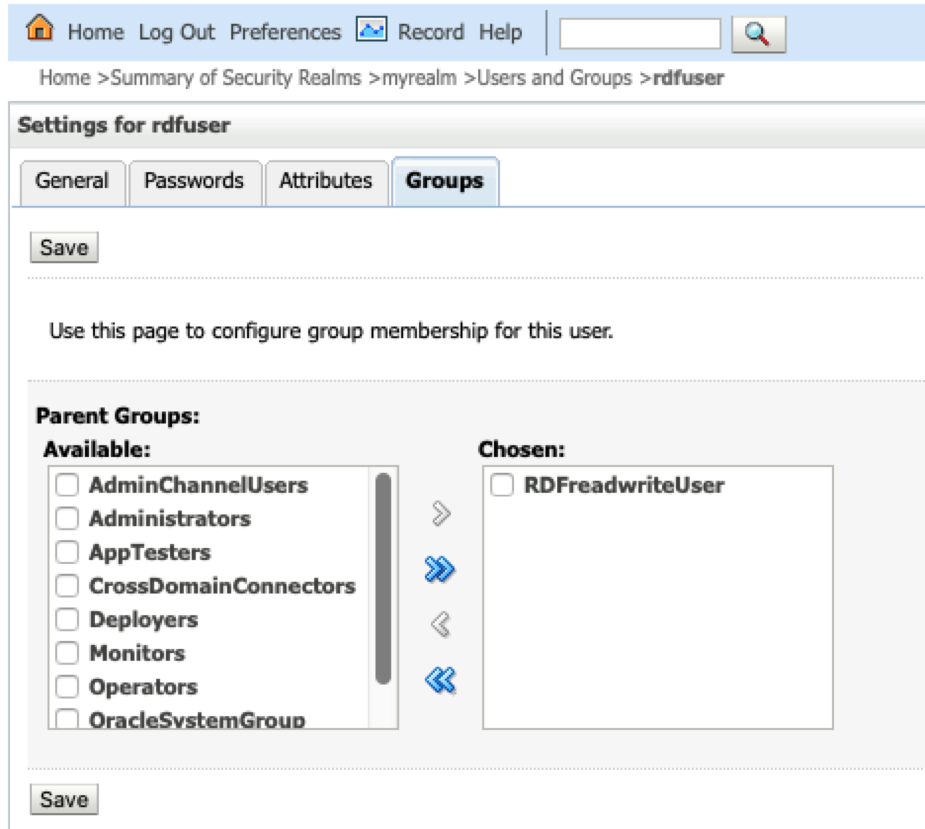

Select a user name and click Groups to assign the user to a specific group.

-

Assign rdfuser to RDFreadwriteUser group.

Figure 11-9 RDF User

-

Assign nonrdfuser to RDFreadUser group.

Figure 11-10 RDF Guest User


11.3.2.2 Managing Users and Roles in Tomcat Server

For Apache Tomcat, edit the Tomcat users file conf/tomcat-users.xml to include the RDF user roles. For example:

    <tomcat-users xmlns="http://tomcat.apache.org/xml"
                  xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
                  version="1.0"
                  xsi:schemaLocation="http://tomcat.apache.org/xml tomcat-users.xsd">
      <role rolename="rdf-admin-user"/>
      <role rolename="rdf-read-user"/>
      <role rolename="rdf-readwrite-user"/>
      <user password="adminpassword" roles="manager-script,admin,rdf-admin-user" username="admin"/>
      <user password="rdfuserpassword" roles="rdf-readwrite-user" username="rdfuser"/>
      <user password="notrdfuserpassword" roles="rdf-read-user" username="notrdfuser"/>
    </tomcat-users>
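To check which Query UI access level each Tomcat user ends up with, the following Python sketch parses a tomcat-users.xml file. It maps the RDF roles from the example above to the Administrator, RDF, and Guest levels described in Managing User Roles for RDF Graph Query UI. The sketch assumes the role names used in this example; if your deployment uses different role names, adjust RDF_ROLES. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Default namespace declared on <tomcat-users> in the example above.
NS = {"t": "http://tomcat.apache.org/xml"}

# Assumed role names (from the example), ordered from highest to lowest access level.
RDF_ROLES = [("rdf-admin-user", "Administrator"),
             ("rdf-readwrite-user", "RDF"),
             ("rdf-read-user", "Guest")]


def rdf_access_levels(tomcat_users_xml: str) -> dict:
    """Map each user name to the highest RDF access level its roles grant,
    or None when the user has no RDF role."""
    root = ET.fromstring(tomcat_users_xml)
    levels = {}
    for user in root.findall("t:user", NS):
        roles = {r.strip() for r in user.get("roles", "").split(",")}
        levels[user.get("username")] = next(
            (level for role, level in RDF_ROLES if role in roles), None)
    return levels
```

For the example file, admin maps to Administrator, rdfuser to RDF, and notrdfuser to Guest.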

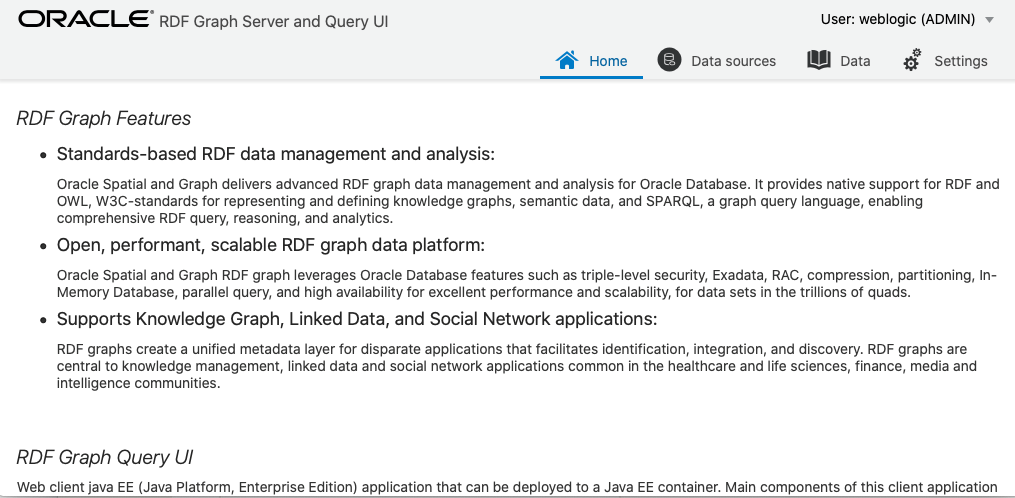

11.3.3 Getting Started with RDF Graph Query UI

The Oracle Graph Query UI contains a main page with RDF graph feature details and links to get started.

Figure 11-11 Query UI Main Page

The main page includes the following:

- Home: Get an overview of the Oracle RDF Graph features.
- Data sources: Manage your data sources.
- Data: Manage, query, or update RDF objects.
- Settings: Set your configuration parameters.


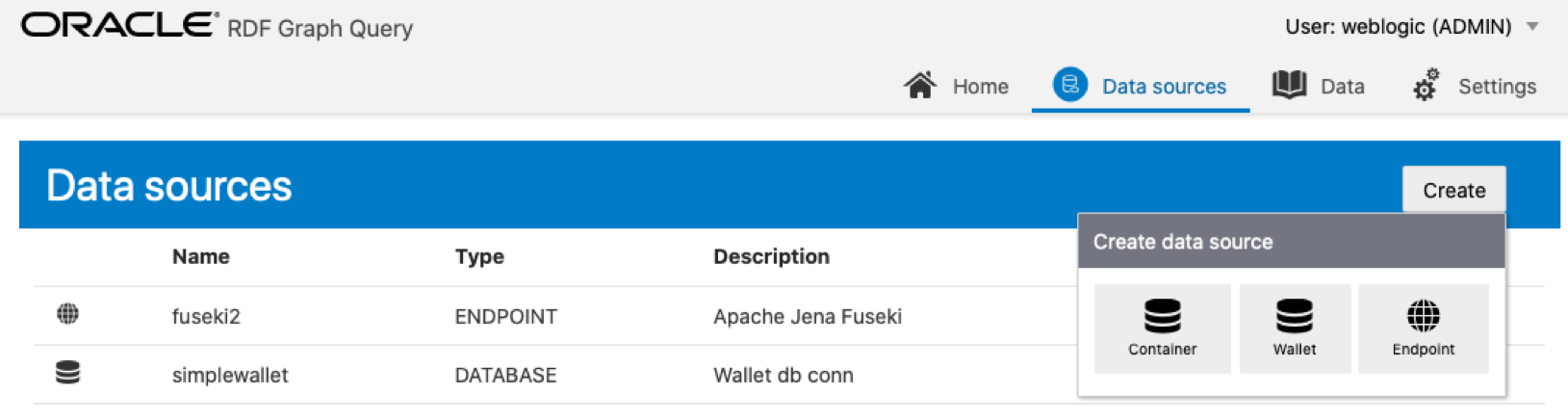

11.3.3.1 Data Sources Page

The Data Sources page allows you to create different types of data sources. Only administrator users can manage data sources. The RDF store can be linked to an Oracle Database or to an external RDF data provider. For Oracle data sources, there are two types of connections:

- JDBC data source defined on an application server

- Oracle wallet connection defined in a zip file

These database connections must be available in order to link the RDF web application to the data source.

To create a data source, click Data Sources, then Create.

Figure 11-12 Data Sources Page

11.3.3.1.1 Oracle Container

To create a container data source for the UI, the JDBC data source must exist in the application server.

- Creating a JDBC Data Source in WebLogic Server

- Creating a JDBC Data Source in Tomcat

- Creating an Oracle Container Data Source


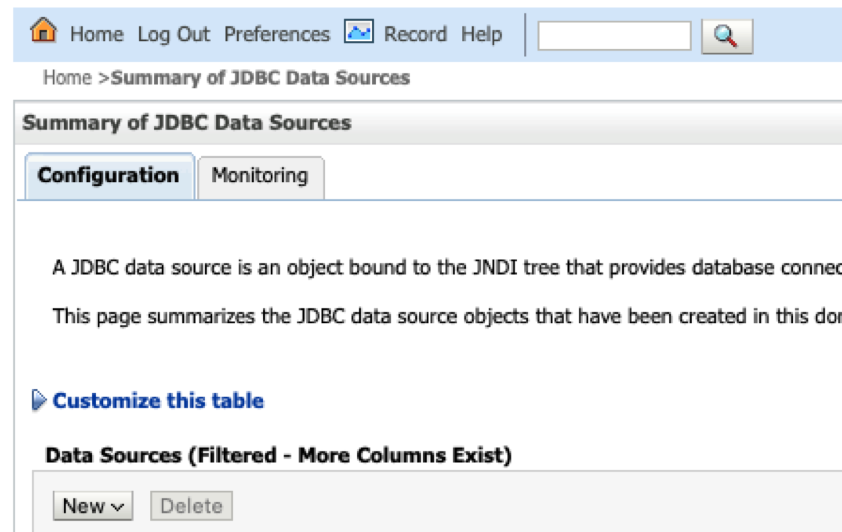

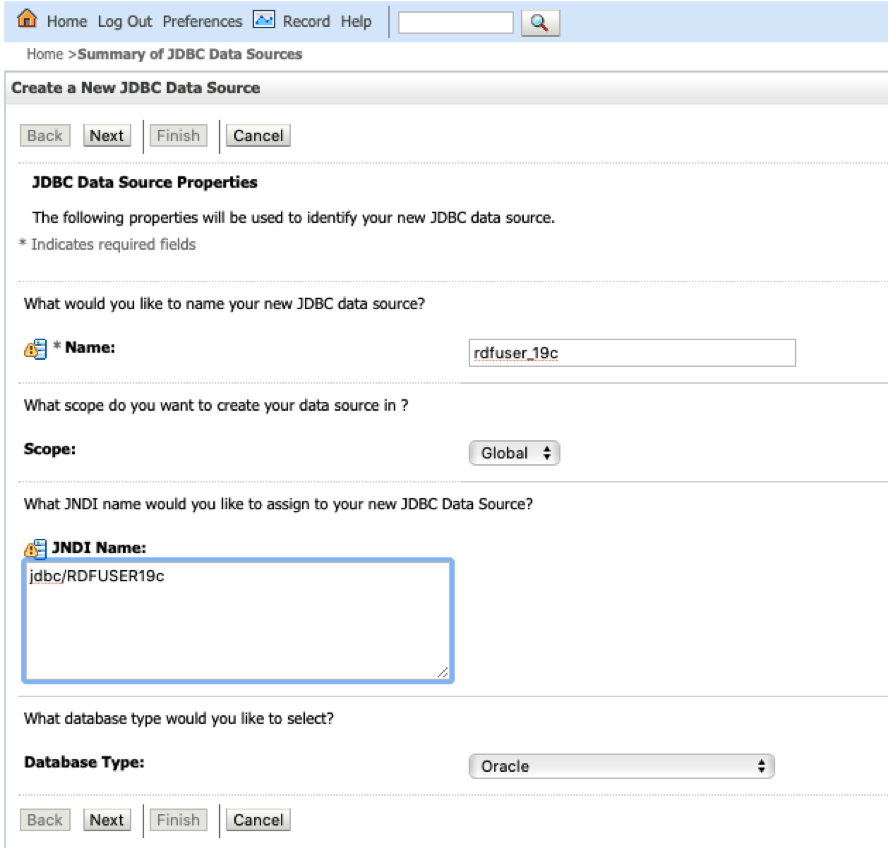

11.3.3.1.1.1 Creating a JDBC Data Source in WebLogic Server

To create a JDBC data source in WebLogic Server:

-

Log in to the WebLogic administration console as an administrator:

http://localhost:7101/console. -

Click Services, then JDBC Data sources.

-

Click New and select the Generic data source menu option to create a JDBC data source.

Figure 11-13 Generic Data Source

-

Enter the JDBC data source information (name and JNDI name), then click Next.

Figure 11-14 JDBC Data Source and JNDI

-

Accept the defaults on the next two pages.

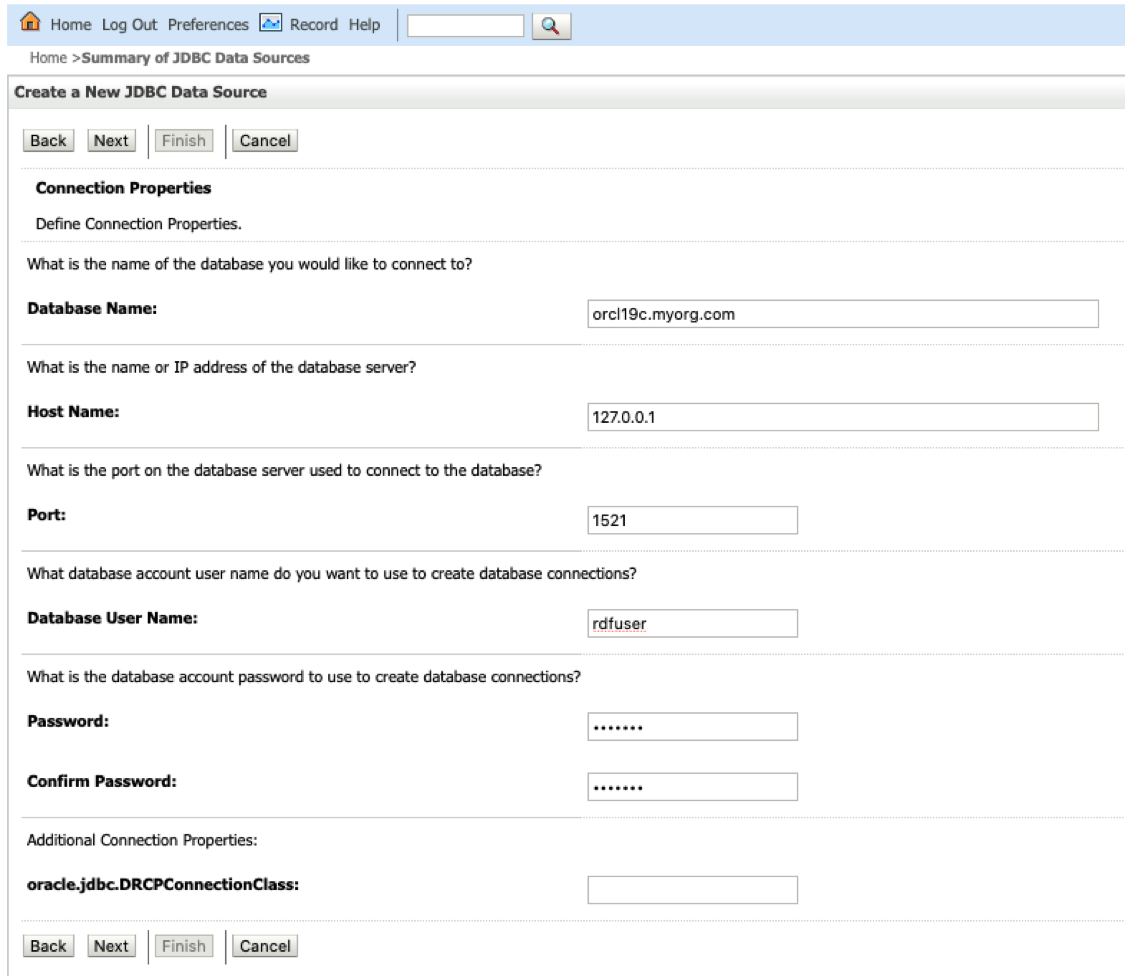

-

Enter the database connection information: service name, host, port, and user credentials.

Figure 11-15 Create JDBC Data Source

-

Click Next to continue.

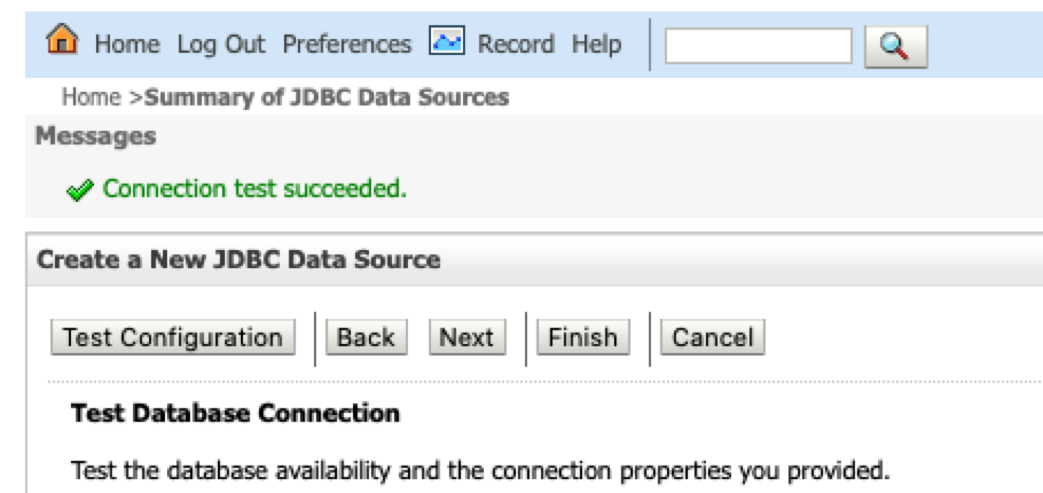

-

Click the Test Configuration button to validate the connection and click Next to continue.

Figure 11-16 Validate connection

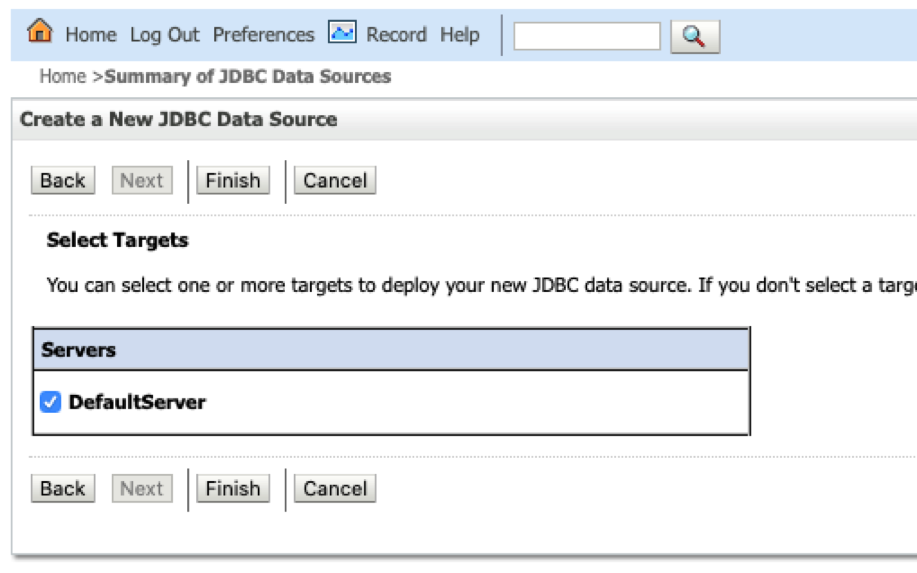

-

Select the server target and click Finish.

Figure 11-17 Create JDBC Data Source

The JDBC data gets added to the data source table and the JNDI name is added to the combo box list in the create container dialog.


11.3.3.1.1.2 Creating a JDBC Data Source in Tomcat

There are different ways to create a JDBC data source in Tomcat; see the Tomcat documentation for more details. The following example uses conf/server.xml and conf/context.xml.

- Add a global JNDI resource in conf/server.xml:

    <GlobalNamingResources>
      <Resource name="jdbc/RDFUSER19c" auth="Container" global="jdbc/RDFUSER19c"
                type="javax.sql.DataSource" driverClassName="oracle.jdbc.driver.OracleDriver"
                url="jdbc:oracle:thin:@host.name:db_port_number:db_sid"
                username="rdfuser" password="rdfuserpwd"
                maxTotal="20" maxIdle="10" maxWaitMillis="-1"/>
    </GlobalNamingResources>

- Add a resource link to the global JNDI resource in conf/context.xml:

    <Context>
      <ResourceLink name="jdbc/RDFUSER19c" global="jdbc/RDFUSER19c" auth="Container" type="javax.sql.DataSource"/>
    </Context>
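The global attribute of each ResourceLink in conf/context.xml must name a Resource defined under GlobalNamingResources in conf/server.xml; otherwise the link does not resolve. The following Python sketch is a hypothetical helper, not part of Tomcat or the RDF web application. It reports links whose global name has no matching resource.

```python
import xml.etree.ElementTree as ET


def unresolved_links(server_xml: str, context_xml: str) -> list:
    """Return ResourceLink 'global' names in context.xml that have no matching
    <Resource name=...> under <GlobalNamingResources> in server.xml."""
    server = ET.fromstring(server_xml)
    # Accept a full <Server> document or a bare <GlobalNamingResources> fragment.
    if server.tag == "GlobalNamingResources":
        global_resources = server
    else:
        global_resources = server.find("GlobalNamingResources")
    defined = set()
    if global_resources is not None:
        defined = {r.get("name") for r in global_resources.findall("Resource")}
    context = ET.fromstring(context_xml)
    return [link.get("global") for link in context.findall("ResourceLink")
            if link.get("global") not in defined]
```

Run it on the text of the two files (read with `open(...).read()`); an empty list means every link points at a defined global resource.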


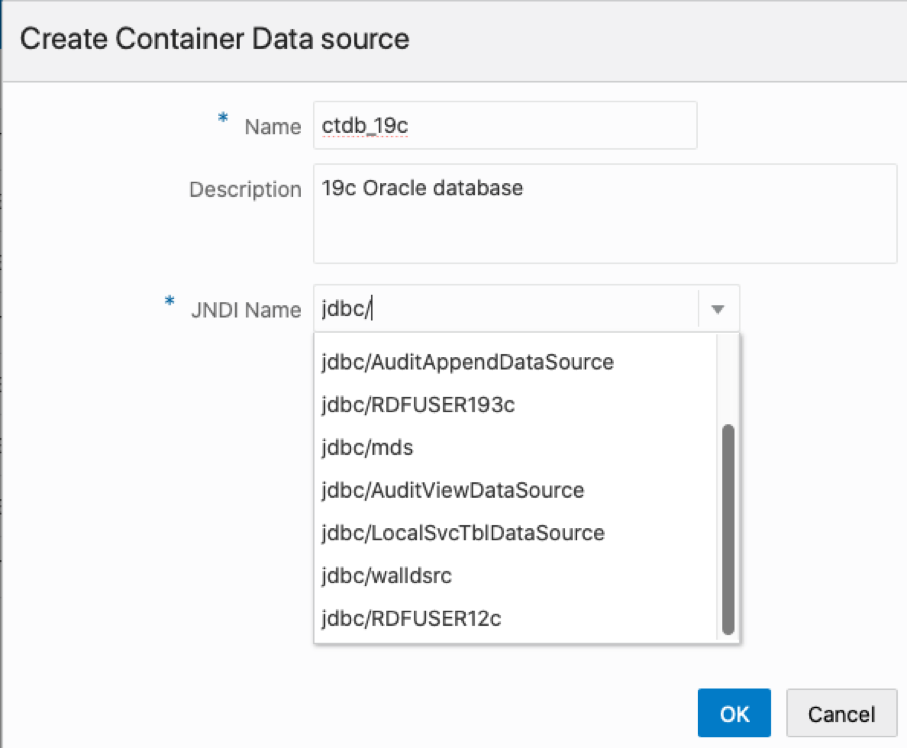

11.3.3.1.1.3 Creating an Oracle Container Data Source

To create an Oracle container data source from a JDBC data source that exists in the application server:

- Click the Container button.
- Enter the required Data Source Name, and select the JNDI name of a JDBC data source that exists on the application server.

Figure 11-18 Create Container Data Source


11.3.3.1.2 Oracle Wallet

Oracle Wallet provides a simple and easy method to manage database credentials across multiple domains. It lets you update database credentials by updating the wallet instead of having to change individual data source definitions. This is accomplished by using a database connection string in the data source definition that is resolved by an entry in the wallet.

The wallet can be a simple wallet for storing the SSO and PKI files, or a cloud wallet that also contains TNS information and other files.

To create a wallet data source in the Oracle Graph Query UI application, you must create a wallet zip file that stores the user credentials for each service. For security reasons, ensure that the file is stored in a safe location. The wallet files can be created using Oracle utilities such as mkstore or orapki, or using the Oracle Wallet Manager application.

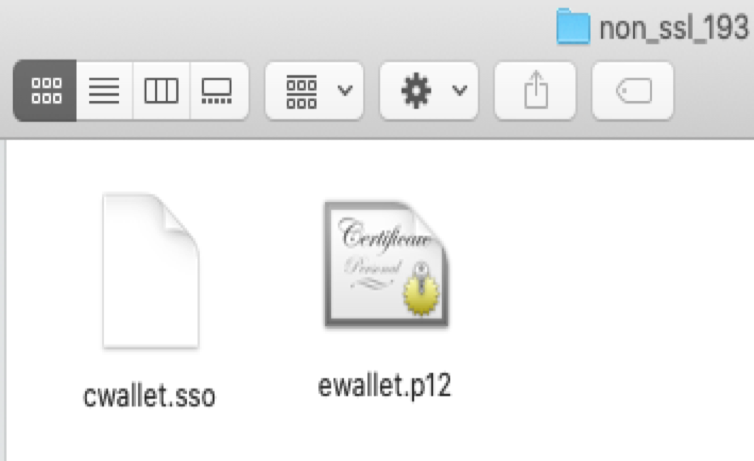

Creating a Simple Wallet

The following are the steps to create a Simple Wallet:

- Create the wallet directory:

    mkdir /tmp/wallet

- Create the wallet files using the mkstore utility. You are prompted for a wallet password; keep this password:

    ${ORACLE_HOME}/bin/mkstore -wrl /tmp/wallet -create

- Add a database connection with user credentials. In this case, the wallet service is a string with the host, port, and service name information:

    ${ORACLE_HOME}/bin/mkstore -wrl /tmp/wallet -createCredential host:port/serviceName username password

- Zip the wallet directory to make it available for use in the web application.

Figure 11-19 Simple Wallet

The created Simple wallet directory will contain the cwallet.sso and ewallet.p12 files.
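The last step (zipping the wallet directory) can be scripted. The following Python sketch puts the wallet files at the top level of the zip archive, without directory entries. This flat layout is assumed to match the cloud wallet zip file, but whether the upload also accepts a nested directory is not stated here, so treat the layout as an assumption. The function name is hypothetical.

```python
import os
import zipfile


def zip_wallet(wallet_dir: str, zip_path: str) -> list:
    """Zip the regular files in wallet_dir (for example cwallet.sso and
    ewallet.p12) at the top level of zip_path and return the archived names.

    Hypothetical helper; keep zip_path outside wallet_dir.
    """
    names = sorted(n for n in os.listdir(wallet_dir)
                   if os.path.isfile(os.path.join(wallet_dir, n)))
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name in names:
            # arcname=name drops the directory part, so no folder entry is written.
            zf.write(os.path.join(wallet_dir, name), arcname=name)
    return names
```

For example, `zip_wallet("/tmp/wallet", "/tmp/wallet.zip")` after the mkstore steps above should return `['cwallet.sso', 'ewallet.p12']`.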

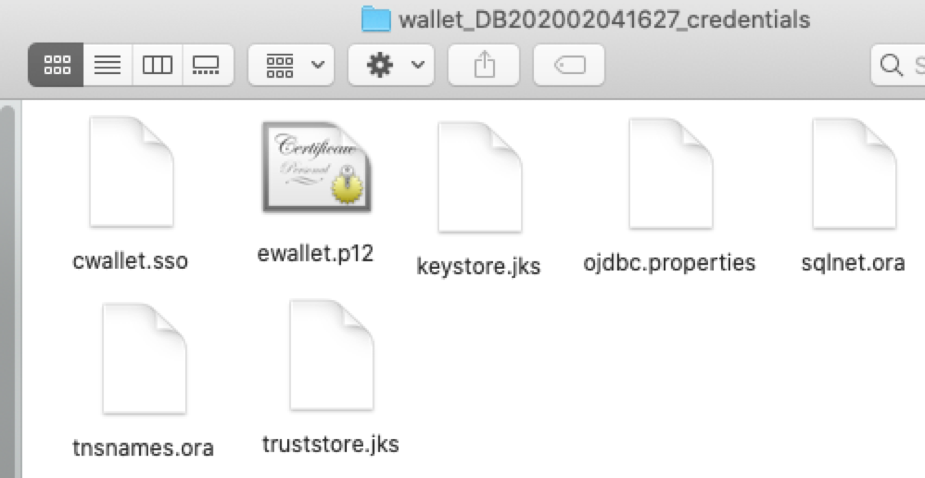

Creating an Oracle Cloud Wallet

The following are the steps to create an Oracle Cloud Wallet:

- Navigate to the Autonomous Database details page.
- Click DB Connection.
- On the Database Connection page, select the Wallet Type.
- Click Download.
- Enter the password information and download the file (the default file name is Wallet_databasename.zip).

The cloud zip file contains the files displayed in Figure 11-20. The tnsnames.ora file contains the wallet service alias names and TCPS information. However, it does not contain the user credentials for each service. To use the wallet with the Oracle RDF Graph Query web application for creating a data source, you must store the credentials in the wallet file. Perform the following steps to add credentials to the wallet zip file:

- Unzip the cloud wallet zip file into a temporary directory.
- Use the service name alias in the tnsnames.ora file to store credentials. For example, if the service name alias is db202002041627_medium:

    ${ORACLE_HOME}/bin/mkstore -wrl /tmp/cloudwallet -createCredential db202002041627_medium username password

- Zip the cloud wallet files into a new zip file.

Figure 11-20 Cloud Wallet

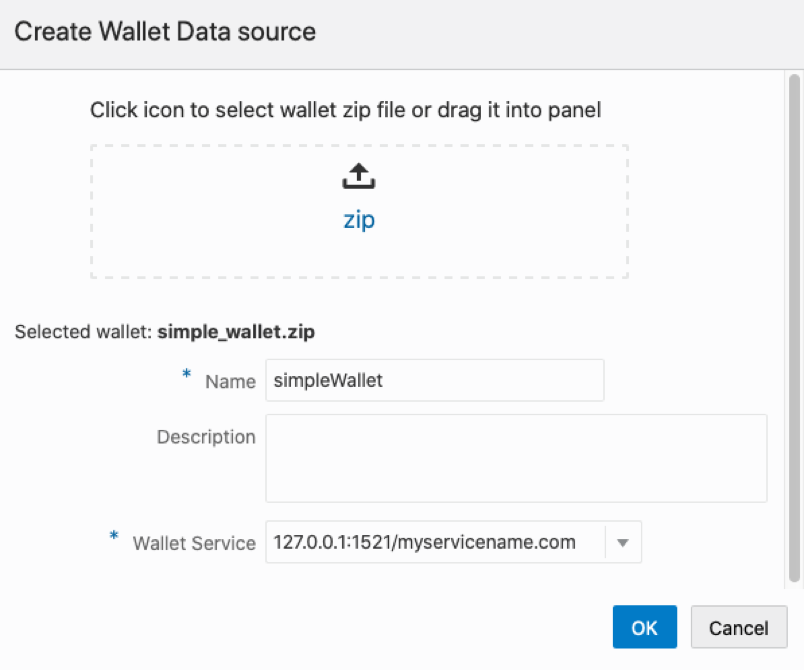

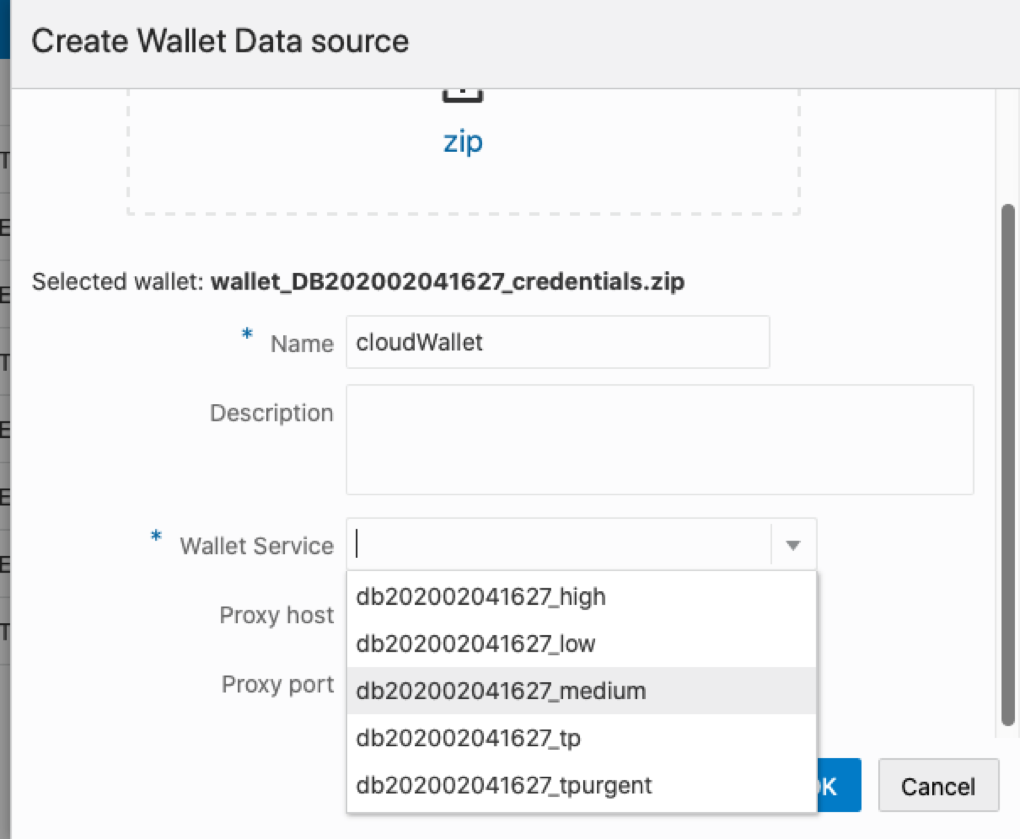

Creating a Wallet Data Source

Using the wallet zip file, you can create a Wallet data source in the Oracle RDF Graph Query web application. Click the Wallet button to display the wallet dialog, and perform the following steps:

- Click the upload button and select the wallet zip file. The zip file is uploaded to the server.
- Enter the required data source name.
- Enter the optional data source description.
- Define the wallet service:
  - For a simple wallet, enter the wallet service string stored in the wallet file.
  - For a cloud wallet, select the service name from the combo box, as shown in Figure 11-22.

Figure 11-21 Wallet Data Source from simple zip

Figure 11-22 Wallet Data Source from cloud zip


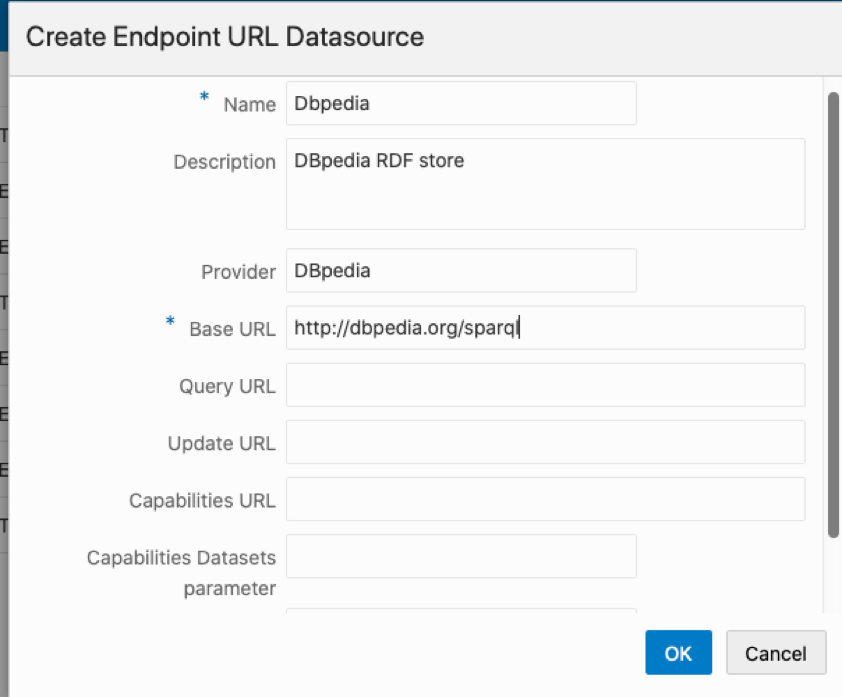

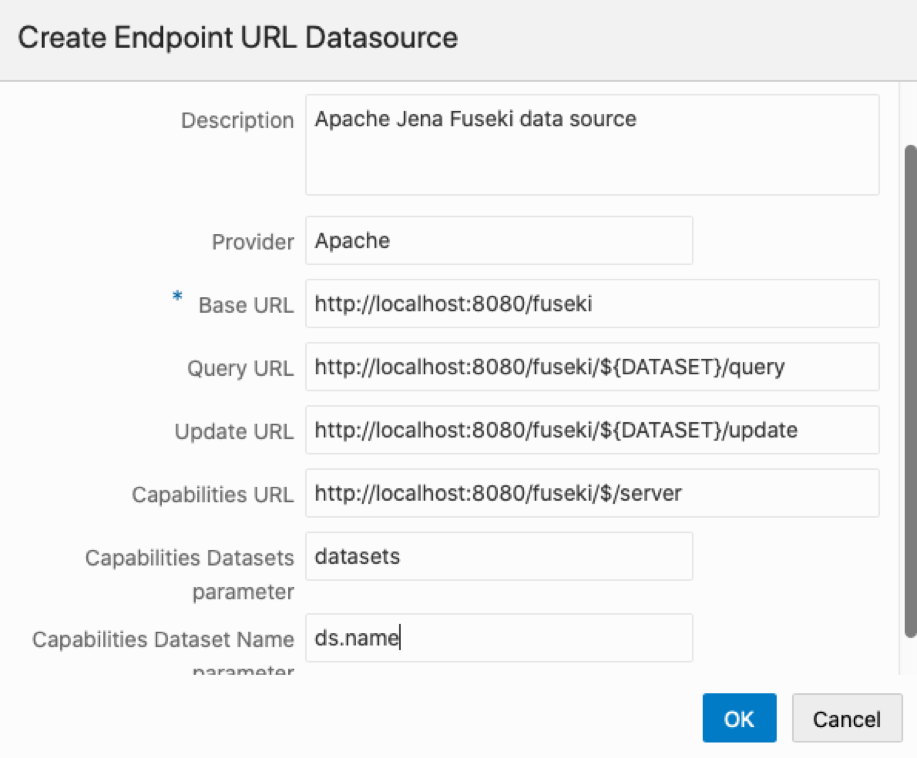

11.3.3.1.3 Endpoint URL

External data sources are connected to the RDF data store using the endpoint URL.

You can execute SPARQL queries and updates to the RDF data store using a base URL. In some cases, such as Apache Jena Fuseki, there are specific URLs based on the dataset name. For example:

- DBpedia base URL: http://dbpedia.org/sparql
- Apache Jena Fuseki (assuming a dataset named dset):
  - Query URL: http://localhost:8080/fuseki/dset/query
  - Update URL: http://localhost:8080/fuseki/dset/update

The RDF web application issues SPARQL queries to RDF datasets. These datasets can be retrieved from the provider if it supports a get capabilities request. For DBpedia, there is a single base URL, so the application handles a single default dataset. For Apache Jena Fuseki, the request http://localhost:8080/fuseki/$/server returns the RDF datasets available on the server. Using this request, the list of available datasets can be retrieved for specific use in an application.

To create an external RDF data source:

- Click the Endpoint button.

- Define the following parameters and then click OK:

- Name: the data source name.

- Description: optional description about data source.

- Provider: optional provider name.

- Base URL: base URL to access RDF service.

- Query URL: optional URL to execute SPARQL queries (if not defined, the base URL is used).

- Update URL: optional URL to execute SPARQL updates (if not defined, the base URL is used).

- Capabilities parameters: properties to retrieve dataset information from RDF server.

- Get URL: URL address that should return a JSON response with information about the dataset.

- Datasets parameter: the property in JSON response that contains the dataset information.

- Dataset name parameter: the property in datasets parameter that contains the dataset name.

Note:

For Jena Fuseki, the expression ${DATASET} will be replaced by the dataset name at runtime when SPARQL queries or SPARQL updates are being executed.
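The capabilities parameters describe where to find dataset names in the Get URL response. The following Python sketch applies them to an already-parsed JSON response (for example, the result of `json.loads` on the response body), using the Fuseki values datasets and ds.name as defaults. It treats ds.name as a literal key, not a nested path. It also strips a leading slash, because Fuseki may report names such as /dataset1. Both choices are assumptions about how the names are used. The function name is hypothetical.

```python
def dataset_names(capabilities: dict, datasets_param: str = "datasets",
                  name_param: str = "ds.name") -> list:
    """Extract dataset names from a parsed capabilities (Get URL) response.

    Hypothetical helper: datasets_param names the list of datasets, and
    name_param is looked up as a literal key in each entry.
    """
    names = []
    for entry in capabilities.get(datasets_param, []):
        name = entry.get(name_param)
        if name:
            # Strip a leading "/" so the name can be substituted for ${DATASET}.
            names.append(name.lstrip("/"))
    return names
```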

The following figures show examples of creating DBpedia and Apache Jena Fuseki data sources.

Figure 11-23 DBpedia Data Source

Figure 11-24 Apache Jena Fuseki Data Source


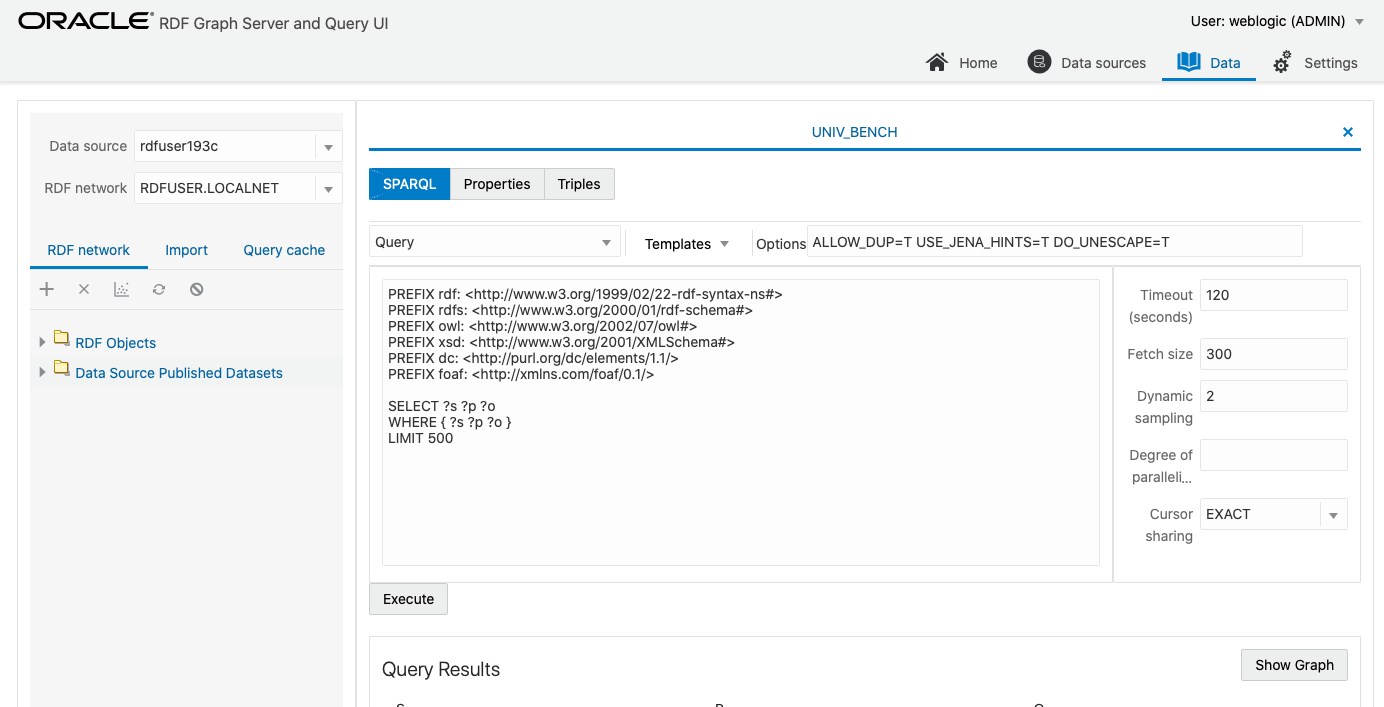

11.3.3.2 RDF Data Page

You can manage and query RDF objects in the RDF Data page.

Figure 11-25 RDF Data Page

The left panel contains information about the RDF data available in the data source. The right panel is used for opening the properties of an RDF object. Depending on the property type, SPARQL queries and SPARQL updates can be executed.

- Data Source Selection

- Semantic Network Actions

- Importing Data

- SPARQL Query Cache Manager

- RDF Objects Navigator

- Performing SPARQL Query and SPARQL Update Operations

- Publishing Oracle RDF Models


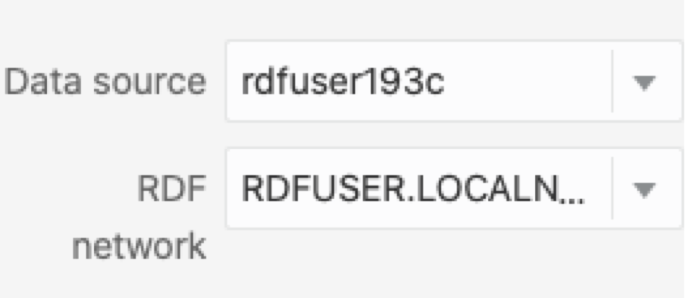

11.3.3.2.1 Data Source Selection

The data source can be selected from the list of available data sources shown in Figure 11-25.

Figure 11-26 RDF Network

Note:

Before Release 19, all semantic networks were stored in the MDSYS schema. From Release 19 onwards, private networks are supported.

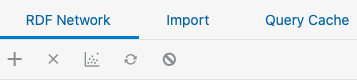

11.3.3.2.2 Semantic Network Actions

You can execute the following semantic network actions:

Figure 11-27 RDF Semantic Network Actions

- Create a semantic network.

- Delete a semantic network.

- Gather statistics for a network.

- Refresh network indexes.

- Purge values not in use.


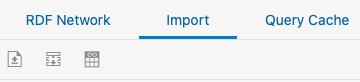

11.3.3.2.3 Importing Data

For Oracle semantic networks, data is generally imported into an RDF model by bulk loading the RDF triples that are available in a staging table.

Figure 11-28 RDF Import Data Actions

The available actions include:

- Upload one or more RDF files into an Oracle RDF staging table. This staging table can be reused in other bulk load operations. Files with the extensions .nt (N-Triples), .nq (N-Quads), .ttl (Turtle), and .trig (TriG) are supported for import. There is a limit on the size of an imported file, which can be tuned by an administrator. Zip files can also be used to import multiple files at once. However, the zip file is validated first, and is rejected if any of the following conditions occur:
  - The zip file contains directories.
  - A zip entry name extension is not a known RDF format (.nt, .nq, .ttl, .trig).
  - A zip entry size or compressed size is undefined.
  - A zip entry size exceeds the maximum unzipped entry size.
  - The inflate ratio between the compressed size and the file size is lower than the minimum inflate ratio.
  - The total size of the zip entries exceeds the maximum unzipped total size.
- Bulk load the staging table records into an Oracle RDF model.
- View the status of bulk load events.
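To check an archive before uploading it, the zip validation rules above can be approximated in Python with the standard zipfile module. In this sketch, the limit values are hypothetical (the real ones are server settings), the inflate ratio is interpreted as compressed size divided by uncompressed size, and any entry path containing "/" is treated as a directory. The check for an undefined entry size has no direct counterpart here, because zipfile reads sizes from the archive's central directory. The function name is hypothetical.

```python
import zipfile
from typing import Optional

RDF_EXTENSIONS = (".nt", ".nq", ".ttl", ".trig")

# Hypothetical limits: the real values are server settings tuned by an administrator.
MAX_ENTRY_SIZE = 100 * 1024 * 1024   # bytes per unzipped entry
MAX_TOTAL_SIZE = 500 * 1024 * 1024   # bytes for all unzipped entries together
MIN_INFLATE_RATIO = 0.01             # compressed size / uncompressed size


def zip_rejection_reason(source, max_entry: int = MAX_ENTRY_SIZE,
                         max_total: int = MAX_TOTAL_SIZE,
                         min_ratio: float = MIN_INFLATE_RATIO) -> Optional[str]:
    """Return None if the zip passes the checks, else a short rejection reason.

    source is a path or a binary file object. Sizes come from the central
    directory, so nothing is decompressed.
    """
    total = 0
    with zipfile.ZipFile(source) as zf:
        for info in zf.infolist():
            name = info.filename
            # Directory entries, or files stored under a folder path.
            if info.is_dir() or "/" in name:
                return f"contains directories: {name}"
            if not name.lower().endswith(RDF_EXTENSIONS):
                return f"not a known RDF format: {name}"
            if info.file_size > max_entry:
                return f"entry exceeds maximum unzipped size: {name}"
            # A tiny compressed/uncompressed ratio is typical of a zip bomb.
            if info.file_size and info.compress_size / info.file_size < min_ratio:
                return f"inflate ratio below minimum: {name}"
            total += info.file_size
            if total > max_total:
                return "entries exceed maximum unzipped total size"
    return None
```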


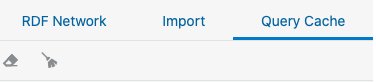

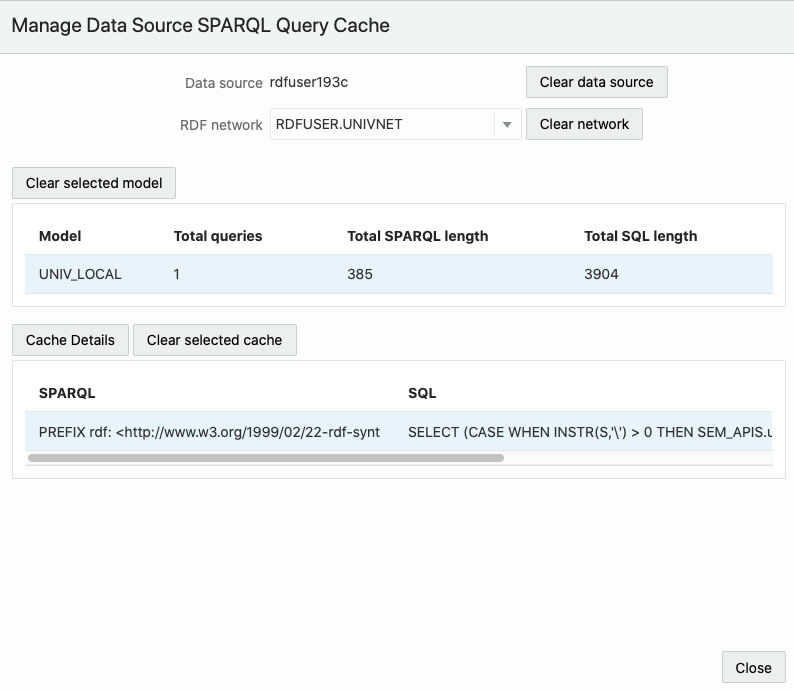

11.3.3.2.4 SPARQL Query Cache Manager

SPARQL queries are cached per data source, and caching applies to Oracle data sources. The translations of SPARQL queries into SQL expressions are cached for Oracle RDF network models, and each model can store up to 64 different SPARQL query translations. The Query Cache Manager dialog allows users to browse the network cache of a data source for queries executed on models.

Figure 11-29 SPARQL Query Cache Manager

You can clear cache at different levels. The following describes the cache cleared against each level:

- Data source: All network caches are cleared.

- Network: All model caches are cleared.

- Model: All cached queries for model are cleared.

- Model Cache Identifier: Selected cache identifier is cleared.

Figure 11-30 Manage SPARQL Query Cache


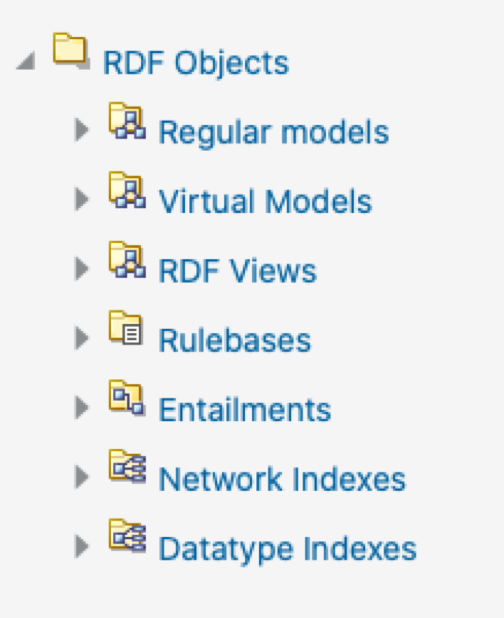

11.3.3.2.5 RDF Objects Navigator

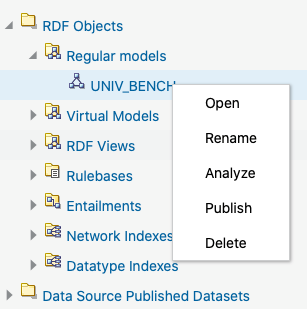

The navigator tree shows the available RDF objects for the selected data source.

-

For Oracle data sources, it will contain the different concept types like models, virtual models, view models, RDF view models, rule bases, entailments, network indexes, and datatype indexes.

Figure 11-31 RDF Objects for Oracle Data Source

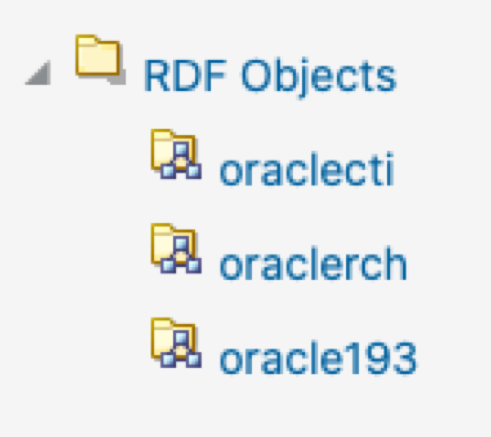

-

For endpoint RDF data sources, the RDF navigator will have a list of names representing the available RDF datasets in the RDF store.

Figure 11-32 RDF Objects from capabilities

-

If an external RDF data source does not have a capabilities URL, then just a default dataset is shown.

Figure 11-33 Default RDF Object

To execute SPARQL queries and SPARQL updates, open the selected RDF object in the RDF objects navigator. For Oracle RDF objects, SPARQL queries are available for models (regular models, virtual models, and view models).

Different actions can be performed on the navigator tree nodes. Right-clicking on a node under RDF objects will bring the context menu options (such as Open, Rename, Analyze, Publish, Delete) for that specific node. Guest users cannot perform actions that require a write privilege.

Figure 11-34 RDF Navigator - Context Menu


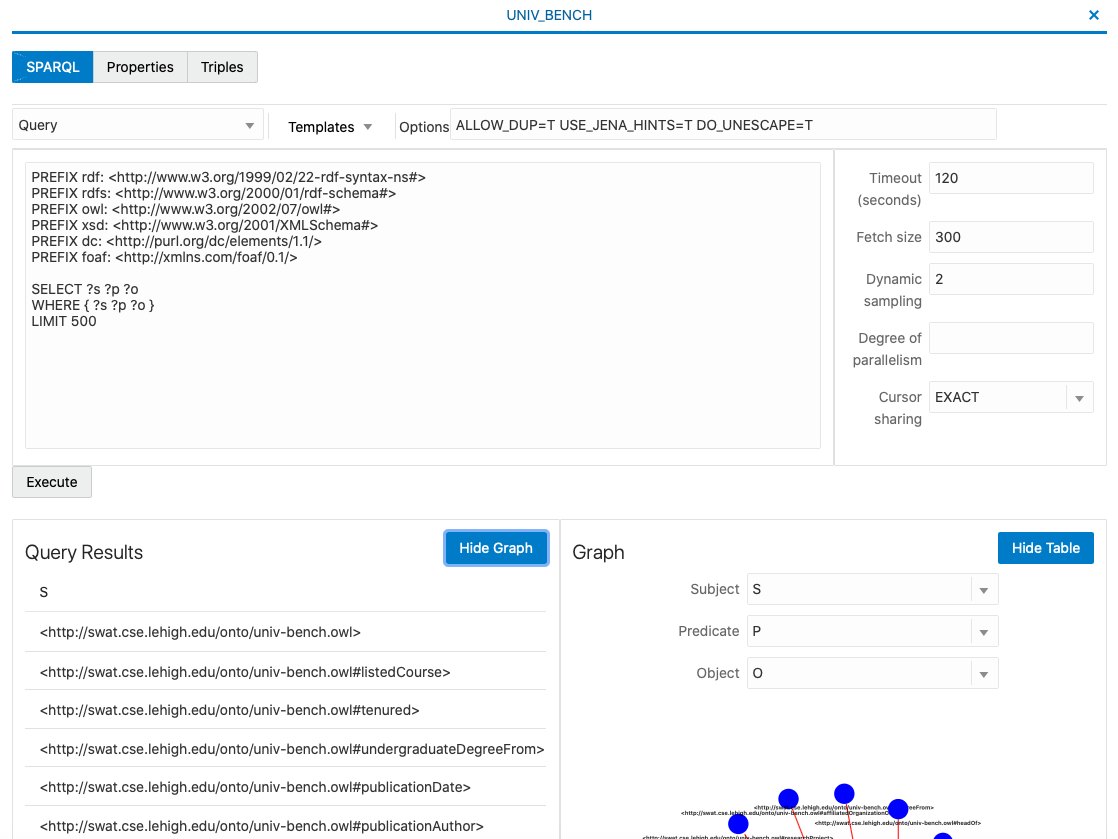

11.3.3.2.6 Performing SPARQL Query and SPARQL Update Operations

To execute SPARQL queries and updates, open the selected RDF object in the RDF objects navigator. For Oracle RDF objects, SPARQL queries are available for regular models, virtual models, and view models.

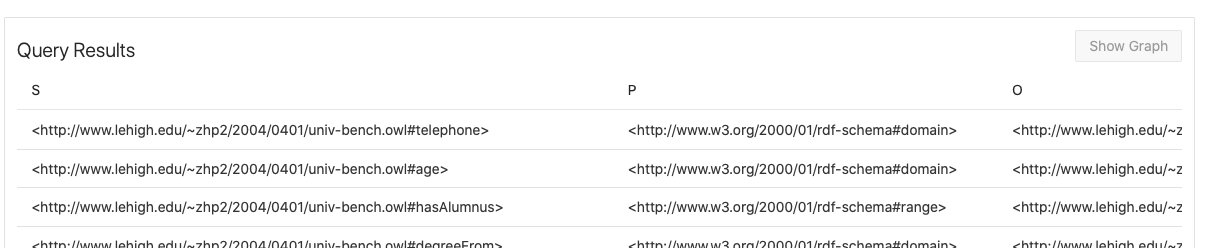

The following figure shows the SPARQL query page, containing the graph view.

Figure 11-35 SPARQL Query Page

The number of results of a SPARQL query is determined by the LIMIT clause in the SPARQL query string, or by the maximum number of rows that can be fetched from the server. As an administrator, you can set the maximum number of rows to be fetched on the Settings page.

A graph view can be displayed for the query results. On the graph view, you must map the columns for the triple values (subject, predicate, and object).
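Mapping result columns to subject, predicate, and object amounts to turning each result row into an edge. The following Python sketch does this for results in the W3C SPARQL 1.1 Query Results JSON format. The function names are hypothetical, and this is not the Query UI's implementation.

```python
def bindings_to_edges(results: dict, subject: str, predicate: str, obj: str) -> list:
    """Turn SPARQL JSON result rows into (subject, predicate, object) edges.

    subject, predicate, and obj name the result columns mapped to each triple
    position. Rows where a mapped column is unbound are skipped, because an
    edge needs all three values.
    """
    edges = []
    for row in results["results"]["bindings"]:
        if all(col in row for col in (subject, predicate, obj)):
            edges.append((row[subject]["value"],
                          row[predicate]["value"],
                          row[obj]["value"]))
    return edges


def graph_nodes(edges: list) -> set:
    """Nodes of the graph view: every subject and object value."""
    return {s for s, _, _ in edges} | {o for _, _, o in edges}
```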


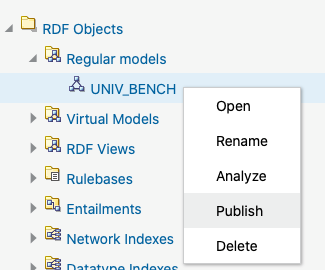

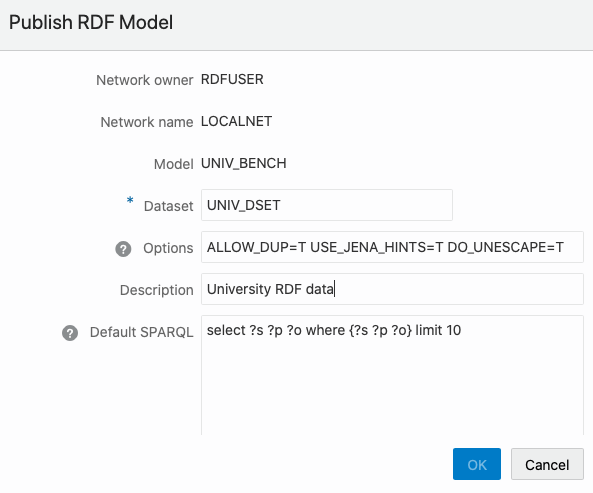

11.3.3.2.7 Publishing Oracle RDF Models

Oracle RDF models can be published as datasets, which then become available through a public REST endpoint for SPARQL queries. Applications can use this endpoint URL directly without supplying credentials.

To publish an Oracle RDF model as a dataset:

- Right-click the RDF model and select Publish from the menu as shown:

Figure 11-36 Publish Menu

- Enter the Dataset name (mandatory), Description, and Default SPARQL. The default SPARQL can be overridden in the REST request.

Figure 11-37 Publish RDF Model

- Click OK.

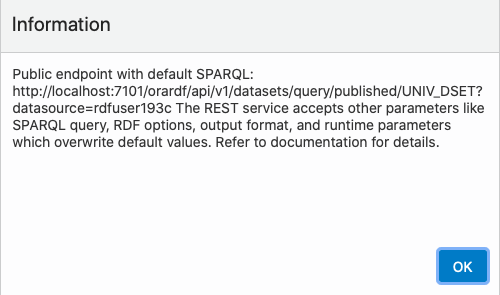

The public endpoint GET URL for the dataset is displayed:

Figure 11-38 GET URL Endpoint

This URL uses the default values defined for the dataset and follows this pattern:

http://${hostname}:${port_number}/orardf/api/v1/datasets/query/published/${dataset_name}?datasource=${datasource_name}

You can override the default parameters stored in the dataset by modifying the URL to include one or more of the following parameters:

- query: SPARQL query

- format: output format (json, xml, csv, tsv, n-triples)

- options: string with Oracle RDF options

- params: JSON string with runtime parameters (timeout, fetchSize, and others)
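As a minimal sketch, a client could build such a URL in Python with the standard urllib.parse module, percent-encoding the SPARQL text so that characters such as ?, {, and spaces survive in the query string. The base URL (including the orardf context root), the dataset and data source names, and the function name are illustrative assumptions.

```python
from typing import Optional
from urllib.parse import quote, urlencode

# The overridable parameters listed above.
OVERRIDABLE = ("query", "format", "options", "params")


def published_dataset_url(base: str, dataset: str, datasource: str,
                          **overrides: Optional[str]) -> str:
    """Build a GET URL for a published dataset (hypothetical helper).

    base is the application root including the context root, for example
    "http://localhost:8080/orardf". Overrides left out (or None) keep the
    defaults stored with the dataset.
    """
    unknown = set(overrides) - set(OVERRIDABLE)
    if unknown:
        raise ValueError(f"unsupported parameters: {sorted(unknown)}")
    params = {"datasource": datasource}
    for name in OVERRIDABLE:
        if overrides.get(name) is not None:
            params[name] = overrides[name]
    path = f"{base.rstrip('/')}/api/v1/datasets/query/published/{quote(dataset, safe='')}"
    # quote_via=quote encodes spaces as %20 instead of "+".
    return f"{path}?{urlencode(params, quote_via=quote)}"
```

For example, published_dataset_url("http://localhost:8080/orardf", "movies", "rdfuser193c", query="SELECT * WHERE { ?s ?p ?o } LIMIT 10", format="json") overrides the query and output format while keeping the dataset's default options and params. The host, port, and dataset name movies are placeholders.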

The following shows the general pattern of the REST request to query published datasets (assuming the context root as

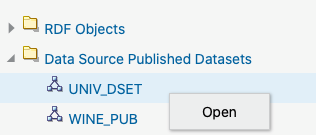

orardf):http://${hostname}:${port_number}/orardf/api/v1/datasets/query/published/${dataset_name}?datasource=${datasource_name}&query=${sparql}&format=${format}&options=${rdf_options}¶ms=${runtime_params}In order to modify the default parameters, you must open the RDF dataset definition by selecting Open from the menu options shown in the following figure or by double clicking the published dataset:Figure 11-39 Open an RDF Dataset Definition

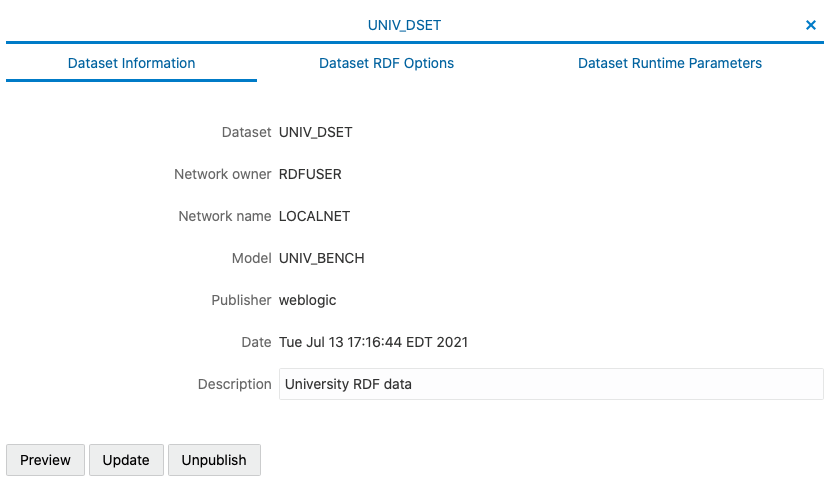

The RDF dataset definition for the selected published dataset opens as shown:

The RDF dataset definition for the selected published dataset opens as shown:Figure 11-40 RDF Dataset Definition

You can update the default parameters and preview the results.

Note:

- An RDF user with administrator privileges can update and unpublish any dataset.

- An RDF user with read and write privileges can manage only the datasets that the user created.

- An RDF user with read privileges can only query datasets.


11.3.3.3 Configuration Files for RDF Server and Client

The Graph Query UI application settings are determined by the JSON files that are included in the RDF Server and Client installation.

- datasources.json: data source information (general and access properties).

- general.json: general configuration parameters.

- proxy.json: proxy server parameters.

- logging.json: logging settings.

On the server side, the directory WEB-INF/workspace is the default directory to store configuration information, logs, and temporary files. The configuration files are stored by default in WEB-INF/workspace/config.

Note:

If the RDF Graph Query application is deployed from an unexploded .war file, and no JVM parameter is defined for the workspace folder location, then the default workspace location for the application is WEB-INF/workspace. However, any updates to the configuration, log, and temp files made by the application may be lost if the application is redeployed. Wallet data source files and published dataset files can also be lost.

To overcome this, start the application server, such as WebLogic or Tomcat, with the JVM parameter oracle.rdf.workspace.dir set. For example: -Doracle.rdf.workspace.dir=/rdf/server/workspace. The workspace folder must exist on the file system; otherwise, the workspace folder defaults to WEB-INF/workspace.

It is recommended to back up the workspace folder in case the application is redeployed to a different location. Copying the workspace folder contents to the location set by the JVM parameter restores all configurations in the new deployment.
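The folder-selection rule and the backup recommendation can be summarized in a short Python sketch. It only mirrors the behavior described in the note above; it is not the application's code, and the function names and paths are hypothetical.

```python
import shutil
from pathlib import Path
from typing import Optional


def resolve_workspace(webapp_root: Path, jvm_workspace_dir: Optional[str]) -> Path:
    """Documented rule: use the oracle.rdf.workspace.dir value when it is set
    and the folder exists; otherwise fall back to WEB-INF/workspace."""
    if jvm_workspace_dir:
        candidate = Path(jvm_workspace_dir)
        if candidate.is_dir():
            return candidate
    return webapp_root / "WEB-INF" / "workspace"


def backup_workspace(workspace: Path, backup_dir: Path) -> Path:
    """Copy everything under the workspace (configuration, logs, and any other
    files stored there) to backup_dir, which must not exist yet."""
    shutil.copytree(workspace, backup_dir)
    return backup_dir
```

For example, resolve_workspace(Path("/deploy/orardf"), "/rdf/server/workspace") returns /rdf/server/workspace only if that folder already exists, which is why the folder must be created before the application server starts.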

- Data Sources JSON Configuration File

- General JSON configuration file

- Proxy JSON Configuration File

- Logging JSON Configuration File


11.3.3.3.1 Data Sources JSON Configuration File

The JSON file for data sources stores the general attributes of each data source, including the specific properties associated with it.

The following example shows a data source JSON file with two data sources: one an Oracle container data source defined on the application server, and the other an external data source.

```json
{
  "datasources" : [
    {
      "name" : "rdfuser193c",
      "type" : "DATABASE",
      "description" : "19.3 Oracle database",
      "properties" : {
        "jndiName" : "jdbc/RDFUSER193c"
      }
    },
    {
      "name" : "dbpedia",
      "type" : "ENDPOINT",
      "description" : "Dbpedia RDF data - Dbpedia.org",
      "properties" : {
        "baseUrl" : "http://dbpedia.org/sparql",
        "provider" : "Dbpedia"
      }
    }
  ]
}
```
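A script that inspects datasources.json might look like the following Python sketch. The required-property table (jndiName for DATABASE, baseUrl for ENDPOINT) is inferred from the example above and is an assumption, not a documented schema; load_datasources is a hypothetical name.

```python
import json
from typing import Dict, List

# Assumed minimum properties per data source type, inferred from the example.
REQUIRED_PROPERTIES = {"DATABASE": ["jndiName"], "ENDPOINT": ["baseUrl"]}


def load_datasources(text: str) -> Dict[str, dict]:
    """Parse datasources.json content and index the data sources by name."""
    entries: List[dict] = json.loads(text)["datasources"]
    by_name: Dict[str, dict] = {}
    for ds in entries:
        name, ds_type = ds["name"], ds["type"]
        if name in by_name:
            raise ValueError(f"duplicate data source name: {name}")
        props = ds.get("properties", {})
        missing = [p for p in REQUIRED_PROPERTIES.get(ds_type, []) if p not in props]
        if missing:
            raise ValueError(f"{name}: missing properties {missing}")
        by_name[name] = ds
    return by_name
```

Applied to the example file, this returns two entries, rdfuser193c (a DATABASE source looked up through its JNDI name) and dbpedia (an ENDPOINT source reached through its base URL).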

11.3.3.3.2 General JSON configuration file

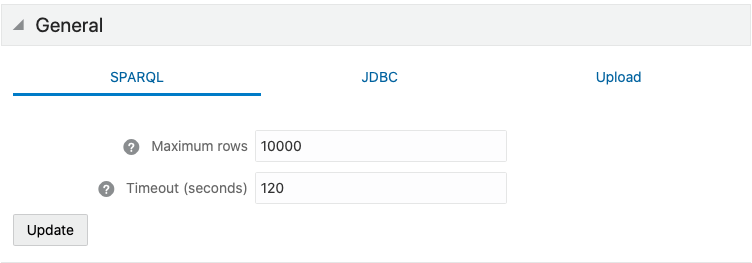

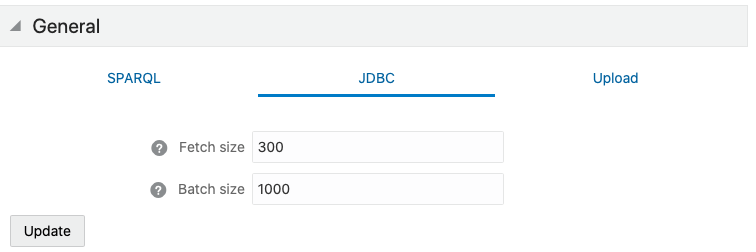

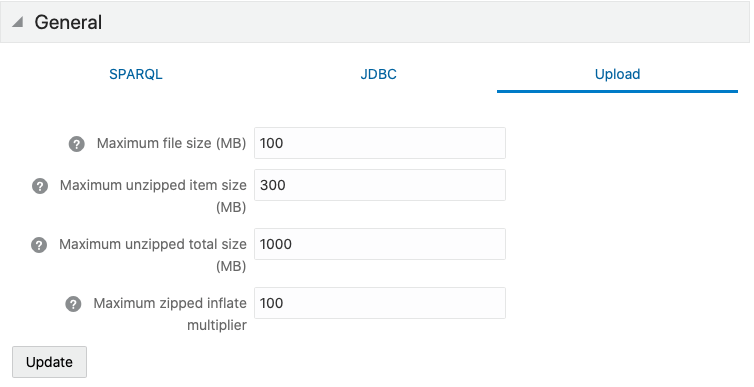

The general JSON configuration file stores information related to SPARQL queries, JDBC parameters, and file upload parameters.

The JSON file includes the following parameters:

- Maximum SPARQL rows: defines the limit of rows to be fetched for a SPARQL query. If a query returns more than this limit, the fetching process is stopped.

- SPARQL Query Timeout: defines the time in seconds to wait for a query to complete.

- JDBC Fetch size: the fetch size parameter for JDBC queries.

- JDBC Batch size: the batch size for JDBC updates.

- Maximum file size to upload: the maximum size of a file that can be uploaded to the server.

- Maximum unzipped item size: the maximum size for an item in a zip file.

- Maximum unzipped total size: the size limit for all entries in a zip file.

- Maximum zip inflate multiplier: maximum allowed multiplier when inflating files.

You can update these parameters as shown in the following figures:

Figure 11-41 General SPARQL Parameters

Figure 11-42 General JDBC Parameters

Figure 11-43 General File Upload Parameters
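The three unzip limits are typical guards against oversized or highly compressed ("zip bomb") uploads. The following Python sketch shows one way such checks can be applied before extraction. The parameter names are hypothetical, and treating the inflate multiplier as the ratio of uncompressed to compressed size is an assumption about its meaning.

```python
import io
import zipfile


def check_zip_limits(data: bytes, max_item_size: int, max_total_size: int,
                     max_inflate_multiplier: float) -> None:
    """Reject a zip upload that exceeds the configured unzip limits.

    Uses the uncompressed sizes recorded in the zip directory, so nothing
    is extracted. Raises ValueError on the first violation.
    """
    total = 0
    with zipfile.ZipFile(io.BytesIO(data)) as zf:
        for info in zf.infolist():
            if info.file_size > max_item_size:
                raise ValueError(f"{info.filename}: item too large ({info.file_size} bytes)")
            # Assumed meaning of the multiplier: uncompressed/compressed size ratio.
            if info.compress_size and info.file_size / info.compress_size > max_inflate_multiplier:
                raise ValueError(f"{info.filename}: inflate ratio exceeds {max_inflate_multiplier}")
            total += info.file_size
            if total > max_total_size:
                raise ValueError(f"total unzipped size exceeds {max_total_size} bytes")
```

Because these sizes come from the zip directory, which the uploader controls, a production server would also need to count bytes while actually extracting.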


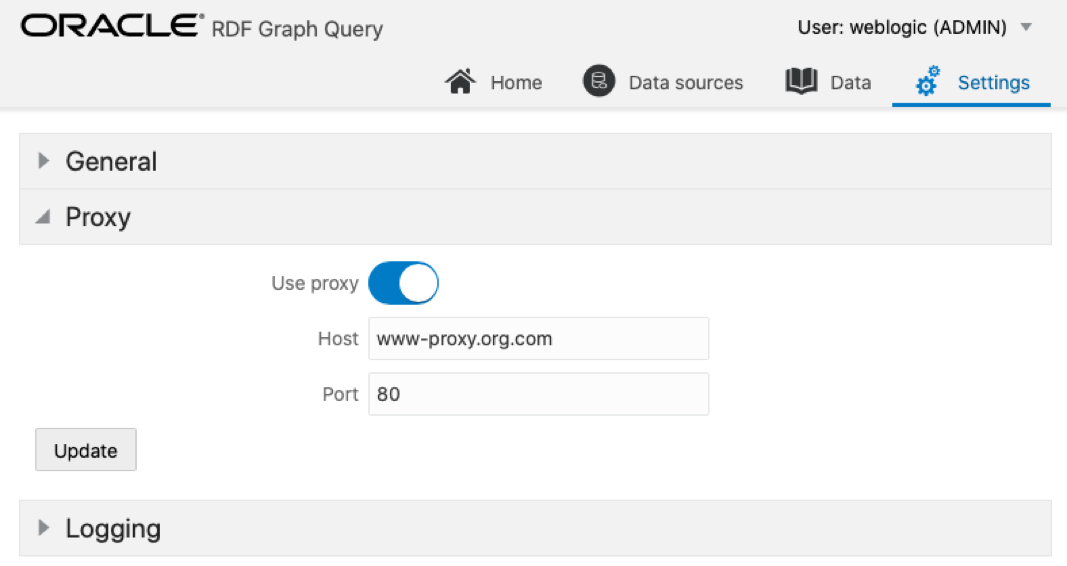

11.3.3.3.3 Proxy JSON Configuration File

The Proxy JSON configuration file contains proxy information for your enterprise network.

Figure 11-44 Proxy JSON Configuration File

The file includes the following parameters:

- Use proxy: flag that determines whether the proxy parameters are used.

- Host: proxy host value.

- Port: proxy port value.
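For a Python client running behind the same enterprise proxy, the equivalent settings map onto urllib.request.ProxyHandler. In the sketch below, the JSON keys useProxy, host, and port are hypothetical stand-ins for the Use proxy, Host, and Port parameters; the actual key names in proxy.json may differ.

```python
import json
import urllib.request


def opener_from_proxy_config(text: str) -> urllib.request.OpenerDirector:
    """Build a urllib opener from proxy settings.

    Hypothetical keys: useProxy (bool), host (str), port (int or str).
    """
    cfg = json.loads(text)
    if not cfg.get("useProxy"):
        # An empty mapping disables proxies, including any set in the environment.
        return urllib.request.build_opener(urllib.request.ProxyHandler({}))
    proxy = f"http://{cfg['host']}:{int(cfg['port'])}"
    return urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy, "https": proxy}))
```

The returned opener's open() method can then be used to send requests, such as SPARQL queries to an external endpoint, through the configured proxy.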


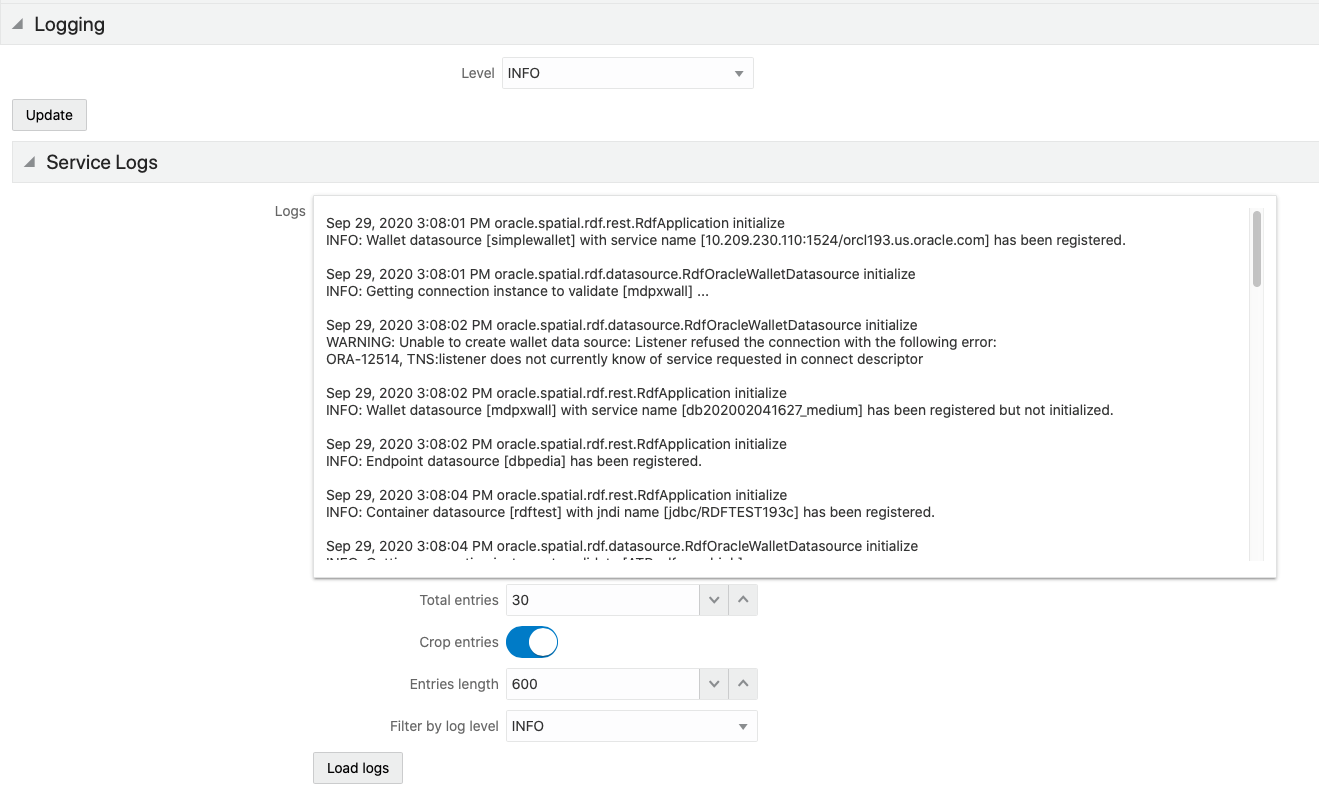

11.3.3.3.4 Logging JSON Configuration File

The Logging JSON configuration file contains the logging settings. You can specify the logging level.

Administrators and RDF users can also load the logs for further analysis.

Figure 11-45 Logging JSON Configuration File


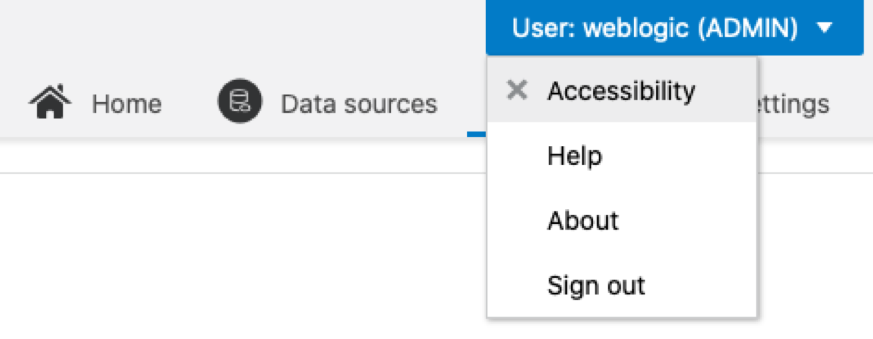

11.3.4 Accessibility

You can turn accessibility on or off during the user session.

Figure 11-46 Disabled Accessibility

Figure 11-47 Enabled Accessibility

When accessibility is turned on, the graph view of SPARQL queries is disabled.

Figure 11-48 Disabled Graph View
