2 Using Property Graphs in an Oracle Database Environment

This chapter provides conceptual and usage information about creating, storing, and working with property graph data in an Oracle Database environment.

- About Property Graphs

Property graphs allow an easy association of properties (key-value pairs) with graph vertices and edges, and they enable analytical operations based on relationships across a massive set of data.

- About Property Graph Data Formats

Several graph formats are supported for property graph data.

- Property Graph Schema Objects for Oracle Database

The property graph PL/SQL and Java APIs use special Oracle Database schema objects.

- Getting Started with Property Graphs

Follow these steps to get started with property graphs.

- Using Java APIs for Property Graph Data

Creating a property graph involves using the Java APIs to create the property graph and objects in it.

- Managing Text Indexing for Property Graph Data

Indexes in Oracle Spatial and Graph property graph support allow fast retrieval of elements by a particular key/value or key/text pair. These indexes are created based on an element type (vertices or edges), a set of keys (and values), and an index type.

- Access Control for Property Graph Data (Graph-Level and OLS)

The property graph feature in Oracle Spatial and Graph supports two access control and security models: graph level access control, and fine-grained security through integration with Oracle Label Security (OLS).

- Using the Groovy Shell with Property Graph Data

The Oracle Spatial and Graph property graph support includes a built-in Groovy shell (based on the original Gremlin Groovy shell script). With this command-line shell interface, you can explore the Java APIs.

- Using the In-Memory Analyst Zeppelin Interpreter with Oracle Database

The in-memory analyst provides an interpreter implementation for Apache Zeppelin. This tutorial topic explains how to install the in-memory analyst interpreter into your local Zeppelin installation and to perform some simple operations.

- REST Support for Oracle Database Property Graph Data

A set of RESTful APIs exposes the Data Access Layer Java APIs through HTTP/REST protocols.

- Creating Property Graph Views on an RDF Graph

With Oracle Spatial and Graph, you can view RDF data as a property graph to execute graph analytics operations by creating property graph views over an RDF graph stored in Oracle Database.

- Handling Property Graphs Using a Two-Tables Schema

For property graphs with relatively fixed, simple data structures, where you do not need the flexibility of <graph_name>VT$ and <graph_name>GE$ key/value data tables for vertices and edges, you can use a two-tables schema to achieve better run-time performance.

- Oracle Flat File Format Definition

A property graph can be defined in two flat files, specifically description files for the vertices and edges.

- Example Python User Interface

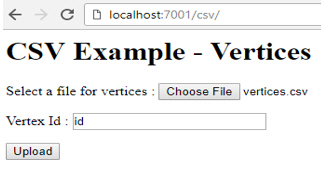

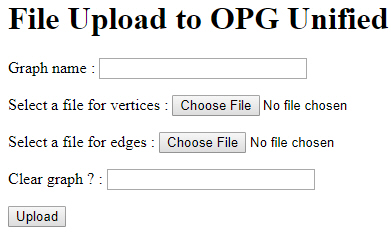

The example Python scripts in $ORACLE_HOME/md/property_graph/pyopg/ can be used with Oracle Spatial and Graph Property Graph, and you may want to change and enhance them (or copies of them) to suit your needs.

2.1 About Property Graphs

Property graphs allow an easy association of properties (key-value pairs) with graph vertices and edges, and they enable analytical operations based on relationships across a massive set of data.


2.1.1 What Are Property Graphs?

A property graph consists of a set of objects or vertices, and a set of arrows or edges connecting the objects. Vertices and edges can have multiple properties, which are represented as key-value pairs.

Each vertex has a unique identifier and can have:

- A set of outgoing edges

- A set of incoming edges

- A collection of properties

Each edge has a unique identifier and can have:

- An outgoing vertex

- An incoming vertex

- A text label that describes the relationship between the two vertices

- A collection of properties

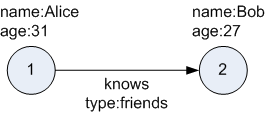

The following figure illustrates a very simple property graph with two vertices and one edge. The two vertices have identifiers 1 and 2. Both vertices have properties name and age. The edge is from the outgoing vertex 1 to the incoming vertex 2. The edge has a text label knows and a property type identifying the type of relationship between vertices 1 and 2.
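The structure described above can be sketched as a small in-memory model. This is only an illustration of the data model, not the Oracle API: the class and method names (`SimplePropertyGraphSketch`, `Vertex`, `Edge`, `buildExample`) are hypothetical, and the property values and the edge ID 3 are borrowed from the data format examples later in this chapter.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrative in-memory model only; not part of the Oracle property graph APIs. */
class SimplePropertyGraphSketch {

    /** A vertex: a unique identifier plus a collection of key-value properties. */
    static final class Vertex {
        final long id;
        final Map<String, Object> properties = new LinkedHashMap<>();

        Vertex(long id) {
            this.id = id;
        }
    }

    /** An edge: a unique identifier, an outgoing and an incoming vertex, a label, and properties. */
    static final class Edge {
        final long id;
        final Vertex outVertex;
        final Vertex inVertex;
        final String label;
        final Map<String, Object> properties = new LinkedHashMap<>();

        Edge(long id, Vertex outVertex, Vertex inVertex, String label) {
            this.id = id;
            this.outVertex = outVertex;
            this.inVertex = inVertex;
            this.label = label;
        }
    }

    /** Builds the two-vertex, one-edge graph described above and returns its single edge. */
    static Edge buildExample() {
        Vertex v1 = new Vertex(1);
        v1.properties.put("name", "Alice");
        v1.properties.put("age", 31);

        Vertex v2 = new Vertex(2);
        v2.properties.put("name", "Bob");
        v2.properties.put("age", 27);

        Edge knows = new Edge(3, v1, v2, "knows");
        knows.properties.put("type", "friends");
        return knows;
    }

    public static void main(String[] args) {
        Edge e = buildExample();
        System.out.println(e.outVertex.properties.get("name")
                + " -[" + e.label + "]-> "
                + e.inVertex.properties.get("name"));
    }
}
```

In the actual product, vertices and edges are created through the data access layer Java APIs rather than through hand-written classes like these.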

Standards are not available for the Big Data Spatial and Graph property graph data model, but it is similar to the W3C standards-based Resource Description Framework (RDF) graph data model. The property graph data model is simpler and much less precise than RDF. These differences make it a good candidate for use cases such as these:

- Identifying influencers in a social network

- Predicting trends and customer behavior

- Discovering relationships based on pattern matching

- Identifying clusters to customize campaigns

Note:

The property graph data model that Oracle supports at the database side does not allow labels for vertices. However, you can treat the value of a designated vertex property as one or more labels.


2.1.2 What Is Oracle Database Support for Property Graphs?

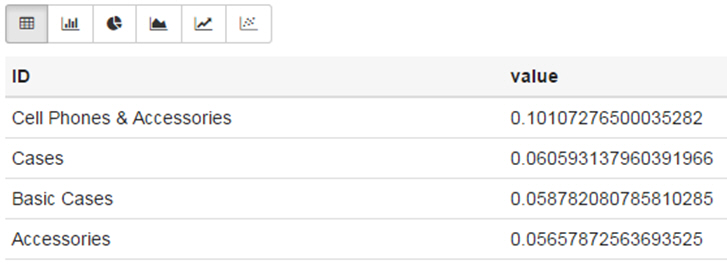

Property graphs are supported in Oracle Database, in addition to being supported for Big Data in Hadoop. This support consists of a set of PL/SQL packages, a data access layer, and an analytics layer.

The following figure provides an overview of the Oracle property graph architecture.

Figure 2-2 Oracle Property Graph Architecture


2.1.2.1 In-Memory Analyst

The in-memory analyst layer enables you to analyze property graphs using parallel in-memory execution. It provides over 35 analytic functions, including path calculation, ranking, community detection, and recommendations.


2.1.2.2 Data Access Layer

The data access layer provides a set of Java APIs that you can use to create and drop property graphs, add and remove vertices and edges, search for vertices and edges using key-value pairs, create text indexes, and perform other manipulations. The Java APIs include an implementation of TinkerPop Blueprints graph interfaces for the property graph data model. The Java and PL/SQL APIs also integrate with Apache Lucene and Apache SolrCloud, which are widely adopted open-source text indexing and search engines. (Apache SolrCloud must be separately downloaded and configured, as explained in Property Graph Prerequisites.)

For more information, see:

- Property Graph Schema Objects for Oracle Database (PL/SQL and Java APIs) and OPG_APIS Package Subprograms (PL/SQL API).


2.1.2.3 Storage Management

Property graphs are stored in Oracle Database. Tables are used internally to model the vertices and edges of property graphs.


2.2 About Property Graph Data Formats

Several graph formats are supported for property graph data.


2.2.1 GraphML Data Format

The GraphML file format uses XML to describe graphs. The example in this topic shows a GraphML description of the property graph shown in What Are Property Graphs?.

Example 2-1 GraphML Description of a Simple Property Graph

<?xml version="1.0" encoding="UTF-8"?>

<graphml xmlns="http://graphml.graphdrawing.org/xmlns">

<key id="name" for="node" attr.name="name" attr.type="string"/>

<key id="age" for="node" attr.name="age" attr.type="int"/>

<key id="type" for="edge" attr.name="type" attr.type="string"/>

<graph id="PG" edgedefault="directed">

<node id="1">

<data key="name">Alice</data>

<data key="age">31</data>

</node>

<node id="2">

<data key="name">Bob</data>

<data key="age">27</data>

</node>

<edge id="3" source="1" target="2" label="knows">

<data key="type">friends</data>

</edge>

</graph>

</graphml>


2.2.2 GraphSON Data Format

The GraphSON file format is based on JavaScript Object Notation (JSON) for describing graphs. The example in this topic shows a GraphSON description of the property graph shown in What Are Property Graphs?.

Example 2-2 GraphSON Description of a Simple Property Graph

{

"graph": {

"mode":"NORMAL",

"vertices": [

{

"name": "Alice",

"age": 31,

"_id": "1",

"_type": "vertex"

},

{

"name": "Bob",

"age": 27,

"_id": "2",

"_type": "vertex"

}

],

"edges": [

{

"type": "friends",

"_id": "3",

"_type": "edge",

"_outV": "1",

"_inV": "2",

"_label": "knows"

}

]

}

}

2.2.3 GML Data Format

The Graph Modeling Language (GML) file format uses ASCII to describe graphs. The example in this topic shows a GML description of the property graph shown in What Are Property Graphs?.

Example 2-3 GML Description of a Simple Property Graph

graph [

comment "Simple property graph"

directed 1

IsPlanar 1

node [

id 1

label "1"

name "Alice"

age 31

]

node [

id 2

label "2"

name "Bob"

age 27

]

edge [

source 1

target 2

label "knows"

type "friends"

]

]

2.2.4 Oracle Flat File Format

The Oracle flat file format exclusively describes property graphs. It is more concise and provides better data type support than the other file formats. The Oracle flat file format uses two files for a graph description, one for the vertices and one for edges. Commas separate the fields of the records.

Example 2-4 Oracle Flat File Description of a Simple Property Graph

The following shows the Oracle flat files that describe the simple property graph example shown in What Are Property Graphs?.

Vertex file:

1,name,1,Alice,,

1,age,2,,31,

2,name,1,Bob,,

2,age,2,,27,

Edge file:

1,1,2,knows,type,1,friends,,
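To make the record layout concrete, here is a minimal parsing sketch. The field positions are an assumption read off the example above: a vertex record has six fields (vertex ID, key, value type ID, then three value slots of which one is filled), and an edge record has nine (edge ID, source vertex ID, destination vertex ID, label, key, value type ID, then three value slots). The class `FlatFileRecordSketch` and its methods are hypothetical helpers, not part of the Oracle APIs, and the sketch does not handle any escaping of commas inside values; see Oracle Flat File Format Definition for the authoritative rules.

```java
/** Illustrative parser for the sample records above; not the Oracle loader. */
class FlatFileRecordSketch {

    /** Splits one comma-separated record, keeping trailing empty fields. */
    static String[] fields(String record) {
        return record.split(",", -1);
    }

    /** Returns the first non-empty value slot in the given index range, or null if all are empty. */
    private static String firstFilled(String[] f, int from, int to) {
        for (int i = from; i <= to; i++) {
            if (!f[i].isEmpty()) {
                return f[i];
            }
        }
        return null;
    }

    /** Extracts the property value from a vertex record (assumed six fields). */
    static String vertexValue(String record) {
        String[] f = fields(record);
        if (f.length != 6) {
            throw new IllegalArgumentException("Expected 6 fields, found " + f.length);
        }
        return firstFilled(f, 3, 5);
    }

    /** Extracts the property value from an edge record (assumed nine fields). */
    static String edgeValue(String record) {
        String[] f = fields(record);
        if (f.length != 9) {
            throw new IllegalArgumentException("Expected 9 fields, found " + f.length);
        }
        return firstFilled(f, 6, 8);
    }

    public static void main(String[] args) {
        System.out.println(vertexValue("1,name,1,Alice,,"));
        System.out.println(vertexValue("1,age,2,,31,"));
        System.out.println(edgeValue("1,1,2,knows,type,1,friends,,"));
    }
}
```

Passing `-1` as the limit to `String.split` matters here: without it, Java drops the trailing empty fields and the field count no longer matches the record layout.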


2.3 Property Graph Schema Objects for Oracle Database

The property graph PL/SQL and Java APIs use special Oracle Database schema objects.

This topic describes objects related to the property graph schema approach to working with graph data. It is a more flexible approach than the two-tables schema approach described in Handling Property Graphs Using a Two-Tables Schema, which has limitations.

Oracle Spatial and Graph lets you store, query, and manipulate property graph data in Oracle Database. For example, to create a property graph named myGraph, you can use either the Java APIs (oracle.pg.rdbms.OraclePropertyGraph) or the PL/SQL APIs (MDSYS.OPG_APIS package).

Note:

An Oracle Partitioning license is required if you use the property graph schema. For performance and scalability, both the VT$ and GE$ tables are hash partitioned based on IDs, and the number of partitions is customizable. The number of partitions should be a power of 2 (2, 4, 8, 16, and so on). The partitions are named sequentially starting from "p1", so for a property graph created with 8 partitions, the set of partitions will be "p1", "p2", ..., "p8".

With the PL/SQL API:

BEGIN

opg_apis.create_pg(

'myGraph',

dop => 4, -- degree of parallelism

num_hash_ptns => 8, -- number of hash partitions used to store the graph

tbs => 'USERS', -- tablespace

options => 'COMPRESS=T'

);

END;

/

With the Java API:

PgRdbmsGraphConfig cfg = GraphConfigBuilder

.forPropertyGraphRdbms()

.setJdbcUrl("jdbc:oracle:thin:@127.0.0.1:1521:orcl")

.setUsername("<your_user_name>")

.setPassword("<your_password>")

.setName("myGraph")

.setMaxNumConnections(8)

.setLoadEdgeLabel(false)

.build();

OraclePropertyGraph opg = OraclePropertyGraph.getInstance(cfg);

After the property graph myGraph is established in the database, several tables are created automatically in the user's schema, with the graph name as the prefix and VT$ or GE$ as the suffix. For example, for a graph named myGraph, table myGraphVT$ is created to store vertices and their properties (K/V pairs), and table myGraphGE$ is created to store edges and their properties.

For simplicity, only simple graph names are allowed, and they are case insensitive.

Additional internal tables are created with SS$, IT$, and GT$ suffixes, to store graph snapshots, text index metadata, and graph skeleton (topological structure), respectively.

The definitions of tables myGraphVT$ and myGraphGE$ are as follows. They are important for SQL-based analytics and SQL-based property graph query. In both the VT$ and GE$ tables, VTS, VTE, and FE are reserved columns; column SL is for the security label; and columns K, T, V, VN, and VT together store all information about a property (K/V pair) of a graph element. In the VT$ table, VID is a long integer for storing the vertex ID. In the GE$ table, EID, SVID, and DVID are long integer columns for storing edge ID, source (from) vertex ID, and destination (to) vertex ID, respectively.

SQL> describe myGraphVT$

Name Null? Type

----------------------------------------- -------- ----------------------------

VID NOT NULL NUMBER

K NVARCHAR2(3100)

T NUMBER(38)

V NVARCHAR2(15000)

VN NUMBER

VT TIMESTAMP(6) WITH TIME ZONE

SL NUMBER

VTS DATE

VTE DATE

FE NVARCHAR2(4000)

SQL> describe myGraphGE$

Name Null? Type

----------------------------------------- -------- ----------------------------

EID NOT NULL NUMBER

SVID NOT NULL NUMBER

DVID NOT NULL NUMBER

EL NVARCHAR2(3100)

K NVARCHAR2(3100)

T NUMBER(38)

V NVARCHAR2(15000)

VN NUMBER

VT TIMESTAMP(6) WITH TIME ZONE

SL NUMBER

VTS DATE

VTE DATE

FE NVARCHAR2(4000)

In the property graph schema design, a property value is stored in the VN column if the value has numeric data type (long, int, double, float, and so on), in the VT column if the value is a timestamp, or in the V column for Strings, boolean and other serializable data types. For better Oracle Text query support, a literal representation of the property value is saved in the V column even if the data type is numeric or timestamp. To differentiate all the supported data types, an integer ID is saved in the T column. (The possible T column integer ID values are those listed for the value_type field in the table in Vertex File.)

The K column in both VT$ and GE$ tables stores the property key. Each edge must have a label of String type, and the labels are stored in the EL column of the GE$ table.

The T column in both VT$ and GE$ tables is a number representing the data type of the value of the property it describes. For example, 1 means the value is a string, 2 means the value is an integer, and so on. Some T column possible values and associated data types are as follows:

- 1: STRING

- 2: INTEGER

- 3: FLOAT

- 4: DOUBLE

- 5: DATE

- 6: BOOLEAN

- 7: LONG

- 8: SHORT

- 9: BYTE

- 10: CHAR

- 20: Spatial data (see Representing Spatial Data in a Property Graph)
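The relationship between the T column value and the column that holds the typed value can be sketched as a small lookup. This is a hypothetical helper (`ValueTypeColumnSketch` is not an Oracle class) that follows the rules stated in this topic: numeric values go in VN, timestamps in VT, and strings, booleans, and characters in V. It covers only type IDs 1 through 10 and deliberately rejects anything else, including spatial data (20).

```java
/** Illustrative lookup only; not part of the Oracle property graph APIs. */
class ValueTypeColumnSketch {

    /** Returns the data type name for a T column value from 1 through 10. */
    static String typeName(int t) {
        switch (t) {
            case 1: return "STRING";
            case 2: return "INTEGER";
            case 3: return "FLOAT";
            case 4: return "DOUBLE";
            case 5: return "DATE";
            case 6: return "BOOLEAN";
            case 7: return "LONG";
            case 8: return "SHORT";
            case 9: return "BYTE";
            case 10: return "CHAR";
            default:
                throw new IllegalArgumentException("Type ID not covered by this sketch: " + t);
        }
    }

    /** Returns the column (V, VN, or VT) that holds the typed value for a T column value. */
    static String storageColumn(int t) {
        switch (t) {
            case 2: case 3: case 4: case 7: case 8: case 9:
                return "VN"; // numeric types
            case 5:
                return "VT"; // timestamp values
            case 1: case 6: case 10:
                return "V";  // string, boolean, and character values
            default:
                throw new IllegalArgumentException("Type ID not covered by this sketch: " + t);
        }
    }

    public static void main(String[] args) {
        for (int t = 1; t <= 10; t++) {
            System.out.println(t + " " + typeName(t) + " -> " + storageColumn(t));
        }
    }
}
```

Keep in mind that, as described earlier in this topic, a literal representation of numeric and timestamp values is also saved in the V column for Oracle Text query support; the sketch reports only the column holding the typed value.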

To support international characters, NVARCHAR columns are used in VT$ and GE$ tables. Oracle highly recommends UTF8 as the default database character set. In addition, the V column has a size of 15000, which requires the enabling of 32K VARCHAR (MAX_STRING_SIZE = EXTENDED).

Example 2-5 Inserting Rows into a VT$ Table

This example inserts rows into a table named CONNECTIONSVT$. It includes T column values 1 through 10 (representing various data types).

INSERT INTO connectionsvt$(vid,k,t,v,vn,vt) VALUES (2001, '1-STRING', 1, 'Some String', NULL, NULL);

INSERT INTO connectionsvt$(vid,k,t,v,vn,vt) VALUES (2001, '2-INTEGER', 2, NULL, 21, NULL);

INSERT INTO connectionsvt$(vid,k,t,v,vn,vt) VALUES (2001, '3-FLOAT', 3, NULL, 21.5, NULL);

INSERT INTO connectionsvt$(vid,k,t,v,vn,vt) VALUES (2001, '4-DOUBLE', 4, NULL, 21.5, NULL);

INSERT INTO connectionsvt$(vid,k,t,v,vn,vt) VALUES (2001, '5-DATE', 5, NULL, NULL, timestamp'2018-07-20 15:32:53.991000');

INSERT INTO connectionsvt$(vid,k,t,v,vn,vt) VALUES (2001, '6-BOOLEAN', 6, 'Y', NULL, NULL);

INSERT INTO connectionsvt$(vid,k,t,v,vn,vt) VALUES (2001, '7-LONG', 7, NULL, 42, NULL);

INSERT INTO connectionsvt$(vid,k,t,v,vn,vt) VALUES (2001, '8-SHORT', 8, NULL, 10, NULL);

INSERT INTO connectionsvt$(vid,k,t,v,vn,vt) VALUES (2001, '9-BYTE', 9, NULL, 10, NULL);

INSERT INTO connectionsvt$(vid,k,t,v,vn,vt) VALUES (2001, '10-CHAR', 10, 'A', NULL, NULL);

...

UPDATE connectionsVT$ SET V = coalesce(v,to_nchar(vn),to_nchar(vt)) WHERE vid=2001;

COMMIT;


2.3.1 Default Indexes on Vertex (VT$) and Edge (GE$) Tables

For query performance, several indexes on property graph tables are created by default. The index names follow the same convention as the table names, including using the graph name as the prefix. For example, for the property graph myGraph, the following local indexes are created:

- A unique index myGraphXQV$ on myGraphVT$(VID, K)

- A unique index myGraphXQE$ on myGraphGE$(EID, K)

- An index myGraphXSE$ on myGraphGE$(SVID, DVID, EID, VN)

- An index myGraphXDE$ on myGraphGE$(DVID, SVID, EID, VN)
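The naming convention for these objects is simple enough to express as string concatenation. The following sketch derives the table and default index names for a graph from the suffixes given in this chapter; `GraphObjectNamesSketch` is a hypothetical helper, and it makes no claim about how the database normalizes the case of the graph name.

```java
import java.util.Arrays;
import java.util.List;

/** Illustrative name builder only; not part of the Oracle property graph APIs. */
class GraphObjectNamesSketch {

    /** Name of the vertex table for the given graph. */
    static String vertexTable(String graphName) {
        return graphName + "VT$";
    }

    /** Name of the edge table for the given graph. */
    static String edgeTable(String graphName) {
        return graphName + "GE$";
    }

    /** Names of the four default indexes, in the order listed above. */
    static List<String> defaultIndexes(String graphName) {
        return Arrays.asList(
                graphName + "XQV$",  // unique, on the vertex table (VID, K)
                graphName + "XQE$",  // unique, on the edge table (EID, K)
                graphName + "XSE$",  // on the edge table (SVID, DVID, EID, VN)
                graphName + "XDE$"); // on the edge table (DVID, SVID, EID, VN)
    }

    public static void main(String[] args) {
        System.out.println(vertexTable("myGraph"));
        System.out.println(edgeTable("myGraph"));
        System.out.println(defaultIndexes("myGraph"));
    }
}
```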


2.3.2 Flexibility in the Property Graph Schema

The property graph schema design does not use a catalog or centralized repository of any kind. Each property graph is separately stored and managed in a schema of the user's choice. A user's schema may have one or more property graphs.

This design provides considerable flexibility to users. For example:

- Additional indexes can be added on demand.

- Different property graphs can have a different set of indexes or compression options for the base tables.

- Different property graphs can have different numbers of hash partitions.

- You can even drop the XSE$ or XDE$ index for a property graph; however, for integrity you should keep the unique constraints.


2.4 Getting Started with Property Graphs

Follow these steps to get started with property graphs.

- The first time you use property graphs, ensure that the software is installed and operational.

- Create your Java programs, using the classes provided in the Java API.


2.5 Using Java APIs for Property Graph Data

Creating a property graph involves using the Java APIs to create the property graph and objects in it.

- Overview of the Java APIs

- Parallel Loading of Graph Data

- Parallel Retrieval of Graph Data

- Using an Element Filter Callback for Subgraph Extraction

- Using Optimization Flags on Reads over Property Graph Data

- Adding and Removing Attributes of a Property Graph Subgraph

- Getting Property Graph Metadata

- Merging New Data into an Existing Property Graph

- Opening and Closing a Property Graph Instance

- Creating Vertices

- Creating Edges

- Deleting Vertices and Edges

- Reading a Graph from a Database into an Embedded In-Memory Analyst

- Specifying Labels for Vertices

- Building an In-Memory Graph

- Dropping a Property Graph

- Executing PGQL Queries


2.5.1 Overview of the Java APIs

The Java APIs that you can use for property graphs include the following:

- Oracle Spatial and Graph Property Graph Java APIs

- TinkerPop Java APIs

- Oracle Database Property Graph Java APIs


2.5.1.1 Oracle Spatial and Graph Property Graph Java APIs

Oracle Spatial and Graph property graph support provides database-specific APIs for Oracle Database. The data access layer API (oracle.pg.*) implements TinkerPop Blueprints APIs, text search, and indexing for property graphs stored in Oracle Database.

To use the Oracle Spatial and Graph API, import the classes into your Java program:

import oracle.pg.common.*;

import oracle.pg.text.*;

import oracle.pg.rdbms.*;

import oracle.pgx.config.*;

import oracle.pgx.common.types.*;

Also include TinkerPop Java APIs.


2.5.1.2 TinkerPop Java APIs

Apache TinkerPop supports the property graph data model. The API provides utilities for manipulating graphs, which you use primarily through the Spatial and Graph property graph data access layer Java APIs.

To use the TinkerPop APIs, import the classes into your Java program:

import org.apache.tinkerpop.gremlin.structure.Vertex;

import org.apache.tinkerpop.gremlin.structure.Edge;


2.5.1.3 Oracle Database Property Graph Java APIs

The Oracle Database property graph Java APIs enable you to create and populate a property graph stored in Oracle Database.

To use these Java APIs, import the classes into your Java program. For example:

import oracle.pg.rdbms.*;

import java.sql.*;


2.5.2 Parallel Loading of Graph Data

A Java API is provided for performing parallel loading of graph data.

Oracle Spatial and Graph supports loading graph data into Oracle Database. Graph data can be loaded into the property graph using the following approaches:

- Vertices and/or edges can be added incrementally using the graph.addVertex(Object id)/graph.addEdge(Object id) APIs.

- Graph data can be loaded from a file in Oracle flat-file format in parallel using the OraclePropertyGraphDataLoader API.

- A property graph in GraphML, GML, or GraphSON can be loaded using GraphMLReader, GMLReader, and GraphSONReader, respectively.

This topic focuses on the parallel loading of a property graph in Oracle-defined flat file format.

Parallel data loading provides an optimized solution to data loading where the vertices (or edges) input streams are split into multiple chunks and loaded into Oracle Database in parallel. This operation involves two main overlapping phases:

- Splitting. The vertices and edges input streams are split into multiple chunks and saved into a temporary input stream. The number of chunks is determined by the degree of parallelism specified.

- Graph loading. For each chunk, a loader thread is created to process the information about the vertices (or edges) and to load the data into the property graph tables.
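The two phases can be illustrated with plain Java concurrency utilities. This is a conceptual sketch only, not the implementation of OraclePropertyGraphDataLoader: the class `ParallelLoadSketch`, the round-robin split, and the in-memory `target` collection standing in for the property graph tables are all assumptions made for illustration. Unlike the real loader, the sketch runs the two phases one after the other rather than overlapping them.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Conceptual split-then-load sketch; not the Oracle data loader. */
class ParallelLoadSketch {

    /** Splitting phase: distributes records round-robin across dop chunks. */
    static List<List<String>> split(List<String> records, int dop) {
        if (dop < 1) {
            throw new IllegalArgumentException("dop must be at least 1");
        }
        List<List<String>> chunks = new ArrayList<>();
        for (int i = 0; i < dop; i++) {
            chunks.add(new ArrayList<>());
        }
        for (int i = 0; i < records.size(); i++) {
            chunks.get(i % dop).add(records.get(i));
        }
        return chunks;
    }

    /** Loading phase: one loader task per chunk; returns the number of records loaded. */
    static int load(List<List<String>> chunks, Collection<String> target) {
        ExecutorService pool = Executors.newFixedThreadPool(chunks.size());
        try {
            List<Future<Integer>> results = new ArrayList<>();
            for (List<String> chunk : chunks) {
                // target must be safe for concurrent writes
                results.add(pool.submit(() -> {
                    target.addAll(chunk);
                    return chunk.size();
                }));
            }
            int total = 0;
            for (Future<Integer> result : results) {
                total += result.get();
            }
            return total;
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while loading", ex);
        } catch (ExecutionException ex) {
            throw new IllegalStateException("A loader task failed", ex);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<String> records = Arrays.asList("1,name,1,Alice,,", "1,age,2,,31,",
                "2,name,1,Bob,,", "2,age,2,,27,");
        Collection<String> target = new ConcurrentLinkedQueue<>();
        int loaded = load(split(records, 2), target);
        System.out.println("Loaded " + loaded + " records");
    }
}
```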

OraclePropertyGraphDataLoader supports parallel data loading using several different options:


2.5.2.1 JDBC-Based Data Loading

JDBC-based data loading uses Java Database Connectivity (JDBC) APIs to load the graph data into Oracle Database. In this option, the vertices (or edges) in the given input stream will be spread among multiple chunks by the splitter thread. Each chunk will be processed by a different loader thread that inserts all the elements in the chunk into a temporary work table using JDBC batching. The number of splitter and loader threads used is determined by the degree of parallelism (DOP) specified by the user.

After all the graph data is loaded into the temporary work tables, all the data stored in the temporary work tables is loaded into the property graph VT$ and GE$ tables.

The following example loads the graph data from vertex and edge files in Oracle-defined flat-file format using JDBC-based parallel data loading with a degree of parallelism of 48.

String szOPVFile = "../../data/connections.opv";

String szOPEFile = "../../data/connections.ope";

OraclePropertyGraph opg = OraclePropertyGraph.getInstance(args, szGraphName);

OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();

opgdl.loadData(opg, szOPVFile, szOPEFile, 48 /* DOP */, 1000 /* batch size */, true /* rebuild index flag */, "pddl=t,pdml=t" /* options */);

To optimize the performance of the data loading operations, a set of flags and hints can be specified when calling the JDBC-based data loading. These hints include:

- DOP: The degree of parallelism to use when loading the data. This parameter determines the number of chunks to generate when splitting the file as well as the number of loader threads to use when loading the data into the property graph VT$ and GE$ tables.

- Batch Size: An integer specifying the batch size to use for Oracle update statements in batching mode. The default batch size used in the JDBC-based data loading is 1000.

- Rebuild index: If this flag is set to true, the data loader will disable all the indexes and constraints defined over the property graph where the data will be loaded. After all the data is loaded into the property graph, all the indexes and constraints will be rebuilt.

- Load options: An option (or multiple options delimited by commas) to optimize the data loading operations. These options include:

  - NO_DUP=T: Assumes the input data does not have invalid duplicates. In a valid property graph, each vertex (or edge) can have at most one value for a given property key. In an invalid property graph, a vertex (or edge) may have two or more values for a particular key. For example, a vertex v with the two key/value pairs name/"John" and name/"Johnny" is invalid because both pairs share the same key.

  - PDML=T: Enables parallel execution for DML operations for the database session used in the data loader. This hint is used to improve the performance of long-running batching jobs.

  - PDDL=T: Enables parallel execution for DDL operations for the database session used in the data loader. This hint is used to improve the performance of long-running batching jobs.

  - KEEP_WORK_TABS=T: Skips cleaning and deleting the working tables after the data loading is complete. This is for debugging use only.

  - KEEP_TMP_FILES=T: Skips removing the temporary splitter files after the data loading is complete. This is for debugging use only.

- Splitter Flag: An integer value defining the type of files or streams used in the splitting phase to generate the data chunks used in the graph loading phase. The temporary files can be created as regular files (0), named pipes (1), or piped streams (2). By default, JDBC-based data loading uses piped streams to handle intermediate data chunks. Piped streams are for the JDBC-based loader only. They are purely in-memory and efficient, and do not require any files to be created on the operating system.

  Regular files consume space on the local operating system, while named pipes appear as empty files on the local operating system. Note that not every operating system has support for named pipes.

- Split File Prefix: The prefix used for the temporary files or pipes created when the splitting phase is generating the data chunks for the graph loading. By default, the prefix “OPG_Chunk” is used for regular files and “OPG_Pipe” is used for named pipes.

- Tablespace: The name of the tablespace where all the temporary work tables will be created.

Subtopics:

- JDBC-Based Data Loading with Multiple Files

- JDBC-Based Data Loading with Partitions

- JDBC-based Parallel Data Loading Using Fine-Tuning

JDBC-Based Data Loading with Multiple Files

JDBC-based data loading also supports loading vertices and edges from multiple files or input streams into the database. The following code fragment loads multiple vertex and edge files using the parallel data loading APIs. In the example, two string arrays szOPVFiles and szOPEFiles are used to hold the input files.

String[] szOPVFiles = new String[] {"../../data/connections-p1.opv",

"../../data/connections-p2.opv"};

String[] szOPEFiles = new String[] {"../../data/connections-p1.ope",

"../../data/connections-p2.ope"};

OraclePropertyGraph opg = OraclePropertyGraph.getInstance( args, szGraphName);

OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();

opgdl.loadData(opg, szOPVFiles, szOPEFiles, 48 /* DOP */,

1000 /* batch size */,

true /* rebuild index flag */,

"pddl=t,pdml=t" /* options */);

JDBC-Based Data Loading with Partitions

When dealing with graph data of thousands to hundreds of thousands of elements, the JDBC-based data loading API allows loading the graph data in Oracle flat file format into Oracle Database using logical partitioning.

Each partition represents a subset of the vertices (or edges) in the graph data file, whose size is approximately the number of distinct element IDs in the file divided by the number of partitions. Each partition is identified by an integer ID in the range [0, number of partitions – 1].

To use parallel data loading with partitions, you must specify the total number of logical partitions to use and the partition offset (start ID) in addition to the base parameters used in the loadData API. To fully load a graph data file or input stream into the database, you must execute the data loading operation as many times as the defined number of partitions. For example, to load the graph data from a file using two partitions, there should be two data loading API calls using an offset of 0 and 1. Each call to the data loader can be processed using multiple threads or a separate Java client on a single system or multiple systems.

Note that this approach is intended to be used with a single vertex file (or input stream) and a single edge file (or input stream). Additionally, this option requires disabling the indices and constraints on vertices and edges. These indices and constraints must be rebuilt after all partitions have been loaded.
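The idea of loading one logical partition per call can be sketched as follows. The source does not say how element IDs are assigned to partitions, so the modulo rule used here is purely an assumption chosen for illustration, and `LogicalPartitionSketch` is a hypothetical class rather than part of the loader API. What the sketch does show faithfully is the contract described above: one call per offset from 0 to the number of partitions minus 1, with the calls together covering every element exactly once.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Conceptual illustration of logical partitions; the modulo assignment is an assumption. */
class LogicalPartitionSketch {

    /** Returns the element IDs that a loader call with the given partition offset would handle. */
    static List<Long> idsForPartition(List<Long> ids, int numPartitions, int offset) {
        if (numPartitions < 1 || offset < 0 || offset >= numPartitions) {
            throw new IllegalArgumentException("offset must be in [0, numPartitions - 1]");
        }
        List<Long> selected = new ArrayList<>();
        for (long id : ids) {
            // Assumed rule: an element belongs to partition (id mod numPartitions)
            if (Math.floorMod(id, (long) numPartitions) == offset) {
                selected.add(id);
            }
        }
        return selected;
    }

    public static void main(String[] args) {
        List<Long> ids = Arrays.asList(1L, 2L, 3L, 4L, 5L);
        int numPartitions = 2;
        // One "loading call" per partition offset
        for (int offset = 0; offset < numPartitions; offset++) {
            System.out.println("offset " + offset + ": " + idsForPartition(ids, numPartitions, offset));
        }
    }
}
```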

The following example loads the graph data using two partitions. Each partition is loaded by one Java process, DataLoaderWorker. To coordinate multiple workers, a coordinator process named DataLoaderCoordinator is used. This example does the following:

- Disables all indexes and constraints.

- Creates a temporary working table, loaderProgress, that records the data loading progress (that is, how many workers have finished their work). All DataLoaderWorker processes start loading data after the working table is created.

- Increments the progress by 1 as each worker finishes.

- Keeps polling (using the DataLoaderCoordinator process) the progress until all DataLoaderWorker processes are done.

- Rebuilds all indexes and constraints.

Note: In DataLoaderWorker, the flag SKIP_INDEX should be set to true and the flag rebuildIndx should be set to false.

// start DataLoaderCoordinator, set dop = 8 and number of partitions = 2

java DataLoaderCoordinator jdbcUrl user password pg 8 2

// start the first DataLoaderWorker, set dop = 8, number of partitions = 2, partition offset = 0

java DataLoaderWorker jdbcUrl user password pg 8 2 0

// start the second DataLoaderWorker, set dop = 8, number of partitions = 2, partition offset = 1

java DataLoaderWorker jdbcUrl user password pg 8 2 1

The DataLoaderCoordinator first disables all indexes and constraints. It then creates a table named loaderProgress and inserts one row with column progress = 0.

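import java.io.IOException;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.util.List;

import oracle.pg.common.*;

import oracle.pg.rdbms.*;

public class DataLoaderCoordinator {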

public static void main(String[] szArgs) {

String jdbcUrl = szArgs[0];

String user = szArgs[1];

String password = szArgs[2];

String graphName = szArgs[3];

int dop = Integer.parseInt(szArgs[4]);

int numLoaders = Integer.parseInt(szArgs[5]);

Oracle oracle = null;

OraclePropertyGraph opg = null;

try {

oracle = new Oracle(jdbcUrl, user, password);

OraclePropertyGraphUtils.dropPropertyGraph(oracle, graphName);

opg = OraclePropertyGraph.getInstance(oracle, graphName);

List<String> vIndices = opg.disableVertexTableIndices();

List<String> vConstraints = opg.disableVertexTableConstraints();

List<String> eIndices = opg.disableEdgeTableIndices();

List<String> eConstraints = opg.disableEdgeTableConstraints();

String szStmt = null;

try {

szStmt = "drop table loaderProgress";

opg.getOracle().executeUpdate(szStmt);

}

catch (SQLException ex) {

if (ex.getErrorCode() == 942) {

// table does not exist. ignore

}

else {

throw new OraclePropertyGraphException(ex);

}

}

szStmt = "create table loaderProgress (progress integer)";

opg.getOracle().executeUpdate(szStmt);

szStmt = "insert into loaderProgress (progress) values (0)";

opg.getOracle().executeUpdate(szStmt);

opg.getOracle().getConnection().commit();

while (true) {

if (checkLoaderProgress(oracle) == numLoaders) {

break;

} else {

Thread.sleep(1000);

}

}

opg.rebuildVertexTableIndices(vIndices, dop, null);

opg.rebuildVertexTableConstraints(vConstraints, dop, null);

opg.rebuildEdgeTableIndices(eIndices, dop, null);

opg.rebuildEdgeTableConstraints(eConstraints, dop, null);

}

catch (IOException ex) {

throw new OraclePropertyGraphException(ex);

}

catch (SQLException ex) {

throw new OraclePropertyGraphException(ex);

}

catch (InterruptedException ex) {

throw new OraclePropertyGraphException(ex);

}

catch (Exception ex) {

throw new OraclePropertyGraphException(ex);

}

finally {

try {

if (opg != null) {

opg.shutdown();

}

if (oracle != null) {

oracle.dispose();

}

}

catch (Throwable t) {

System.out.println(t);

}

}

}

private static int checkLoaderProgress(Oracle oracle) {

int result = 0;

ResultSet rs = null;

try {

String szStmt = "select progress from loaderProgress";

rs = oracle.executeQuery(szStmt);

if (rs.next()) {

result = rs.getInt(1);

}

}

catch (Exception ex) {

throw new OraclePropertyGraphException(ex);

}

finally {

try {

if (rs != null) {

rs.close();

}

}

catch (Throwable t) {

System.out.println(t);

}

}

return result;

}

}

public class DataLoaderWorker {

public static void main(String[] szArgs) {

String jdbcUrl = szArgs[0];

String user = szArgs[1];

String password = szArgs[2];

String graphName = szArgs[3];

int dop = Integer.parseInt(szArgs[4]);

int numLoaders = Integer.parseInt(szArgs[5]);

int offset = Integer.parseInt(szArgs[6]);

Oracle oracle = null;

OraclePropertyGraph opg = null;

try {

oracle = new Oracle(jdbcUrl, user, password);

opg = OraclePropertyGraph.getInstance(oracle, graphName, 8, dop, null/*tbs*/, ",SKIP_INDEX=T,");

OraclePropertyGraphDataLoader opgdal = OraclePropertyGraphDataLoader.getInstance();

while (true) {

if (checkLoaderProgress(oracle) == 1) {

break;

} else {

Thread.sleep(1000);

}

}

String opvFile = "../../../data/connections.opv";

String opeFile = "../../../data/connections.ope";

opgdal.loadData(opg, opvFile, opeFile, dop, numLoaders, offset, 1000, false, null, "pddl=t,pdml=t");

updateLoaderProgress(oracle);

}

catch (SQLException ex) {

throw new OraclePropertyGraphException(ex);

}

catch (InterruptedException ex) {

throw new OraclePropertyGraphException(ex);

}

finally {

try {

if (opg != null) {

opg.shutdown();

}

if (oracle != null) {

oracle.dispose();

}

}

catch (Throwable t) {

System.out.println(t);

}

}

}

private static int checkLoaderProgress(Oracle oracle) {

int result = 0;

ResultSet rs = null;

try {

String szStmt = "select count(*) from loaderProgress";

rs = oracle.executeQuery(szStmt);

if (rs.next()) {

result = rs.getInt(1);

}

}

catch (SQLException ex) {

if (ex.getErrorCode() == 942) {

// table does not exist. ignore

} else {

throw new OraclePropertyGraphException(ex);

}

}

finally {

try {

if (rs != null) {

rs.close();

}

}

catch (Throwable t) {

System.out.println(t);

}

}

return result;

}

private static void updateLoaderProgress(Oracle oracle) {

ResultSet rs = null;

try {

String szStmt = "update loaderProgress set progress = progress + 1";

oracle.executeUpdate(szStmt);

oracle.getConnection().commit();

}

catch (Exception ex) {

throw new OraclePropertyGraphException(ex);

}

finally {

try {

if (rs != null) {

rs.close();

}

}

catch (Throwable t) {

System.out.println(t);

}

}

}

}

JDBC-Based Parallel Data Loading Using Fine-Tuning

JDBC-based data loading supports fine-tuning the subset of lines to be loaded from a file, as well as the ID offset to use when loading the elements into the property graph instance. You can specify the subset of data to load from a file by specifying the maximum number of lines to read from the file and the offset line number (start position) for both vertices and edges. This way, data will be loaded from the offset line number until the maximum number of lines has been read. If the maximum number of lines is -1, the loading process will scan the data until reaching the end of file.

Because multiple graph data files may have some ID collisions or overlap, the JDBC-based data loading allows you to define a vertex and edge ID offset. This way, the ID of each loaded vertex will be the sum of the original vertex ID and the given vertex ID offset. Similarly, the ID of each loaded edge will be generated from the sum of the original edge ID and the given edge ID offset. Note that the vertices and edge files must be correlated, because the in/out vertex ID for the loaded edges will be modified with respect to the specified vertex ID offset. This operation is supported only in data loading using a single logical partition.
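The line window and ID offset rules described above can be illustrated with a small, self-contained sketch. It does not call the Oracle data loader. The class name FineTuningSketch, the helper names, the 0-based line offset, and the {edgeId, outVertexId, inVertexId} array layout are all assumptions made for illustration only.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative sketch only: models the line window and the ID arithmetic
// described in the text. It does not use the Oracle property graph APIs.
class FineTuningSketch {

    // Select lines starting at offsetLines (assumed to be 0-based here).
    // A maxLines value of -1 means "read until the end of the file".
    static List<String> selectLines(List<String> lines, long offsetLines, long maxLines) {
        List<String> selected = new ArrayList<String>();
        for (long i = offsetLines; i < lines.size(); i++) {
            if (maxLines != -1 && selected.size() >= maxLines) {
                break;
            }
            selected.add(lines.get((int) i));
        }
        return selected;
    }

    // Hypothetical edge layout: {edgeId, outVertexId, inVertexId}.
    static long[] shiftEdge(long[] edge, long vertexIdOffset, long edgeIdOffset) {
        return new long[] {
            edge[0] + edgeIdOffset,    // the edge ID uses the edge ID offset
            edge[1] + vertexIdOffset,  // both endpoints follow the vertex ID offset,
            edge[2] + vertexIdOffset   // which is why the two files must be correlated
        };
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("l0", "l1", "l2", "l3");
        System.out.println(selectLines(lines, 1, 2));   // [l1, l2]
        System.out.println(selectLines(lines, 2, -1));  // [l2, l3]

        long[] shifted = shiftEdge(new long[] {7L, 1L, 2L}, 1000L, 5000L);
        System.out.println(Arrays.toString(shifted));   // [5007, 1001, 1002]
    }
}
```

With both offsets set to 0, the IDs are left unchanged, which corresponds to loading the files without any ID adjustment.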

The following code fragment loads the first 100 vertex lines and the first 100 edge lines from the given graph data files. In this example, an ID offset of 0 is used, which indicates that no ID adjustment is performed.

String szOPVFile = "../../data/connections.opv";

String szOPEFile = "../../data/connections.ope";

// Run the data loading using fine tuning

long lVertexOffsetlines = 0;

long lEdgeOffsetlines = 0;

long lVertexMaxlines = 100;

long lEdgeMaxlines = 100;

long lVIDOffset = 0;

long lEIDOffset = 0;

OraclePropertyGraph opg = OraclePropertyGraph.getInstance( args, szGraphName);

OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();

opgdl.loadData(opg, szOPVFile, szOPEFile,

lVertexOffsetlines /* offset of lines to start loading from

partition, default 0 */,

lEdgeOffsetlines /* offset of lines to start loading from

partition, default 0 */,

lVertexMaxlines /* maximum number of lines to load from

partition, default -1 (all lines in partition) */,

lEdgeMaxlines /* maximum number of lines to load from

partition, default -1 (all lines in partition) */,

lVIDOffset /* vertex ID offset: the vertex ID will be original

vertex ID + offset, default 0 */,

lEIDOffset /* edge ID offset: the edge ID will be original edge ID

+ offset, default 0 */,

4 /* DOP */,

1 /* Total number of partitions, default 1 */,

0 /* Partition to load: from 0 to totalPartitions - 1, default 0 */,

OraclePropertyGraphDataLoader.PIPEDSTREAM /* splitter flag */,

"chunkPrefix" /* prefix: the prefix used to generate split chunks

for regular files or named pipes */,

1000 /* batch size: batch size of Oracle update in batching mode.

Default value is 1000 */,

true /* rebuild index */,

null /* table space name*/,

"pddl=t,pdml=t" /* options: enable parallel DDL and DML */);


2.5.2.2 External Table-Based Data Loading

External table-based data loading uses an external table to load the graph data into Oracle Database. External table loading allows users to access the data in external sources as if it were in a regular relational table in the database. In this case, the vertices (or edges) in the given input stream will be spread among multiple chunks by the splitter thread. Each chunk will be processed by a different loader thread that is in charge of passing all the elements in the chunk to Oracle Database. The number of splitter and loader threads used is determined by the degree of parallelism (DOP) specified by the user.

After the external tables are automatically created by the data loading logic, the loader will read from the external tables and load all the data into the property graph schema tables (VT$ and GE$).
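The splitter and loader roles described above can be pictured with a minimal, self-contained sketch. It is not the loader's actual implementation. The class name SplitAndLoadSketch, the round-robin distribution of lines, and the use of an element count as a stand-in for the database insert are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Illustrative sketch only: one splitter step that produces DOP chunks,
// followed by one loader task per chunk.
class SplitAndLoadSketch {

    // Splitter: spread the input lines over dop chunks.
    // Round-robin distribution is an assumption; dop must be at least 1.
    static List<List<String>> split(List<String> lines, int dop) {
        List<List<String>> chunks = new ArrayList<List<String>>();
        for (int i = 0; i < dop; i++) {
            chunks.add(new ArrayList<String>());
        }
        for (int i = 0; i < lines.size(); i++) {
            chunks.get(i % dop).add(lines.get(i));
        }
        return chunks;
    }

    // Loaders: each chunk is handled by its own task. Counting the elements
    // stands in for passing them to the database.
    static long load(List<List<String>> chunks) {
        ExecutorService pool = Executors.newFixedThreadPool(chunks.size());
        try {
            List<Future<Integer>> pending = new ArrayList<Future<Integer>>();
            for (final List<String> chunk : chunks) {
                Callable<Integer> loader = () -> chunk.size();
                pending.add(pool.submit(loader));
            }
            long loaded = 0;
            for (Future<Integer> f : pending) {
                loaded += f.get();
            }
            return loaded;
        } catch (Exception e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("v1", "v2", "v3", "v4", "v5");
        List<List<String>> chunks = split(lines, 2);
        System.out.println(chunks);        // [[v1, v3, v5], [v2, v4]]
        System.out.println(load(chunks));  // 5
    }
}
```

In this sketch the DOP value fixes both the number of chunks and the number of loader threads, mirroring the relationship described in the text.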

External table-based data loading requires a directory object where the files read by the external tables will be stored. This directory can be created by running the following statements in a SQL*Plus environment:

create or replace directory tmp_dir as '/tmppath/';

grant read, write on directory tmp_dir to public;

The following code fragment loads the graph data from vertex and edge files in Oracle flat-file format using external table-based parallel data loading with a degree of parallelism of 48.

String szOPVFile = "../../data/connections.opv";

String szOPEFile = "../../data/connections.ope";

String szExtDir = "tmp_dir";

OraclePropertyGraph opg = OraclePropertyGraph.getInstance( args, szGraphName);

OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();

opgdl.loadDataWithExtTab(opg, szOPVFile, szOPEFile, 48 /*DOP*/,

true /*named pipe flag: setting the flag to true will use

named pipe based splitting; otherwise, regular file

based splitting would be used*/,

szExtDir /* database directory object */,

true /*rebuild index */,

"pddl=t,pdml=t,NO_DUP=T" /*options */);

To optimize the performance of the data loading operations, a set of flags and hints can be specified when calling the external table-based data loading API. These flags and hints include:

-

DOP: The degree of parallelism to use when loading the data. This parameter determines the number of chunks to generate when splitting the file, as well as the number of loader threads to use when loading the data into the property graph VT$ and GE$ tables.

-

Rebuild index: If this flag is set to true, the data loader will disable all the indexes and constraints defined over the property graph where the data will be loaded. After all the data is loaded into the property graph, all the indexes and constraints will be rebuilt.

-

Load options: An option (or multiple options delimited by commas) to optimize the data loading operations. These options include:

-

NO_DUP=T: Chooses a faster way to load the data into the property graph tables, because no validation for duplicate key/value pairs will be conducted.

-

PDML=T: Enables parallel execution for DML operations for the database session used in the data loader. This hint is used to improve the performance of long-running batching jobs.

-

PDDL=T: Enables parallel execution for DDL operations for the database session used in the data loader. This hint is used to improve the performance of long-running batching jobs.

-

KEEP_WORK_TABS=T: Skips cleaning and deleting the working tables after the data loading is complete. This is for debugging use only.

-

KEEP_TMP_FILES=T: Skips removing the temporary splitter files after the data loading is complete. This is for debugging use only.

-

Splitter Flag: An integer value defining the type of files or streams used in the splitting phase to generate the data chunks used in the graph loading phase. The temporary files can be created as regular files (0) or named pipes (1).

By default, external table-based data loading uses regular files to handle temporary files for data chunks. Named pipes can be used only on operating systems that support them. It is generally a good practice to use regular files together with DBFS.

-

Split File Prefix: The prefix used for the temporary files or pipes created when the splitting phase is generating the data chunks for the graph loading. By default, the prefix “Chunk” is used for regular files and “Pipe” is used for named pipes.

-

Tablespace: The name of the tablespace where all the temporary work tables will be created.

As with the JDBC-based data loading, external table-based data loading supports parallel data loading using a single file, multiple files, partitions, and fine-tuning.

Subtopics:

-

External Table-Based Data Loading with Multiple Files

-

External Table-Based Data Loading with Partitions

-

External Table-Based Parallel Data Loading Using Fine-Tuning

External Table-Based Data Loading with Multiple Files

External table-based data loading also supports loading vertices and edges from multiple files or input streams into the database. The following code fragment loads multiple vertex and edge files using the parallel data loading APIs. In the example, two string arrays szOPVFiles and szOPEFiles are used to hold the input files.

String[] szOPVFiles = new String[] {"../../data/connections-p1.opv",

"../../data/connections-p2.opv"};

String[] szOPEFiles = new String[] {"../../data/connections-p1.ope",

"../../data/connections-p2.ope"};

String szExtDir = "tmp_dir";

OraclePropertyGraph opg = OraclePropertyGraph.getInstance( args, szGraphName);

OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();

opgdl.loadDataWithExtTab(opg, szOPVFiles, szOPEFiles, 48 /* DOP */,

true /* named pipe flag */,

szExtDir /* database directory object */,

true /* rebuild index flag */,

"pddl=t,pdml=t" /* options */);

External Table-Based Data Loading with Partitions

When dealing with a very large property graph, the external table-based data loading API allows loading the graph data in Oracle flat-file format into Oracle Database using logical partitioning. Each partition represents a subset of the vertices (or edges) in the graph data file, whose size is approximately the number of distinct element IDs in the file divided by the number of partitions. Each partition is identified by an integer ID in the range of [0, Number of partitions – 1].

To use parallel data loading with partitions, you must specify the total number of partitions to use and the partition offset, in addition to the base parameters used in the loadDataWithExtTab API. To fully load a graph data file or input stream into the database, you must execute the data loading operation as many times as the defined number of partitions. For example, to load the graph data from a file using two partitions, there should be two data loading API calls using an offset of 0 and 1. Each call to the data loader can be processed using multiple threads or a separate Java client on a single system or multiple systems.

Note that this approach is intended to be used with a single vertex file (or input stream) and a single edge file (or input stream). Additionally, this option requires disabling the indexes and constraints on vertices and edges. These indexes and constraints must be rebuilt after all partitions have been loaded.

The example for JDBC-based data loading with partitions can be easily migrated to work as external table-based loading with partitions. The only changes needed are to replace the API loadData() with loadDataWithExtTab(), and to supply some additional input parameters such as the database directory object.
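The requirement to call the loader once per partition offset can be pictured with the following self-contained sketch. How the data loader actually assigns an element to a partition is not stated here; the modulo rule below, the class name PartitionSketch, and the helper idsForPartition are assumptions chosen only to show that the calls for offsets 0 through N - 1 together cover every element exactly once.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative sketch only: it does not call the Oracle data loader.
class PartitionSketch {

    // Assumption: an element belongs to partition (id mod totalPartitions).
    static List<Long> idsForPartition(List<Long> ids, int totalPartitions, int offset) {
        List<Long> selected = new ArrayList<Long>();
        for (long id : ids) {
            if (Math.floorMod(id, (long) totalPartitions) == offset) {
                selected.add(id);
            }
        }
        return selected;
    }

    public static void main(String[] args) {
        List<Long> ids = Arrays.asList(1L, 2L, 3L, 4L, 5L);
        int totalPartitions = 2;
        int loaded = 0;
        // One "load call" per partition offset, as the text requires.
        for (int offset = 0; offset < totalPartitions; offset++) {
            List<Long> part = idsForPartition(ids, totalPartitions, offset);
            System.out.println("partition " + offset + ": " + part);
            loaded += part.size();
        }
        // partition 0: [2, 4]
        // partition 1: [1, 3, 5]
        System.out.println("loaded " + loaded + " of " + ids.size()); // loaded 5 of 5
    }
}
```

Skipping any offset in the loop would leave the corresponding subset of elements unloaded, which is why the number of calls must match the number of partitions.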

External Table-Based Parallel Data Loading Using Fine-Tuning

External table-based data loading also supports fine-tuning the subset of lines to be loaded from a file, as well as the ID offset to use when loading the elements into the property graph instance. You can specify the subset of data to load from a file by specifying the maximum number of lines to read from the file as well as the offset line number for both vertices and edges. This way, data will be loaded from the offset line number until the maximum number of lines has been read. If the maximum number of lines is -1, the loading process will scan the data until reaching the end of file.

Because graph data files may have some ID collisions, the external table-based data loading allows you to define a vertex and edge ID offset. This way, the ID of each loaded vertex will be the sum of the original vertex ID and the given vertex ID offset. Similarly, the ID of each loaded edge will be the sum of the original edge ID and the given edge ID offset. Note that the vertex and edge files must be correlated, because the in/out vertex ID for the loaded edges will be modified with respect to the specified vertex ID offset. This operation is supported only in data loading using a single partition.

The following code fragment loads the first 100 vertex lines and the first 100 edge lines from the given graph data files. In this example, an ID offset of 0 is used, so no ID adjustment is performed.

String szOPVFile = "../../data/connections.opv";

String szOPEFile = "../../data/connections.ope";

// Run the data loading using fine tuning

long lVertexOffsetlines = 0;

long lEdgeOffsetlines = 0;

long lVertexMaxlines = 100;

long lEdgeMaxlines = 100;

long lVIDOffset = 0;

long lEIDOffset = 0;

String szExtDir = "tmp_dir";

OraclePropertyGraph opg = OraclePropertyGraph.getInstance( args, szGraphName);

OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();

opgdl.loadDataWithExtTab(opg, szOPVFile, szOPEFile,

lVertexOffsetlines /* offset of lines to start loading

from partition, default 0 */,

lEdgeOffsetlines /* offset of lines to start loading from

partition, default 0 */,

lVertexMaxlines /* maximum number of lines to load

from partition, default -1

(all lines in partition) */,

lEdgeMaxlines /* maximum number of lines to load

from partition, default -1 (all lines in

partition) */,

lVIDOffset /* vertex ID offset: the vertex ID will be

original vertex ID + offset, default 0 */,

lEIDOffset /* edge ID offset: the edge ID will be

original edge ID + offset, default 0 */,

4 /* DOP */,

1 /* Total number of partitions, default 1 */,

0 /* Partition to load (from 0 to totalPartitions - 1,

default 0) */,

OraclePropertyGraphDataLoader.NAMEDPIPE

/* splitter flag */,

"chunkPrefix" /* prefix */,

szExtDir /* database directory object */,

true /* rebuild index flag */,

"pddl=t,pdml=t" /* options */);


2.5.2.3 SQL*Loader-Based Data Loading

SQL*Loader-based data loading uses Oracle SQL*Loader to load the graph data into Oracle Database. SQL*Loader loads data from external files into Oracle Database tables. In this case, the vertices (or edges) in the given input stream will be spread among multiple chunks by the splitter thread. Each chunk will be processed by a different loader thread that inserts all the elements in the chunk into a temporary work table using SQL*Loader. The number of splitter and loader threads used is determined by the degree of parallelism (DOP) specified by the user.

After all the graph data is loaded into the temporary work table, the graph loader will load all the data stored in the temporary work tables into the property graph VT$ and GE$ tables.

The following code fragment loads the graph data from vertex and edge files in Oracle flat-file format using SQL*Loader-based parallel data loading with a degree of parallelism of 48. To use the APIs, the path to SQL*Loader must be specified.

String szUser = "username";

String szPassword = "password";

String szDbId = "db18c"; /*service name of the database*/

String szOPVFile = "../../data/connections.opv";

String szOPEFile = "../../data/connections.ope";

String szSQLLoaderPath = "<YOUR_ORACLE_HOME>/bin/sqlldr";

OraclePropertyGraph opg = OraclePropertyGraph.getInstance( args, szGraphName);

OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();

opgdl.loadDataWithSqlLdr(opg, szUser, szPassword, szDbId,

szOPVFile, szOPEFile,

48 /* DOP */,

true /*named pipe flag */,

szSQLLoaderPath /* SQL*Loader path: the path to

bin/sqlldr*/,

true /*rebuild index */,

"pddl=t,pdml=t" /* options */);

As with JDBC-based data loading, SQL*Loader-based data loading supports parallel data loading using a single file, multiple files, partitions, and fine-tuning.

Subtopics:

-

SQL*Loader-Based Data Loading with Multiple Files

-

SQL*Loader-Based Data Loading with Partitions

-

SQL*Loader-Based Parallel Data Loading Using Fine-Tuning

SQL*Loader-Based Data Loading with Multiple Files

SQL*Loader-based data loading supports loading vertices and edges from multiple files or input streams into the database. The following code fragment loads multiple vertex and edge files using the parallel data loading APIs. In the example, two string arrays szOPVFiles and szOPEFiles are used to hold the input files.

String szUser = "username";

String szPassword = "password";

String szDbId = "db18c"; /*service name of the database*/

String[] szOPVFiles = new String[] {"../../data/connections-p1.opv",

"../../data/connections-p2.opv"};

String[] szOPEFiles = new String[] {"../../data/connections-p1.ope",

"../../data/connections-p2.ope"};

String szSQLLoaderPath = "../../../dbhome_1/bin/sqlldr";

OraclePropertyGraph opg = OraclePropertyGraph.getInstance( args, szGraphName);

OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();

opgdl.loadDataWithSqlLdr(opg, szUser, szPassword, szDbId,

szOPVFiles, szOPEFiles,

48 /* DOP */,

true /* named pipe flag */,

szSQLLoaderPath /* SQL*Loader path */,

true /* rebuild index flag */,

"pddl=t,pdml=t" /* options */);

SQL*Loader-Based Data Loading with Partitions

When dealing with a large property graph, the SQL*Loader-based data loading API allows loading the graph data in Oracle flat-file format into Oracle Database using logical partitioning. Each partition represents a subset of the vertices (or edges) in the graph data file, whose size is approximately the number of distinct element IDs in the file divided by the number of partitions. Each partition is identified by an integer ID in the range of [0, Number of partitions – 1].

To use parallel data loading with partitions, you must specify the total number of partitions to use and the partition offset, in addition to the base parameters used in the loadDataWithSqlLdr API. To fully load a graph data file or input stream into the database, you must execute the data loading operation as many times as the defined number of partitions. For example, to load the graph data from a file using two partitions, there should be two data loading API calls using an offset of 0 and 1. Each call to the data loader can be processed using multiple threads or a separate Java client on a single system or multiple systems.

Note that this approach is intended to be used with a single vertex file (or input stream) and a single edge file (or input stream). Additionally, this option requires disabling the indexes and constraints on vertices and edges. These indexes and constraints must be rebuilt after all partitions have been loaded.

The example for JDBC-based data loading with partitions can be easily migrated to work as SQL*Loader-based loading with partitions. The only changes needed are to replace the API loadData() with loadDataWithSqlLdr(), and to supply some additional input parameters such as the location of SQL*Loader.

SQL*Loader-Based Parallel Data Loading Using Fine-Tuning

SQL*Loader-based data loading supports fine-tuning the subset of lines to be loaded from a file, as well as the ID offset to use when loading the elements into the property graph instance. You can specify the subset of data to load from a file by specifying the maximum number of lines to read from the file and the offset line number for both vertices and edges. This way, data will be loaded from the offset line number until the maximum number of lines has been read. If the maximum number of lines is -1, the loading process will scan the data until reaching the end of file.

Because graph data files may have some ID collisions, the SQL*Loader-based data loading allows you to define a vertex and edge ID offset. This way, the ID of each loaded vertex will be the sum of the original vertex ID and the given vertex ID offset. Similarly, the ID of each loaded edge will be the sum of the original edge ID and the given edge ID offset. Note that the vertex and edge files must be correlated, because the in/out vertex ID for the loaded edges will be modified with respect to the specified vertex ID offset. This operation is supported only in data loading using a single partition.

The following code fragment loads the first 100 vertex lines and the first 100 edge lines from the given graph data files. In this example, an ID offset of 0 is used, so no ID adjustment is performed.

String szUser = "username";

String szPassword = "password";

String szDbId = "db18c"; /* service name of the database */

String szOPVFile = "../../data/connections.opv";

String szOPEFile = "../../data/connections.ope";

String szSQLLoaderPath = "../../../dbhome_1/bin/sqlldr";

// Run the data loading using fine tuning

long lVertexOffsetlines = 0;

long lEdgeOffsetlines = 0;

long lVertexMaxlines = 100;

long lEdgeMaxlines = 100;

long lVIDOffset = 0;

long lEIDOffset = 0;

OraclePropertyGraph opg = OraclePropertyGraph.getInstance( args, szGraphName);

OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();

opgdl.loadDataWithSqlLdr(opg, szUser, szPassword, szDbId,

szOPVFile, szOPEFile,

lVertexOffsetlines /* offset of lines to start loading

from partition, default 0*/,

lEdgeOffsetlines /* offset of lines to start loading from

partition, default 0*/,

lVertexMaxlines /* maximum number of lines to load

from partition, default -1

(all lines in partition) */,

lEdgeMaxlines /* maximum number of lines to load

from partition, default -1 (all lines in

partition) */,

lVIDOffset /* vertex ID offset: the vertex ID will be

original vertex ID + offset, default 0 */,

lEIDOffset /* edge ID offset: the edge ID will be

original edge ID + offset, default 0 */,

48 /* DOP */,

1 /* Total number of partitions, default 1 */,

0 /* Partition to load (from 0 to totalPartitions - 1,

default 0) */,

OraclePropertyGraphDataLoader.NAMEDPIPE

/* splitter flag */,

"chunkPrefix" /* prefix */,

szSQLLoaderPath /* SQL*Loader path: the path to

bin/sqlldr*/,

true /* rebuild index */,

"pddl=t,pdml=t" /* options */);


2.5.3 Parallel Retrieval of Graph Data

The parallel property graph query provides a simple Java API to perform parallel scans on vertices (or edges). Parallel retrieval is an optimized solution taking advantage of the distribution of the data across table partitions, so each partition is queried using a separate database connection.

Parallel retrieval will produce an array where each element holds all the vertices (or edges) from a specific partition (split). The subset of splits queried is determined by the given start split ID and the size of the connections array provided. This way, the subset will consider splits in the range of [start, start - 1 + size of connections array]. Note that an integer ID (in the range of [0, N - 1]) is assigned to all the splits in the vertex table with N splits.
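The range arithmetic above can be checked with a small, self-contained sketch. The class name SplitRangeSketch and the helper range are hypothetical, and clamping the upper bound to N - 1 when the number of splits is not a multiple of the number of connections is an assumption; the behavior of the real API in that case is not described here.

```java
// Illustrative sketch only: computes which split IDs each call would cover.
class SplitRangeSketch {

    // Returns {firstSplit, lastSplit} for one call, following the range
    // [start, start - 1 + connections]. The clamp to totalSplits - 1 is an assumption.
    static int[] range(int start, int connections, int totalSplits) {
        int last = Math.min(start - 1 + connections, totalSplits - 1);
        return new int[] {start, last};
    }

    public static void main(String[] args) {
        int totalSplits = 8;  // N splits, with IDs 0..7
        int connections = 3;  // size of the connections array
        for (int start = 0; start < totalSplits; start += connections) {
            int[] r = range(start, connections, totalSplits);
            System.out.println("call covers splits " + r[0] + ".." + r[1]);
        }
        // call covers splits 0..2
        // call covers splits 3..5
        // call covers splits 6..7
    }
}
```

Advancing the start split ID by the size of the connections array on each call, as in the loop above, visits every split once.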

The following code loads a property graph, opens an array of connections, and executes a parallel query to retrieve all vertices and edges using the opened connections. The number of calls to the getVerticesPartitioned (getEdgesPartitioned) method is controlled by the total number of splits and the number of connections used.

OraclePropertyGraph opg = OraclePropertyGraph.getInstance(args, szGraphName);

// Clear existing vertices/edges in the property graph

opg.clearRepository();

String szOPVFile = "../../data/connections.opv";

String szOPEFile = "../../data/connections.ope";

// This object will handle parallel data loading

OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();

opgdl.loadData(opg, szOPVFile, szOPEFile, dop);

// Create connections used in parallel query

Oracle[] oracleConns = new Oracle[dop];

Connection[] conns = new Connection[dop];

for (int i = 0; i < dop; i++) {

oracleConns[i] = opg.getOracle().clone();

conns[i] = oracleConns[i].getConnection();

}

long lCountV = 0;

// Iterate over all the vertices’ partitionIDs to count all the vertices

for (int partitionID = 0; partitionID < opg.getVertexPartitionsNumber();

partitionID += dop) {

Iterable<Vertex>[] iterables

= opg.getVerticesPartitioned(conns /* Connection array */,

true /* skip store to cache */,

partitionID /* starting partition */);

lCountV += consumeIterables(iterables); /* consume iterables using

threads */

}

// Count all vertices

System.out.println("Vertices found using parallel query: " + lCountV);

long lCountE = 0;

// Iterate over all the edges’ partitionIDs to count all the edges

for (int partitionID = 0; partitionID < opg.getEdgeTablePartitionIDs();

partitionID += dop) {

Iterable<Edge>[] iterables

= opg.getEdgesPartitioned(conns /* Connection array */,

true /* skip store to cache */,

partitionID /* starting partitionID */);

lCountE += consumeIterables(iterables); /* consume iterables using

threads */

}

// Count all edges

System.out.println("Edges found using parallel query: " + lCountE);

// Close the connections to the database after completed

for (int idx = 0; idx < conns.length; idx++) {

conns[idx].close();

}
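The preceding fragment calls a consumeIterables helper that is not shown. One possible shape for such a helper is sketched below using only standard Java concurrency classes. The class name ConsumeIterablesSketch and the choice of one thread per iterable are assumptions; this is not necessarily how the original example implements it.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Illustrative sketch only: counts the elements of each iterable in its own thread.
class ConsumeIterablesSketch {

    static <T> long consumeIterables(Iterable<T>[] iterables) {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, iterables.length));
        try {
            List<Future<Long>> counts = new ArrayList<Future<Long>>();
            for (final Iterable<T> iterable : iterables) {
                Callable<Long> task = () -> {
                    long n = 0;
                    if (iterable != null) {
                        for (T ignored : iterable) {
                            n++;  // a real consumer would process the element here
                        }
                    }
                    return n;
                };
                counts.add(pool.submit(task));
            }
            long total = 0;
            for (Future<Long> f : counts) {
                total += f.get();  // wait for each worker and add its count
            }
            return total;
        } catch (Exception e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        @SuppressWarnings("unchecked")
        Iterable<String>[] parts = new Iterable[] {
            Arrays.asList("a", "b"), Arrays.asList("c")
        };
        System.out.println(consumeIterables(parts)); // 3
    }
}
```

Because the helper is generic, the same method can be used for both the Iterable<Vertex>[] and the Iterable<Edge>[] arrays returned by the partitioned queries.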


2.5.4 Using an Element Filter Callback for Subgraph Extraction

Oracle Spatial and Graph provides support for easy subgraph extraction using user-defined element filter callbacks. An element filter callback defines a set of conditions that a vertex (or an edge) must meet in order to be kept in the subgraph. Users can define their own element filtering by implementing the VertexFilterCallback and EdgeFilterCallback API interfaces.

The following code fragment implements a VertexFilterCallback that keeps a vertex only if its origin is the United States and it does not have a political role.

/**

* VertexFilterCallback to retrieve a vertex from the United States

* that does not have a political role

*/

private static class NonPoliticianFilterCallback

implements VertexFilterCallback

{

@Override

public boolean keepVertex(OracleVertexBase vertex)

{

String country = vertex.getProperty("country");

String role = vertex.getProperty("role");

if (country != null && country.equals("United States")) {

if (role == null || !role.toLowerCase().contains("political")) {

return true;

}

}

return false;

}

public static NonPoliticianFilterCallback getInstance()

{

return new NonPoliticianFilterCallback();

}

}

The following code fragment implements an EdgeFilterCallback that uses the VertexFilterCallback to keep only edges connected to the given input vertex, and whose connections are not politicians and come from the United States.

/**

* EdgeFilterCallback to retrieve all edges connected to an input

* vertex with "collaborates" label, and whose vertex is from the

* United States with a role different than political

*/

private static class CollaboratorsFilterCallback

implements EdgeFilterCallback

{

private VertexFilterCallback m_vfc;

private Vertex m_startV;

public CollaboratorsFilterCallback(VertexFilterCallback vfc,

Vertex v)

{

m_vfc = vfc;

m_startV = v;

}

@Override

public boolean keepEdge(OracleEdgeBase edge)

{

if ("collaborates".equals(edge.getLabel())) {

if (edge.getVertex(Direction.IN).equals(m_startV) &&

m_vfc.keepVertex((OracleVertex)

edge.getVertex(Direction.OUT))) {

return true;

}

else if (edge.getVertex(Direction.OUT).equals(m_startV) &&

m_vfc.keepVertex((OracleVertex)

edge.getVertex(Direction.IN))) {

return true;

}

}

return false;

}

public static CollaboratorsFilterCallback

getInstance(VertexFilterCallback vfc, Vertex v)

{

return new CollaboratorsFilterCallback(vfc, v);

}

}

Using the filter callbacks previously defined, the following code fragment loads a property graph, creates an instance of the filter callbacks and later gets all of Barack Obama’s collaborators who are not politicians and come from the United States.

OraclePropertyGraph opg = OraclePropertyGraph.getInstance( args, szGraphName);

// Clear existing vertices/edges in the property graph

opg.clearRepository();

String szOPVFile = "../../data/connections.opv";

String szOPEFile = "../../data/connections.ope";

// This object will handle parallel data loading

OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();

opgdl.loadData(opg, szOPVFile, szOPEFile, dop);

// VertexFilterCallback to retrieve all people from the United States

// who are not politicians

NonPoliticianFilterCallback npvfc = NonPoliticianFilterCallback.getInstance();

// Initial vertex: Barack Obama

Vertex v = opg.getVertices("name", "Barack Obama").iterator().next();

// EdgeFilterCallback to retrieve all collaborators of Barack Obama

// from the United States who are not politicians

CollaboratorsFilterCallback cefc = CollaboratorsFilterCallback.getInstance(npvfc, v);

Iterable<Edge> obamaCollabs = opg.getEdges((String[])null /* Match any of the properties */,

cefc /* Match the EdgeFilterCallback */);

Iterator<Edge> iter = obamaCollabs.iterator();

System.out.println("\n\n--------Collaborators of Barack Obama from " +

" the US and non-politician\n\n");

long countV = 0;

while (iter.hasNext()) {

Edge edge = iter.next(); // get the edge

// check if Obama is the IN vertex

if (edge.getVertex(Direction.IN).equals(v)) {

System.out.println(edge.getVertex(Direction.OUT) + "(Edge ID: " +

edge.getId() + ")"); // get out vertex

}

else {

System.out.println(edge.getVertex(Direction.IN) + "(Edge ID: " +

edge.getId() + ")"); // get in vertex

}

countV++;

}

By default, all read operations, such as getting all vertices or all edges (including the parallel variants), use the filter callbacks associated with the property graph through the methods opg.setVertexFilterCallback(vfc) and opg.setEdgeFilterCallback(efc). If no filter callback is set, then all the vertices (or edges) are retrieved.

The following code fragment uses the default edge filter callback set on the property graph to retrieve the edges.

    // VertexFilterCallback to retrieve all people from the United States
    // who are not politicians
    NonPoliticianFilterCallback npvfc = NonPoliticianFilterCallback.getInstance();

    // Initial vertex: Barack Obama
    Vertex v = opg.getVertices("name", "Barack Obama").iterator().next();

    // EdgeFilterCallback to retrieve all collaborators of Barack Obama
    // from the United States who are not politicians
    CollaboratorsFilterCallback cefc = CollaboratorsFilterCallback.getInstance(npvfc, v);

    // Register the edge filter callback as the default for the graph
    opg.setEdgeFilterCallback(cefc);

    Iterable<Edge> obamaCollabs = opg.getEdges();
    Iterator<Edge> iter = obamaCollabs.iterator();

    System.out.println("\n\n--------Collaborators of Barack Obama from " +
                       " the US and non-politician\n\n");
    long countV = 0;
    while (iter.hasNext()) {
        Edge edge = iter.next(); // get the edge
        // check if obama is the IN vertex
        if (edge.getVertex(Direction.IN).equals(v)) {
            // get out vertex
            System.out.println(edge.getVertex(Direction.OUT) + "(Edge ID: " + edge.getId() + ")");
        } else {
            // get in vertex
            System.out.println(edge.getVertex(Direction.IN) + "(Edge ID: " + edge.getId() + ")");
        }
        countV++;
    }
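The default-callback behavior described above can be illustrated without a database. The following is a minimal sketch, not the Oracle API: the class DefaultFilterSketch, its Edge type, and the sample data are all hypothetical stand-ins, and a java.util.function.Predicate plays the role of the edge filter callback. It only shows the rule that a read with no default filter returns every edge, while a read after a default filter is set returns only the matching edges.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical stand-in for a property graph; this is NOT the Oracle API.
class DefaultFilterSketch {

    // Minimal edge model: an ID plus the names of its two endpoint vertices.
    static final class Edge {
        final long id;
        final String out;
        final String in;

        Edge(long id, String out, String in) {
            this.id = id;
            this.out = out;
            this.in = in;
        }
    }

    private final List<Edge> edges = new ArrayList<>();

    // Graph-level default filter; null means that no filter has been set.
    private Predicate<Edge> defaultEdgeFilter;

    void addEdge(long id, String out, String in) {
        edges.add(new Edge(id, out, in));
    }

    // Plays the role of opg.setEdgeFilterCallback(efc).
    void setEdgeFilter(Predicate<Edge> filter) {
        this.defaultEdgeFilter = filter;
    }

    // Plays the role of opg.getEdges(): applies the default filter, if any.
    List<Edge> getEdges() {
        List<Edge> result = new ArrayList<>();
        for (Edge e : edges) {
            if (defaultEdgeFilter == null || defaultEdgeFilter.test(e)) {
                result.add(e);
            }
        }
        return result;
    }

    // Builds a three-edge sample graph with made-up data.
    static DefaultFilterSketch sample() {
        DefaultFilterSketch g = new DefaultFilterSketch();
        g.addEdge(1L, "Barack Obama", "Person A");
        g.addEdge(2L, "Person B", "Barack Obama");
        g.addEdge(3L, "Person A", "Person B");
        return g;
    }

    public static void main(String[] args) {
        DefaultFilterSketch g = sample();
        System.out.println("Without a default filter: " + g.getEdges().size() + " edges");

        // Keep only the edges that touch the start vertex.
        g.setEdgeFilter(e -> e.out.equals("Barack Obama") || e.in.equals("Barack Obama"));
        System.out.println("With a default filter: " + g.getEdges().size() + " edges");
    }
}
```

Setting the filter once on the graph keeps later read calls short, at the cost of making every subsequent read depend on that shared state.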


2.5.5 Using Optimization Flags on Reads over Property Graph Data

Oracle Spatial and Graph supports optimization flags that improve graph iteration performance. Optimization flags allow vertices (or edges) to be processed as objects that carry no information or only minimal information, such as the ID, the label, and/or the incoming/outgoing vertices. This reduces the time required to process each vertex (or edge) during iteration.

The following table shows the optimization flags available when processing vertices (or edges) in a property graph.

| Optimization Flag | Description |
|---|---|
| DO_NOT_CREATE_OBJECT | Use a predefined constant object when processing vertices or edges. |
| JUST_EDGE_ID | Construct edge objects with ID only when processing edges. |
| JUST_LABEL_EDGE_ID | Construct edge objects with ID and label only when processing edges. |
| JUST_LABEL_VERTEX_EDGE_ID | Construct edge objects with ID, label, and in/out vertex IDs only when processing edges. |
| JUST_VERTEX_EDGE_ID | Construct edge objects with just ID and in/out vertex IDs when processing edges. |
| JUST_VERTEX_ID | Construct vertex objects with ID only when processing vertices. |
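To make the edge-related rows of the table concrete, the following sketch models which fields each flag would populate. It is an illustration under stated assumptions, not the Oracle implementation: the class OptFlagSketch, the EdgeFlag enum, the LightEdge type, and the materialize method are hypothetical, and only the flag names and their meanings are taken from the table.

```java
// Hypothetical model of the edge-related flags; this is NOT the Oracle API.
class OptFlagSketch {

    // Each flag records whether the label and the in/out vertex IDs are kept.
    enum EdgeFlag {
        JUST_EDGE_ID(false, false),
        JUST_LABEL_EDGE_ID(true, false),
        JUST_VERTEX_EDGE_ID(false, true),
        JUST_LABEL_VERTEX_EDGE_ID(true, true);

        final boolean withLabel;
        final boolean withVertexIds;

        EdgeFlag(boolean withLabel, boolean withVertexIds) {
            this.withLabel = withLabel;
            this.withVertexIds = withVertexIds;
        }
    }

    // Lightweight edge: any field that the flag does not request stays null.
    static final class LightEdge {
        final long id;
        final String label;
        final Long outVertexId;
        final Long inVertexId;

        LightEdge(long id, String label, Long outVertexId, Long inVertexId) {
            this.id = id;
            this.label = label;
            this.outVertexId = outVertexId;
            this.inVertexId = inVertexId;
        }

        @Override
        public String toString() {
            return "LightEdge[id=" + id + ", label=" + label
                    + ", out=" + outVertexId + ", in=" + inVertexId + "]";
        }
    }

    // Builds an edge object from stored values, keeping only what the flag asks for.
    static LightEdge materialize(long id, String label, long outId, long inId, EdgeFlag flag) {
        return new LightEdge(
                id,
                flag.withLabel ? label : null,
                flag.withVertexIds ? Long.valueOf(outId) : null,
                flag.withVertexIds ? Long.valueOf(inId) : null);
    }

    public static void main(String[] args) {
        // Print the same made-up edge as seen under each flag.
        for (EdgeFlag flag : EdgeFlag.values()) {
            System.out.println(flag + " -> " + materialize(1000L, "collaborates", 1L, 2L, flag));
        }
    }
}
```

In this model, key/value properties are never populated under any flag, which mirrors the idea that the flags trade completeness of each object for cheaper iteration.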

The following code fragment uses a set of optimization flags to retrieve only the IDs of the vertices and edges in the property graph. The objects retrieved by reading all vertices and edges include only the IDs, with no key/value properties or additional information.

    import oracle.pg.common.OraclePropertyGraphBase.OptimizationFlag;

    OraclePropertyGraph opg = OraclePropertyGraph.getInstance(args, szGraphName);

    // Clear existing vertices/edges in the property graph
    opg.clearRepository();

    String szOPVFile = "../../data/connections.opv";
    String szOPEFile = "../../data/connections.ope";

    // This object will handle parallel data loading
    OraclePropertyGraphDataLoader opgdl = OraclePropertyGraphDataLoader.getInstance();
    opgdl.loadData(opg, szOPVFile, szOPEFile, dop);

    // Optimization flag to retrieve only vertices IDs
    OptimizationFlag optFlagVertex = OptimizationFlag.JUST_VERTEX_ID;

    // Optimization flag to retrieve only edges IDs
    OptimizationFlag optFlagEdge = OptimizationFlag.JUST_EDGE_ID;

    // Print all vertices
    Iterator<Vertex> vertices = opg.getVertices((String[]) null /* Match any of the properties */,
                                                null /* Match the VertexFilterCallback */,
                                                optFlagVertex /* optimization flag */).iterator();

    System.out.println("----- Vertices IDs----");
    long vCount = 0;
    while (vertices.hasNext()) {
        // The iterator is typed as Iterator<Vertex>, so use Vertex here
        Vertex v = vertices.next();
        System.out.println((Long) v.getId());
        vCount++;
    }
    System.out.println("Vertices found: " + vCount);

    // Print all edges
    Iterator<Edge> edges = opg.getEdges((String[]) null /* Match any of the properties */,
                                        null /* Match the EdgeFilterCallback */,
                                        optFlagEdge /* optimization flag */).iterator();

    System.out.println("----- Edges ----");
    long eCount = 0;
    while (edges.hasNext()) {
        Edge e = edges.next();
        System.out.println((Long) e.getId());
        eCount++;
    }
    System.out.println("Edges found: " + eCount);

By default, all read operations, such as getting all vertices or all edges (including the parallel variants), use the optimization flags associated with the property graph through the methods opg.setDefaultVertexOptFlag(optFlagVertex) and opg.setDefaultEdgeOptFlag(optFlagEdge). If no optimization flags are defined for processing vertices and edges, then all the information about the vertices and edges is retrieved.

The following code fragment uses the default optimization flags set on the property graph to retrieve only the IDs of its vertices and edges.

    import oracle.pg.common.OraclePropertyGraphBase.OptimizationFlag;

    // Optimization flag to retrieve only vertices IDs
    OptimizationFlag optFlagVertex = OptimizationFlag.JUST_VERTEX_ID;

    // Optimization flag to retrieve only edges IDs
    OptimizationFlag optFlagEdge = OptimizationFlag.JUST_EDGE_ID;

    // Register the flags as the defaults for the graph
    opg.setDefaultVertexOptFlag(optFlagVertex);
    opg.setDefaultEdgeOptFlag(optFlagEdge);

    // Print all vertices
    Iterator<Vertex> vertices = opg.getVertices().iterator();

    System.out.println("----- Vertices IDs----");
    long vCount = 0;
    while (vertices.hasNext()) {
        // The iterator is typed as Iterator<Vertex>, so use Vertex here
        Vertex v = vertices.next();
        System.out.println((Long) v.getId());
        vCount++;
    }
    System.out.println("Vertices found: " + vCount);

    // Print all edges
    Iterator<Edge> edges = opg.getEdges().iterator();

    System.out.println("----- Edges ----");
    long eCount = 0;
    while (edges.hasNext()) {
        Edge e = edges.next();
        System.out.println((Long) e.getId());
        eCount++;
    }
    System.out.println("Edges found: " + eCount);


2.5.6 Adding and Removing Attributes of a Property Graph Subgraph

Oracle Spatial and Graph supports updating attributes (key/value pairs) on a subgraph of vertices and/or edges by using a user-customized operation callback. An operation callback defines a set of conditions that a vertex (or an edge) must meet in order to be updated, that is, to have the given attribute and value either added or removed.

You can define your own attribute operations by implementing the VertexOpCallback and EdgeOpCallback API interfaces. You must override the needOp method, which defines the conditions to be satisfied by the vertices (or edges) to be included in the update operation, as well as the getAttributeKeyName and getAttributeKeyValue methods, which return the key name and value, respectively, to be used when updating the elements.

The following code fragment implements a VertexOpCallback that operates over the obamaCollaborator attribute associated only with Barack Obama collaborators. The value of this property is specified based on the role of the collaborators.

    private static class CollaboratorsVertexOpCallback implements VertexOpCallback {

        private OracleVertexBase m_obama;
        private List<Vertex> m_obamaCollaborators;

        public CollaboratorsVertexOpCallback(OraclePropertyGraph opg) {
            // Get a list of Barack Obama's collaborators
            m_obama = (OracleVertexBase) opg.getVertices("name", "Barack Obama")
                                            .iterator().next();

            Iterable<Vertex> iter = m_obama.getVertices(Direction.BOTH, "collaborates");
            m_obamaCollaborators = OraclePropertyGraphUtils.listify(iter);
        }

        public static CollaboratorsVertexOpCallback getInstance(OraclePropertyGraph opg) {
            return new CollaboratorsVertexOpCallback(opg);
        }

        /**
         * Add attribute if and only if the vertex is a collaborator of Barack
         * Obama
         */
        @Override
        public boolean needOp(OracleVertexBase v) {
            return m_obamaCollaborators != null && m_obamaCollaborators.contains(v);
        }

        @Override
        public String getAttributeKeyName(OracleVertexBase v) {
            return "obamaCollaborator";
        }

        /**
         * Define the property's value based on the vertex role
         */
        @Override
        public Object getAttributeKeyValue(OracleVertexBase v) {
            String role = v.getProperty("role");
            role = role.toLowerCase();

            if (role.contains("political")) {
                return "political";
            } else if (role.contains("actor") || role.contains("singer") ||
                       role.contains("actress") || role.contains("writer") ||
                       role.contains("producer") || role.contains("director")) {
                return "arts";
            } else if (role.contains("player")) {
                return "sports";
            } else if (role.contains("journalist")) {
                return "journalism";
            } else if (role.contains("business") || role.contains("economist")) {
                return "business";
            } else if (role.contains("philanthropist")) {
                return "philanthropy";
            }
            return " ";
        }
    }
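To show how the three callback methods cooperate during an update, the following sketch models a graph that drives an operation callback over its vertices. It is a simplified illustration and not the Oracle API: the class OpCallbackSketch, the VertexOp interface, the addAttribute and removeAttribute helpers, the collaborator marker property, and the sample data are all hypothetical, and a java.util.Map stands in for a vertex. Only the method names needOp, getAttributeKeyName, and getAttributeKeyValue follow the interface described above.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of an operation callback; this is NOT the Oracle API.
class OpCallbackSketch {

    // Stand-in for VertexOpCallback, with a Map playing the role of a vertex.
    interface VertexOp {
        boolean needOp(Map<String, Object> v);
        String getAttributeKeyName(Map<String, Object> v);
        Object getAttributeKeyValue(Map<String, Object> v);
    }

    // Adds the attribute to every vertex accepted by needOp; returns the update count.
    static int addAttribute(List<Map<String, Object>> vertices, VertexOp op) {
        int updated = 0;
        for (Map<String, Object> v : vertices) {
            if (op.needOp(v)) {
                v.put(op.getAttributeKeyName(v), op.getAttributeKeyValue(v));
                updated++;
            }
        }
        return updated;
    }

    // Removes the attribute from every accepted vertex that has it; returns the update count.
    static int removeAttribute(List<Map<String, Object>> vertices, VertexOp op) {
        int updated = 0;
        for (Map<String, Object> v : vertices) {
            String key = op.getAttributeKeyName(v);
            if (op.needOp(v) && v.containsKey(key)) {
                v.remove(key);
                updated++;
            }
        }
        return updated;
    }

    // Sample callback: tags vertices marked as collaborators, based on their role.
    static VertexOp collaboratorOp() {
        return new VertexOp() {
            @Override
            public boolean needOp(Map<String, Object> v) {
                return Boolean.TRUE.equals(v.get("collaborator"));
            }

            @Override
            public String getAttributeKeyName(Map<String, Object> v) {
                return "obamaCollaborator";
            }

            @Override
            public Object getAttributeKeyValue(Map<String, Object> v) {
                String role = String.valueOf(v.get("role")).toLowerCase();
                return role.contains("actor") ? "arts" : " ";
            }
        };
    }

    // Creates one made-up vertex as a mutable map of properties.
    static Map<String, Object> vertex(String name, String role, boolean collaborator) {
        Map<String, Object> v = new HashMap<>();
        v.put("name", name);
        v.put("role", role);
        v.put("collaborator", collaborator);
        return v;
    }

    // Builds three made-up vertices; only the first two are collaborators.
    static List<Map<String, Object>> sample() {
        List<Map<String, Object>> vertices = new ArrayList<>();
        vertices.add(vertex("Person A", "Actor", true));
        vertices.add(vertex("Person B", "Economist", true));
        vertices.add(vertex("Person C", "Actor", false));
        return vertices;
    }

    public static void main(String[] args) {
        List<Map<String, Object>> vertices = sample();
        System.out.println("Added to " + addAttribute(vertices, collaboratorOp()) + " vertices");
        System.out.println("Removed from " + removeAttribute(vertices, collaboratorOp()) + " vertices");
    }
}
```

Keeping the selection rule (needOp) separate from the key and value rules lets the same callback serve both the add and the remove operation.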

The following code fragment implements an EdgeOpCallback that operates over the obamaFeud attribute associated only with Barack Obama feuds. The value of this property is specified based on the role of the collaborators.