4 Tables and Table Clusters

This chapter provides an introduction to schema objects and discusses tables, which are the most common type of schema object.

This chapter contains the following sections:

Introduction to Schema Objects

A database schema is a logical container for data structures, called schema objects. Examples of schema objects are tables and indexes. You create and manipulate schema objects with SQL.

This section contains the following topics:

See Also:

Oracle Database Security Guide to learn more about users and privileges

About Common and Local User Accounts

A database user account has a password and specific database privileges.

User Accounts and Schemas

Each user account owns a single schema, which has the same name as the user. The schema contains the data for the user owning the schema. For example, the hr user account owns the hr schema, which contains schema objects such as the employees table. In a production database, the schema owner usually represents a database application rather than a person.

Within a schema, each schema object of a particular type has a unique name. For example, hr.employees refers to the table employees in the hr schema. The following figure depicts a schema owner named hr and schema objects within the hr schema.
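As a minimal sketch of how schema-qualified names resolve, the following statements assume a session that has the SELECT privilege on the employees table of the hr sample schema. The first query names the schema explicitly; the second relies on the session's current schema so that the unqualified name resolves to hr.employees.

```sql
-- Refer to the table with a schema-qualified name.
SELECT employee_id, last_name
FROM   hr.employees
WHERE  employee_id = 100;

-- Make hr the default schema for name resolution in this session.
-- This changes name resolution only; it grants no privileges.
ALTER SESSION SET CURRENT_SCHEMA = hr;

SELECT employee_id, last_name
FROM   employees
WHERE  employee_id = 100;
```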

Common and Local User Accounts

If a user account owns objects that define the database, then this user account is common. User accounts that are not Oracle-supplied are either local or common. A CDB common user is a common user that is created in the CDB root. An application common user is a user that is created in an application root, and is common only within this application container.

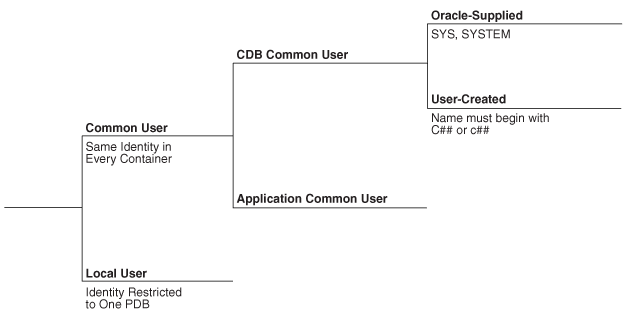

The following graphic shows the possible user account types in a CDB.

A CDB common user can connect to any container in the CDB to which it has sufficient privileges. In contrast, an application common user can only connect to the application root in which it was created, or a PDB that is plugged in to this application root, depending on its privileges.

Common User Accounts

Within the context of either the system container (CDB) or an application container, a common user is a database user that has the same identity in the root and in every existing and future PDB within this container.

Every common user can connect to and perform operations within the root of its container, and within any PDB in which it has sufficient privileges. Some administrative tasks must be performed by a common user. Examples include creating a PDB and unplugging a PDB.

For example, SYSTEM is a CDB common user with DBA privileges. Thus, SYSTEM can connect to the CDB root and any PDB in the database. You might create a common user saas_sales_admin in the saas_sales application container. In this case, the saas_sales_admin user could only connect to the saas_sales application root or to an application PDB within the saas_sales application container.

Every common user is either Oracle-supplied or user-created. Examples of Oracle-supplied common users are SYS and SYSTEM. Every user-created common user is either a CDB common user, or an application common user.

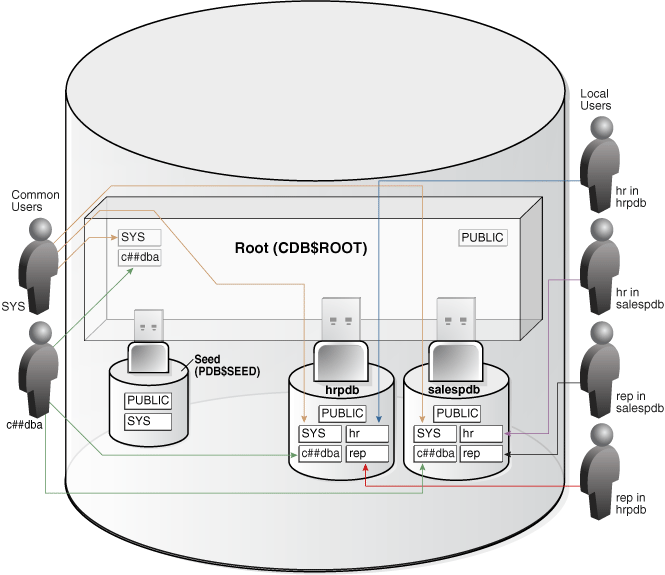

The following figure shows sample users and schemas in two PDBs: hrpdb and salespdb. SYS and c##dba are CDB common users who have schemas in CDB$ROOT, hrpdb, and salespdb. Local users hr and rep exist in hrpdb. Local users hr and rep also exist in salespdb.


Characteristics of Common Users

Every common user is either Oracle-supplied or user-created.

Common user accounts have the following characteristics:

- A common user can log in to any container (including CDB$ROOT) in which it has the CREATE SESSION privilege.

  A common user need not have the same privileges in every container. For example, the c##dba user may have the privilege to create a session in hrpdb and in the root, but not to create a session in salespdb. Because a common user with the appropriate privileges can switch between containers, a common user in the root can administer PDBs.

- An application common user does not have the CREATE SESSION privilege in any container outside its own application container.

  Thus, an application common user is restricted to its own application container. For example, the application common user created in the saas_sales application can connect only to the application root and the PDBs in the saas_sales application container.

- The names of user-created CDB common users must follow the naming rules for other database users. Additionally, the names must begin with the characters specified by the COMMON_USER_PREFIX initialization parameter, which are c## or C## by default. Oracle-supplied common user names and user-created application common user names do not have this restriction.

  No local user name may begin with the characters c## or C##.

- Every common user is uniquely named across all PDBs within the container (either the system container or a specific application container) in which it was created.

A CDB common user is defined in the CDB root, but must be able to connect to every PDB with the same identity. An application common user resides in the application root, and may connect to every application PDB in its container with the same identity.
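The characteristics above can be sketched as follows. This is an illustration only: it assumes a session connected to the CDB root as a suitably privileged common user, the default COMMON_USER_PREFIX of c##, and a PDB named hrpdb. The user name c##dba follows the example in this section, and password is a placeholder.

```sql
-- Create a CDB common user. CONTAINER = ALL defines the user in the root
-- and in every existing and future PDB.
CREATE USER c##dba IDENTIFIED BY password CONTAINER = ALL;

-- Grant CREATE SESSION in the root only
-- (CONTAINER = CURRENT is the default for GRANT).
GRANT CREATE SESSION TO c##dba;

-- Switch to hrpdb and grant the privilege locally there as well.
-- No grant is made in salespdb, so c##dba cannot log in to that PDB.
ALTER SESSION SET CONTAINER = hrpdb;
GRANT CREATE SESSION TO c##dba;
```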

The following figure shows sample users and schemas in two PDBs: hrpdb and salespdb. SYS and c##dba are CDB common users who have schemas in CDB$ROOT, hrpdb, and salespdb. Local users hr and rep exist in hrpdb. Local users hr and rep also exist in salespdb.

See Also:

- Oracle Database Security Guide to learn about common user accounts

- Oracle Database Reference to learn about COMMON_USER_PREFIX

SYS and SYSTEM Accounts

All Oracle databases include default common user accounts with administrative privileges.

Administrative accounts are highly privileged and are intended only for DBAs authorized to perform tasks such as starting and stopping the database, managing memory and storage, creating and managing database users, and so on.

The SYS common user account is automatically created when a database is created. This account can perform all database administrative functions. The SYS schema stores the base tables and views for the data dictionary. These base tables and views are critical for the operation of Oracle Database. Tables in the SYS schema are manipulated only by the database and must never be modified by any user.

The SYSTEM administrative account is also automatically created when a database is created. The SYSTEM schema stores additional tables and views that display administrative information, and internal tables and views used by various Oracle Database options and tools. Never use the SYSTEM schema to store tables of interest to nonadministrative users.

See Also:

- Oracle Database Security Guide to learn about user accounts

- Oracle Database Administrator’s Guide to learn about SYS, SYSTEM, and other administrative accounts

Local User Accounts

A local user is a database user that is not common and can operate only within a single PDB.

Local users have the following characteristics:

- A local user is specific to a PDB and may own a schema in this PDB.

  In the example shown in "Characteristics of Common Users", local user hr on hrpdb owns the hr schema. On salespdb, local user rep owns the rep schema, and local user hr owns the hr schema.

- A local user can administer a PDB, including opening and closing it.

  A common user with SYSDBA privileges can grant SYSDBA privileges to a local user. In this case, the privileged user remains local.

- A local user in one PDB cannot log in to another PDB or to the CDB root.

  For example, when local user hr connects to hrpdb, hr cannot access objects in the sh schema that reside in the salespdb database without using a database link. In the same way, when local user sh connects to the salespdb PDB, sh cannot access objects in the hr schema that resides in hrpdb without using a database link.

- The name of a local user must not begin with the characters c## or C##.

- The name of a local user must be unique only within its PDB.

  The user name and the PDB in which that user schema is contained determine a unique local user. "Characteristics of Common Users" shows that a local user and schema named rep exist on hrpdb. A completely independent local user and schema named rep exist on the salespdb PDB.
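A minimal sketch of creating such local users, assuming a session started in the CDB root by a common user with the necessary privileges, and PDBs named hrpdb and salespdb as in the example. The password is a placeholder.

```sql
-- Create local user rep in hrpdb.
ALTER SESSION SET CONTAINER = hrpdb;
CREATE USER rep IDENTIFIED BY password CONTAINER = CURRENT;
GRANT CREATE SESSION TO rep;

-- Create an independent local user with the same name in salespdb.
-- The two rep accounts share nothing but the name.
ALTER SESSION SET CONTAINER = salespdb;
CREATE USER rep IDENTIFIED BY password CONTAINER = CURRENT;
GRANT CREATE SESSION TO rep;
```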

The following table describes a scenario involving the CDB in "Characteristics of Common Users". Each row describes an action that occurs after the action in the preceding row. Common user SYSTEM creates local users in two PDBs.

Table 4-1 Local Users in a CDB

| Operation | Description |
|---|---|

See Also:

Oracle Database Security Guide to learn about local user accounts

Common and Local Objects

A common object is defined in either the CDB root or an application root, and can be referenced using metadata links or object links. A local object is every object that is not a common object.

Database-supplied common objects are defined in CDB$ROOT and cannot be changed. Oracle Database does not support creation of common objects in CDB$ROOT.

You can create most schema objects—such as tables, views, PL/SQL and Java program units, sequences, and so on—as common objects in an application root. If the object exists in an application root, then it is called an application common object.

A local user can own a common object. Also, a common user can own a local object, but only when the object is not data-linked or metadata-linked, and is also neither a metadata link nor a data link.

See Also:

Oracle Database Security Guide to learn more about privilege management for common objects

Schema Object Types

Oracle SQL enables you to create and manipulate many types of schema objects.

The principal types of schema objects are shown in the following table.

Table 4-2 Schema Objects

| Object | Description | To Learn More |
|---|---|---|
| Table | A table stores data in rows. Tables are the most important schema objects in a relational database. | |
| Indexes | Indexes are schema objects that contain an entry for each indexed row of the table or table cluster and provide direct, fast access to rows. Oracle Database supports several types of index. An index-organized table is a table in which the data is stored in an index structure. | |
| Partitions | Partitions are pieces of large tables and indexes. Each partition has its own name and may optionally have its own storage characteristics. | |
| Views | Views are customized presentations of data in one or more tables or other views. You can think of them as stored queries. Views do not actually contain data. | |
| Sequences | A sequence is a user-created object that can be shared by multiple users to generate integers. Typically, you use sequences to generate primary key values. | |
| Dimensions | A dimension defines a parent-child relationship between pairs of column sets, where all the columns of a column set must come from the same table. Dimensions are commonly used to categorize data such as customers, products, and time. | |
| Synonyms | A synonym is an alias for another schema object. Because a synonym is simply an alias, it requires no storage other than its definition in the data dictionary. | |
| PL/SQL subprograms and packages | PL/SQL is the Oracle procedural extension of SQL. A PL/SQL subprogram is a named PL/SQL block that can be invoked with a set of parameters. A PL/SQL package groups logically related PL/SQL types, variables, and subprograms. | |

Other types of objects are also stored in the database and can be created and manipulated with SQL statements but are not contained in a schema. These objects include database user accounts, roles, contexts, and dictionary objects.
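As an illustration of three of the object types listed above, the following sketch creates a view, a sequence, and a synonym. It assumes a session connected to a schema that contains the employees table and has the CREATE VIEW, CREATE SEQUENCE, and CREATE SYNONYM privileges. The object names emp_names_v, demo_id_seq, and emp are hypothetical.

```sql
-- A view is a stored query; it holds no data of its own.
CREATE VIEW emp_names_v AS
  SELECT employee_id, first_name, last_name
  FROM   employees;

-- A sequence generates integers, typically for primary key values.
CREATE SEQUENCE demo_id_seq START WITH 1000 INCREMENT BY 1;

SELECT demo_id_seq.NEXTVAL FROM dual;

-- A synonym is an alias; only its definition is stored.
CREATE SYNONYM emp FOR employees;

SELECT COUNT(*) FROM emp;
```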

See Also:

- Oracle Database Administrator’s Guide to learn how to manage schema objects

- Oracle Database SQL Language Reference for more about schema objects and database objects

Schema Object Storage

Some schema objects store data in a type of logical storage structure called a segment. For example, a nonpartitioned heap-organized table or an index creates a segment.

Other schema objects, such as views and sequences, consist of metadata only. This topic describes only schema objects that have segments.

Oracle Database stores a schema object logically within a tablespace. There is no relationship between schemas and tablespaces: a tablespace can contain objects from different schemas, and the objects for a schema can be contained in different tablespaces. The data of each object is physically contained in one or more data files.

The following figure shows a possible configuration of table and index segments, tablespaces, and data files. The data segment for one table spans two data files, which are both part of the same tablespace. A segment cannot span multiple tablespaces.

Figure 4-5 Segments, Tablespaces, and Data Files
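One way to observe this mapping is to query the data dictionary. The following sketch assumes the standard USER_SEGMENTS and DBA_DATA_FILES views; querying the second view requires privileges that an ordinary schema owner might not have.

```sql
-- Segments owned by the current user and the tablespace that holds each one.
SELECT segment_name, segment_type, tablespace_name, bytes
FROM   user_segments
ORDER  BY segment_name;

-- Data files that make up each tablespace. A tablespace with several
-- files can spread one segment across them.
SELECT tablespace_name, file_name
FROM   dba_data_files
ORDER  BY tablespace_name, file_name;
```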


See Also:

- "Logical Storage Structures" to learn about tablespaces and segments

- Oracle Database Administrator’s Guide to learn how to manage storage for schema objects

Schema Object Dependencies

Some schema objects refer to other objects, creating a schema object dependency.

For example, a view contains a query that references tables or views, while a PL/SQL subprogram invokes other subprograms. If the definition of object A references object B, then A is a dependent object on B, and B is a referenced object for A.

Oracle Database provides an automatic mechanism to ensure that a dependent object is always up to date with respect to its referenced objects. When you create a dependent object, the database tracks dependencies between the dependent object and its referenced objects. When a referenced object changes in a way that might affect a dependent object, the database marks the dependent object invalid. For example, if a user drops a table, no view based on the dropped table is usable.

An invalid dependent object must be recompiled against the new definition of a referenced object before the dependent object is usable. Recompilation occurs automatically when the invalid dependent object is referenced.
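The dependency information that the database tracks is visible in the data dictionary. As a sketch, assuming the standard USER_DEPENDENCIES and USER_OBJECTS views, the following queries list the recorded dependencies for the current schema and any objects currently marked invalid.

```sql
-- Each row pairs a dependent object (name, type) with an object it references.
SELECT name, type, referenced_owner, referenced_name, referenced_type
FROM   user_dependencies
ORDER  BY name, referenced_name;

-- Objects that must be recompiled before they can be used again.
SELECT object_name, object_type
FROM   user_objects
WHERE  status = 'INVALID'
ORDER  BY object_name;
```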

As an illustration of how schema objects can create dependencies, the following sample script creates a table test_table and then a procedure that queries this table:

    CREATE TABLE test_table ( col1 INTEGER, col2 INTEGER );

    CREATE OR REPLACE PROCEDURE test_proc
    AS
    BEGIN
      FOR x IN ( SELECT col1, col2 FROM test_table )
      LOOP
        -- process data
        NULL;
      END LOOP;
    END;
    /

The following query of the status of procedure test_proc shows that it is valid:

    SQL> SELECT OBJECT_NAME, STATUS FROM USER_OBJECTS WHERE OBJECT_NAME = 'TEST_PROC';

    OBJECT_NAME STATUS
    ----------- -------
    TEST_PROC   VALID

After adding the col3 column to test_table, the procedure is still valid because the procedure has no dependencies on this column:

    SQL> ALTER TABLE test_table ADD col3 NUMBER;

    Table altered.

    SQL> SELECT OBJECT_NAME, STATUS FROM USER_OBJECTS WHERE OBJECT_NAME = 'TEST_PROC';

    OBJECT_NAME STATUS
    ----------- -------
    TEST_PROC   VALID

However, changing the data type of the col1 column, which the test_proc procedure depends on, invalidates the procedure:

    SQL> ALTER TABLE test_table MODIFY col1 VARCHAR2(20);

    Table altered.

    SQL> SELECT OBJECT_NAME, STATUS FROM USER_OBJECTS WHERE OBJECT_NAME = 'TEST_PROC';

    OBJECT_NAME STATUS
    ----------- -------
    TEST_PROC   INVALID

Running or recompiling the procedure makes it valid again, as shown in the following example:

    SQL> EXECUTE test_proc

    PL/SQL procedure successfully completed.

    SQL> SELECT OBJECT_NAME, STATUS FROM USER_OBJECTS WHERE OBJECT_NAME = 'TEST_PROC';

    OBJECT_NAME STATUS
    ----------- -------
    TEST_PROC   VALID

See Also:

Oracle Database Administrator’s Guide and Oracle Database Development Guide to learn how to manage schema object dependencies

Sample Schemas

An Oracle database may include sample schemas, which are a set of interlinked schemas that enable Oracle documentation and Oracle instructional materials to illustrate common database tasks.

The hr sample schema contains information about employees, departments and locations, work histories, and so on. The following illustration depicts an entity-relationship diagram of the tables in hr. Most examples in this manual use objects from this schema.

See Also:

Oracle Database Sample Schemas to learn how to install the sample schemas

Overview of Tables

A table is the basic unit of data organization in an Oracle database.

A table describes an entity, which is something of significance about which information must be recorded. For example, an employee could be an entity.

Oracle Database tables fall into the following basic categories:

- Relational tables

  Relational tables have simple columns and are the most common table type. Example 4-1 shows a CREATE TABLE statement for a relational table.

- Object tables

  The columns correspond to the top-level attributes of an object type. See "Overview of Object Tables".

You can create a relational table with the following organizational characteristics:

- A heap-organized table does not store rows in any particular order. The CREATE TABLE statement creates a heap-organized table by default.

- An index-organized table orders rows according to the primary key values. For some applications, index-organized tables enhance performance and use disk space more efficiently. See "Overview of Index-Organized Tables".

- An external table is a read-only table whose metadata is stored in the database but whose data is stored outside the database. See "Overview of External Tables".

A table is either permanent or temporary. A permanent table definition and data persist across sessions. A temporary table definition persists in the same way as a permanent table definition, but the data exists only for the duration of a transaction or session. Temporary tables are useful in applications where a result set must be held temporarily, perhaps because the result is constructed by running multiple operations.
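The following sketch illustrates two of these variations: an index-organized table and a temporary table. The table and column names are hypothetical, and the statements assume a schema with the CREATE TABLE privilege.

```sql
-- Index-organized table: rows are stored in primary key order,
-- so a primary key is required.
CREATE TABLE demo_countries_iot
  ( country_id   CHAR(2)
      CONSTRAINT demo_countries_iot_pk PRIMARY KEY
  , country_name VARCHAR2(40)
  )
  ORGANIZATION INDEX;

-- Temporary table: the definition persists, but the rows last only
-- until the end of the transaction (ON COMMIT DELETE ROWS) or the
-- end of the session (ON COMMIT PRESERVE ROWS).
CREATE GLOBAL TEMPORARY TABLE demo_report_work
  ( item_id NUMBER
  , total   NUMBER(10,2)
  )
  ON COMMIT DELETE ROWS;
```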

This topic contains the following topics:

See Also:

Oracle Database Administrator’s Guide to learn how to manage tables

Columns

A table definition includes a table name and set of columns.

A column identifies an attribute of the entity described by the table. For example, the column employee_id in the employees table refers to the employee ID attribute of an employee entity.

In general, you give each column a column name, a data type, and a width when you create a table. For example, the data type for employee_id is NUMBER(6), indicating that this column can only contain numeric data up to 6 digits in width. The width can be predetermined by the data type, as with DATE.

Virtual Columns

A table can contain a virtual column, which unlike a nonvirtual column does not consume disk space.

The database derives the values in a virtual column on demand by computing a set of user-specified expressions or functions. For example, the virtual column income could be a function of the salary and commission_pct columns.
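A minimal sketch of such a column follows. The table name demo_emp_income and the formula for income are assumptions made for illustration; the source states only that income could be a function of salary and commission_pct.

```sql
CREATE TABLE demo_emp_income
  ( employee_id    NUMBER(6)
  , salary         NUMBER(8,2)
  , commission_pct NUMBER(2,2)
    -- Computed on demand from the other columns; not stored on disk.
  , income         NUMBER GENERATED ALWAYS AS
                     (salary + salary * NVL(commission_pct, 0)) VIRTUAL
  );

-- The virtual column is omitted from the insert and derived when queried.
INSERT INTO demo_emp_income (employee_id, salary, commission_pct)
VALUES (149, 10500, .2);

SELECT employee_id, income FROM demo_emp_income;
```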

See Also:

Oracle Database Administrator’s Guide to learn how to manage virtual columns

Invisible Columns

An invisible column is a user-specified column whose values are only visible when the column is explicitly specified by name. You can add an invisible column to a table without affecting existing applications, and make the column visible if necessary.

In general, invisible columns help migrate and evolve online applications. A use case might be an application that queries a three-column table with a SELECT * statement. Adding a fourth column to the table would break the application, which expects three columns of data. Adding the fourth column as an invisible column lets the application continue to function normally. A developer can then alter the application to handle a fourth column, and make the column visible when the application goes live.

The following example creates a table products with an invisible column count, and then makes the invisible column visible:

    CREATE TABLE products ( prod_id INT, count INT INVISIBLE );

    ALTER TABLE products MODIFY ( count VISIBLE );

See Also:

- Oracle Database Administrator’s Guide to learn how to manage invisible columns

- Oracle Database SQL Language Reference for more information about invisible columns

Rows

A row is a collection of column information corresponding to a record in a table.

For example, a row in the employees table describes the attributes of a specific employee: employee ID, last name, first name, and so on. After you create a table, you can insert, query, delete, and update rows using SQL.
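The four row operations can be sketched against a small table. The table demo_staff is hypothetical and is used instead of employees so that the statements do not depend on the constraints of the sample schema.

```sql
CREATE TABLE demo_staff ( staff_id NUMBER(6), last_name VARCHAR2(25) );

-- Insert a row.
INSERT INTO demo_staff (staff_id, last_name) VALUES (1, 'King');

-- Query the row.
SELECT staff_id, last_name FROM demo_staff WHERE staff_id = 1;

-- Update the row.
UPDATE demo_staff SET last_name = 'Kochhar' WHERE staff_id = 1;

-- Delete the row, then make the changes permanent.
DELETE FROM demo_staff WHERE staff_id = 1;
COMMIT;
```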

Example: CREATE TABLE and ALTER TABLE Statements

The Oracle SQL statement to create a table is CREATE TABLE.

Example 4-1 CREATE TABLE employees

The following example shows the CREATE TABLE statement for the employees table in the hr sample schema. The statement specifies columns such as employee_id, first_name, and so on, specifying a data type such as NUMBER or DATE for each column.

    CREATE TABLE employees
        ( employee_id    NUMBER(6)
        , first_name     VARCHAR2(20)
        , last_name      VARCHAR2(25)
             CONSTRAINT     emp_last_name_nn  NOT NULL
        , email          VARCHAR2(25)
             CONSTRAINT     emp_email_nn  NOT NULL
        , phone_number   VARCHAR2(20)
        , hire_date      DATE
             CONSTRAINT     emp_hire_date_nn  NOT NULL
        , job_id         VARCHAR2(10)
             CONSTRAINT     emp_job_nn  NOT NULL
        , salary         NUMBER(8,2)
        , commission_pct NUMBER(2,2)
        , manager_id     NUMBER(6)
        , department_id  NUMBER(4)
        , CONSTRAINT     emp_salary_min
                         CHECK (salary > 0)
        , CONSTRAINT     emp_email_uk
                         UNIQUE (email)
        ) ;

Example 4-2 ALTER TABLE employees

The following example shows an ALTER TABLE statement that adds integrity constraints to the employees table. Integrity constraints enforce business rules and prevent the entry of invalid information into tables.

    ALTER TABLE employees
    ADD ( CONSTRAINT     emp_emp_id_pk
                         PRIMARY KEY (employee_id)
        , CONSTRAINT     emp_dept_fk
                         FOREIGN KEY (department_id)
                         REFERENCES departments
        , CONSTRAINT     emp_job_fk
                         FOREIGN KEY (job_id)
                         REFERENCES jobs (job_id)
        , CONSTRAINT     emp_manager_fk
                         FOREIGN KEY (manager_id)
                         REFERENCES employees
        ) ;

Example 4-3 Rows in the employees Table

The following sample output shows 8 rows and 6 columns of the hr.employees table.

    EMPLOYEE_ID FIRST_NAME  LAST_NAME      SALARY COMMISSION_PCT DEPARTMENT_ID
    ----------- ----------- ------------- ------- -------------- -------------
            100 Steven      King            24000                           90
            101 Neena       Kochhar         17000                           90
            102 Lex         De Haan         17000                           90
            103 Alexander   Hunold           9000                           60
            107 Diana       Lorentz          4200                           60
            149 Eleni       Zlotkey         10500             .2            80
            174 Ellen       Abel            11000             .3            80
            178 Kimberely   Grant            7000            .15

The preceding output illustrates some of the following important characteristics of tables, columns, and rows:

- A row of the table describes the attributes of one employee: name, salary, department, and so on. For example, the first row in the output shows the record for the employee named Steven King.

- A column describes an attribute of the employee. In the example, the employee_id column is the primary key, which means that every employee is uniquely identified by employee ID. Any two employees are guaranteed not to have the same employee ID.

- A non-key column can contain rows with identical values. In the example, the salary value for employees 101 and 102 is the same: 17000.

- A foreign key column refers to a primary or unique key in the same table or a different table. In this example, the value of 90 in department_id corresponds to the department_id column of the departments table.

- A field is the intersection of a row and column. It can contain only one value. For example, the field for the department ID of employee 103 contains the value 60.

- A field can lack a value. In this case, the field is said to contain a null value. The value of the commission_pct column for employee 100 is null, whereas the value in the field for employee 149 is .2. A column allows nulls unless a NOT NULL or primary key integrity constraint has been defined on this column, in which case no row can be inserted without a value for this column.
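Because a null is the absence of a value, queries test for it with IS NULL rather than with the equality operator. A short sketch, assuming access to the employees table of the hr sample schema:

```sql
-- Employees whose commission_pct field has no value.
-- A condition such as commission_pct = NULL never evaluates to true.
SELECT employee_id, last_name
FROM   employees
WHERE  commission_pct IS NULL;

-- Substitute 0 for a missing commission when displaying the data.
SELECT employee_id, NVL(commission_pct, 0) AS commission_pct
FROM   employees;
```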

See Also:

Oracle Database SQL Language Reference for CREATE TABLE syntax and semantics

Oracle Data Types

Each column has a data type, which is associated with a specific storage format, constraints, and valid range of values. The data type of a value associates a fixed set of properties with the value.

These properties cause Oracle Database to treat values of one data type differently from values of another. For example, you can multiply values of the NUMBER data type, but not values of the RAW data type.

When you create a table, you must specify a data type for each of its columns. Each value subsequently inserted in a column assumes the column data type.

Oracle Database provides several built-in data types. The most commonly used data types fall into the following categories:

Other important categories of built-in types include raw, large objects (LOBs), and collections. PL/SQL has data types for constants and variables, which include BOOLEAN, reference types, composite types (records), and user-defined types.

See Also:

- Oracle Database SecureFiles and Large Objects Developer's Guide

- Oracle Database SQL Language Reference to learn about built-in SQL data types

- Oracle Database PL/SQL Packages and Types Reference to learn about PL/SQL data types

- Oracle Database Development Guide to learn how to use the built-in data types

Character Data Types

Character data types store alphanumeric data in strings. The most common character data type is VARCHAR2, which is the most efficient option for storing character data.

The byte values correspond to the character encoding scheme, generally called a character set. The database character set is established at database creation. Examples of character sets are 7-bit ASCII, EBCDIC, and Unicode UTF-8.

The length semantics of character data types are measurable in bytes or characters. The treatment of strings as a sequence of bytes is called byte semantics. This is the default for character data types. The treatment of strings as a sequence of characters is called character semantics. A character is a code point of the database character set.
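The difference between the two length semantics can be sketched as follows. The table semantics_demo is hypothetical. Whether a given string fits in the BYTE column depends on the database character set, so no output is shown.

```sql
CREATE TABLE semantics_demo
  ( name_b VARCHAR2(10 BYTE)   -- at most 10 bytes
  , name_c VARCHAR2(10 CHAR)   -- at most 10 characters, however many bytes each needs
  );

INSERT INTO semantics_demo (name_b, name_c) VALUES ('Lorentz', 'Lorentz');

-- LENGTH counts characters; LENGTHB counts bytes. The two results differ
-- only when the value contains multibyte characters.
SELECT LENGTH(name_c) AS char_count, LENGTHB(name_c) AS byte_count
FROM   semantics_demo;
```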

See Also:

- Oracle Database Globalization Support Guide to learn more about character sets

- Oracle Database 2 Day Developer's Guide for a brief introduction to data types

- Oracle Database Development Guide to learn how to choose a character data type

VARCHAR2 and CHAR Data Types

The VARCHAR2 data type stores variable-length character literals. A literal is a fixed data value. For example, 'LILA', 'St. George Island', and '101' are all character literals; 5001 is a numeric literal. Character literals are enclosed in single quotation marks so that the database can distinguish them from schema object names.

Note:

This manual uses the terms text literal, character literal, and string interchangeably.

When you create a table with a VARCHAR2 column, you specify a maximum string length. In Example 4-1, the last_name column has a data type of VARCHAR2(25), which means that any name stored in the column has a maximum of 25 bytes.

For each row, Oracle Database stores each value in the column as a variable-length field unless a value exceeds the maximum length, in which case the database returns an error. For example, in a single-byte character set, if you enter 10 characters for the last_name column value in a row, then the column in the row piece stores only 10 characters (10 bytes), not 25. Using VARCHAR2 reduces space consumption.

In contrast to VARCHAR2, CHAR stores fixed-length character strings. When you create a table with a CHAR column, the column requires a string length. The default is 1 byte. The database uses blanks to pad the value to the specified length.

Oracle Database compares VARCHAR2 values using nonpadded comparison semantics and compares CHAR values using blank-padded comparison semantics.
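The effect of padding and of the two comparison semantics can be sketched with a hypothetical table pad_demo. The example assumes single-byte characters.

```sql
CREATE TABLE pad_demo ( c CHAR(5), v VARCHAR2(5) );

INSERT INTO pad_demo VALUES ('ab', 'ab');

-- CHAR pads the value with blanks to 5 characters;
-- VARCHAR2 stores only the 2 characters entered.
SELECT LENGTH(c) AS char_len, LENGTH(v) AS varchar2_len
FROM   pad_demo;

-- CHAR column compared with a text literal: blank-padded semantics,
-- so the trailing blanks are ignored and the row matches.
SELECT COUNT(*) FROM pad_demo WHERE c = 'ab';

-- VARCHAR2 column involved: nonpadded semantics, so 'ab' and 'ab   '
-- are different values and no row matches.
SELECT COUNT(*) FROM pad_demo WHERE v = 'ab   ';
```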

See Also:

Oracle Database SQL Language Reference for details about blank-padded and nonpadded comparison semantics

NCHAR and NVARCHAR2 Data Types

NCHAR and NVARCHAR2 data types store Unicode character data.

Unicode is a universal encoded character set that can store information in any language using a single character set. NCHAR stores fixed-length character strings that correspond to the national character set, whereas NVARCHAR2 stores variable-length character strings.

You specify a national character set when creating a database. The character set of NCHAR and NVARCHAR2 data types must be either AL16UTF16 or UTF8. Both character sets use Unicode encoding.

When you create a table with an NCHAR or NVARCHAR2 column, the maximum size is always in character length semantics. Character length semantics is the default and only length semantics for NCHAR or NVARCHAR2.

See Also:

Oracle Database Globalization Support Guide for information about Oracle's globalization support feature

Numeric Data Types

The Oracle Database numeric data types store fixed and floating-point numbers, zero, and infinity. Some numeric types also store values that are the undefined result of an operation, which is known as "not a number" or NaN.

Oracle Database stores numeric data in variable-length format. Each value is stored in scientific notation, with 1 byte used to store the exponent. The database uses up to 20 bytes to store the mantissa, which is the part of a floating-point number that contains its significant digits. Oracle Database does not store leading and trailing zeros.

NUMBER Data Type

The NUMBER data type stores fixed and floating-point numbers. The database can store numbers of virtually any magnitude. This data is guaranteed to be portable among different operating systems running Oracle Database. The NUMBER data type is recommended for most cases in which you must store numeric data.

You specify a fixed-point number in the form NUMBER(p,s), where p and s refer to the following characteristics:

-

Precision

The precision specifies the total number of digits. If a precision is not specified, then the column stores the values exactly as provided by the application without any rounding.

-

Scale

The scale specifies the number of digits from the decimal point to the least significant digit. Positive scale counts digits to the right of the decimal point up to and including the least significant digit. Negative scale counts digits to the left of the decimal point up to but not including the least significant digit. If you specify a precision without a scale, as in NUMBER(6), then the scale is 0.

In Example 4-1, the salary column is type NUMBER(8,2), so the precision is 8 and the scale is 2. Thus, the database stores a salary of 100,000 as 100000.00.
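As a brief illustration of how precision and scale affect a stored value, the following query casts the literal 123.89 to several NUMBER definitions. The column aliases are illustrative only. A value that needs more digits than the precision allows, such as 123.89 cast to NUMBER(4,2), raises an error instead of being rounded.

```sql
SELECT CAST(123.89 AS NUMBER(6,2))  AS scale_2,        -- 123.89
       CAST(123.89 AS NUMBER(6,1))  AS scale_1,        -- 123.9
       CAST(123.89 AS NUMBER(3))    AS scale_0,        -- 124
       CAST(123.89 AS NUMBER(6,-2)) AS scale_minus_2   -- 100
FROM   DUAL;
```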

Floating-Point Numbers

Oracle Database provides two numeric data types exclusively for floating-point numbers: BINARY_FLOAT and BINARY_DOUBLE.

These types support all of the basic functionality provided by the NUMBER data type. However, whereas NUMBER uses decimal precision, BINARY_FLOAT and BINARY_DOUBLE use binary precision, which enables faster arithmetic calculations and usually reduces storage requirements.

BINARY_FLOAT and BINARY_DOUBLE are approximate numeric data types. They store approximate representations of decimal values, rather than exact representations. For example, the value 0.1 cannot be exactly represented by either BINARY_DOUBLE or BINARY_FLOAT. They are frequently used for scientific computations. Their behavior is similar to the data types float and double in Java and XML Schema.
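The following sketch contrasts binary and decimal precision. The d suffix marks a BINARY_DOUBLE literal, whereas an unsuffixed literal is a NUMBER. Because 0.1 has no exact binary representation, the first comparison is expected to report not equal, whereas the NUMBER comparison is expected to report equal. The second query checks the special values NaN and infinity.

```sql
-- Compare the same sum in binary precision and in decimal precision.
SELECT CASE WHEN 0.1d + 0.2d = 0.3d THEN 'equal' ELSE 'not equal' END AS binary_double_sum,
       CASE WHEN 0.1  + 0.2  = 0.3  THEN 'equal' ELSE 'not equal' END AS number_sum
FROM   DUAL;

-- Floating-point conditions test for the special values.
SELECT CASE WHEN BINARY_DOUBLE_NAN      IS NAN      THEN 'NaN'      END AS nan_check,
       CASE WHEN BINARY_DOUBLE_INFINITY IS INFINITE THEN 'infinite' END AS infinity_check
FROM   DUAL;
```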

See Also:

Oracle Database SQL Language Reference to learn about precision, scale, and other characteristics of numeric types

Datetime Data Types

The datetime data types are DATE and TIMESTAMP. Oracle Database provides comprehensive time zone support for time stamps.

DATE Data Type

The DATE data type stores date and time. Although datetimes can be represented in character or number data types, DATE has special associated properties.

The database stores dates internally as numbers. Dates are stored in fixed-length fields of 7 bytes each, corresponding to century, year, month, day, hour, minute, and second.

Note:

Dates fully support arithmetic operations, so you add to and subtract from dates just as you can with numbers.

The database displays dates according to the specified format model. A format model is a character literal that describes the format of a datetime in a character string. The standard date format is DD-MON-RR, which displays dates in the form 01-JAN-11.

RR is similar to YY (the last two digits of the year), but the century of the return value varies according to the specified two-digit year and the last two digits of the current year. Assume that in 1999 the database displays 01-JAN-11. If the date format uses RR, then 11 specifies 2011, whereas if the format uses YY, then 11 specifies 1911. You can change the default date format at both the database instance and session level.

Oracle Database stores time in 24-hour format (HH:MI:SS). If no time portion is entered, then by default the time in a date field is 00:00:00 (midnight). In a time-only entry, the date portion defaults to the first day of the current month.
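The following queries sketch the behavior described in this section. The first query assumes that the current year is between 2000 and 2049, in which case RR is expected to interpret 98 as 1998, whereas YY interprets it as 2098. The format masks use numeric months so that the results do not depend on the session date language.

```sql
-- RR and YY interpret the same two-digit year differently.
SELECT TO_CHAR(TO_DATE('01-01-98', 'DD-MM-RR'), 'YYYY') AS rr_year,
       TO_CHAR(TO_DATE('01-01-98', 'DD-MM-YY'), 'YYYY') AS yy_year
FROM   DUAL;

-- A date entered without a time portion defaults to midnight.
SELECT TO_CHAR(TO_DATE('2011-01-01', 'YYYY-MM-DD'),
               'YYYY-MM-DD HH24:MI:SS') AS stored_value   -- 2011-01-01 00:00:00
FROM   DUAL;

-- Subtracting one date from another returns the number of days between them.
SELECT TO_DATE('2011-01-08', 'YYYY-MM-DD')
     - TO_DATE('2011-01-01', 'YYYY-MM-DD') AS days_between   -- 7
FROM   DUAL;

-- Change the default date format for the current session only.
ALTER SESSION SET NLS_DATE_FORMAT = 'YYYY-MM-DD HH24:MI:SS';
```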

See Also:

-

Oracle Database Development Guide for more information about centuries and date format masks

-

Oracle Database SQL Language Reference for information about datetime format codes

-

Oracle Database Development Guide to learn how to perform arithmetic operations with datetime data types

TIMESTAMP Data Type

The TIMESTAMP data type is an extension of the DATE data type.

TIMESTAMP stores fractional seconds in addition to the information stored in the DATE data type. The TIMESTAMP data type is useful for storing precise time values, such as in applications that must track event order.

The datetime data types TIMESTAMP WITH TIME ZONE and TIMESTAMP WITH LOCAL TIME ZONE are time-zone aware. TIMESTAMP WITH TIME ZONE stores a time zone offset or region name with each value. For TIMESTAMP WITH LOCAL TIME ZONE, when a user selects the data, the value is adjusted to the time zone of the user session. These data types are useful for collecting and evaluating date information across geographic regions.
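The following sketch shows the three time stamp types side by side. The table event_log and the session time zone offset are hypothetical. After the session time zone changes, the TIMESTAMP WITH LOCAL TIME ZONE column is displayed in the new session time zone, whereas the TIMESTAMP WITH TIME ZONE column keeps the offset that was stored with the value.

```sql
-- Hypothetical table for illustration only.
CREATE TABLE event_log (
  event_id   NUMBER,
  logged_at  TIMESTAMP(6),                    -- fractional seconds, no time zone
  logged_tz  TIMESTAMP WITH TIME ZONE,        -- time zone stored with the value
  logged_ltz TIMESTAMP WITH LOCAL TIME ZONE   -- displayed in the session time zone
);

INSERT INTO event_log VALUES (1, SYSTIMESTAMP, SYSTIMESTAMP, SYSTIMESTAMP);

-- Simulate a user in a different region, then compare the two columns.
ALTER SESSION SET TIME_ZONE = '-05:00';

SELECT logged_tz, logged_ltz
FROM   event_log;
```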

See Also:

Oracle Database SQL Language Reference for details about the syntax of creating and entering data in time stamp columns

Rowid Data Types

Every row stored in the database has an address. Oracle Database uses a ROWID data type to store the address (rowid) of every row in the database.

Rowids fall into the following categories:

-

Physical rowids store the addresses of rows in heap-organized tables, table clusters, and table and index partitions.

-

Logical rowids store the addresses of rows in index-organized tables.

-

Foreign rowids are identifiers in foreign tables, such as DB2 tables accessed through a gateway. They are not standard Oracle Database rowids.

A data type called the universal rowid, or urowid, supports all types of rowids.

Use of Rowids

A B-tree index, which is the most common type, contains an ordered list of keys divided into ranges. Each key is associated with a rowid that points to the associated row's address for fast access.

End users and application developers can also use rowids for several important functions:

-

Rowids are the fastest means of accessing particular rows.

-

Rowids provide the ability to see how a table is organized.

-

Rowids are unique identifiers for rows in a given table.

You can also create tables with columns defined using the ROWID data type. For example, you can define an exception table with a column of data type ROWID to store the rowids of rows that violate integrity constraints. Columns defined using the ROWID data type behave like other table columns: values can be updated, and so on.
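The following sketch illustrates two of the uses listed above. The first query uses functions from the DBMS_ROWID package to show where rows are physically stored. The remaining statements store rowids in a hypothetical table named flagged_rows and then use them to fetch the original rows. The selection condition is arbitrary.

```sql
-- Decode the components of each physical rowid.
SELECT ROWID,
       DBMS_ROWID.ROWID_OBJECT(ROWID)       AS data_object_id,
       DBMS_ROWID.ROWID_RELATIVE_FNO(ROWID) AS relative_file_no,
       DBMS_ROWID.ROWID_BLOCK_NUMBER(ROWID) AS block_no,
       DBMS_ROWID.ROWID_ROW_NUMBER(ROWID)   AS row_no
FROM   hr.employees
WHERE  department_id = 80;

-- Hypothetical table with a column of data type ROWID.
CREATE TABLE flagged_rows (
  row_id     ROWID,
  flagged_on DATE DEFAULT SYSDATE
);

INSERT INTO flagged_rows (row_id)
  SELECT ROWID FROM hr.employees WHERE commission_pct IS NULL;

-- Fetch the flagged rows directly by their stored rowids.
SELECT e.employee_id, e.last_name
FROM   hr.employees e
WHERE  e.ROWID IN (SELECT row_id FROM flagged_rows);
```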

ROWID Pseudocolumn

Every table in an Oracle database has a pseudocolumn named ROWID.

A pseudocolumn behaves like a table column, but is not actually stored in the table. You can select from pseudocolumns, but you cannot insert, update, or delete their values. A pseudocolumn is also similar to a SQL function without arguments. Functions without arguments typically return the same value for every row in the result set, whereas pseudocolumns typically return a different value for each row.

Values of the ROWID pseudocolumn are strings representing the address of each row. These strings have the data type ROWID. This pseudocolumn is not evident when listing the structure of a table by executing SELECT or DESCRIBE, nor does the pseudocolumn consume space. However, the rowid of each row can be retrieved with a SQL query using the reserved word ROWID as a column name.

The following example queries the ROWID pseudocolumn to show the rowid of the row in the employees table for employee 100:

SQL> SELECT ROWID FROM employees WHERE employee_id = 100;

ROWID

------------------

AAAPecAAFAAAABSAAA

See Also:

-

Oracle Database Development Guide to learn how to identify rows by address

-

Oracle Database SQL Language Reference to learn about rowid types

Format Models and Data Types

A format model is a character literal that describes the format of datetime or numeric data stored in a character string. A format model does not change the internal representation of the value in the database.

When you convert a character string into a date or number, a format model determines how the database interprets the string. In SQL, you can use a format model as an argument of the TO_CHAR and TO_DATE functions to format a value to be returned from the database or to format a value to be stored in the database.

The following statement selects the salaries of the employees in Department 80 and uses the TO_CHAR function to convert these salaries into character values with the format specified by the number format model '$99,990.99':

SQL> SELECT last_name employee, TO_CHAR(salary, '$99,990.99') AS "SALARY"

2 FROM employees

3 WHERE department_id = 80 AND last_name = 'Russell';

EMPLOYEE SALARY

------------------------- -----------

Russell $14,000.00

The following example updates a hire date using the TO_DATE function with the format mask 'YYYY MM DD' to convert the string '1998 05 20' to a DATE value:

SQL> UPDATE employees

2 SET hire_date = TO_DATE('1998 05 20','YYYY MM DD')

3 WHERE last_name = 'Hunold';

See Also:

Oracle Database SQL Language Reference to learn more about format models

Integrity Constraints

An integrity constraint is a named rule that restricts the values for one or more columns in a table.

Data integrity rules prevent invalid data entry into tables. Also, constraints can prevent the deletion of a table when certain dependencies exist.

If a constraint is enabled, then the database checks data as it is entered or updated. Oracle Database prevents data that does not conform to the constraint from being entered. If a constraint is disabled, then Oracle Database allows data that does not conform to the constraint to enter the database.

In Example 4-1, the CREATE TABLE statement specifies NOT NULL constraints for the last_name, email, hire_date, and job_id columns. The constraint clauses identify the columns and the conditions of the constraint. These constraints ensure that the specified columns contain no null values. For example, an attempt to insert a new employee without a job ID generates an error.

You can create a constraint when or after you create a table. You can temporarily disable constraints if needed. The database stores constraints in the data dictionary.
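The following sketch shows a constraint created with the table, a constraint added afterward, and a constraint that is temporarily disabled. The table staff_demo and the constraint names are hypothetical. The final query reads the constraint definitions from the data dictionary.

```sql
-- Constraints declared when the table is created.
CREATE TABLE staff_demo (
  staff_id  NUMBER(6)    CONSTRAINT staff_demo_pk PRIMARY KEY,
  last_name VARCHAR2(25) CONSTRAINT staff_demo_name_nn NOT NULL,
  salary    NUMBER(8,2)
);

-- A constraint added after the table exists.
ALTER TABLE staff_demo
  ADD CONSTRAINT staff_demo_salary_min CHECK (salary > 0);

-- Temporarily disable the constraint, for example during a bulk load, then re-enable it.
ALTER TABLE staff_demo DISABLE CONSTRAINT staff_demo_salary_min;
ALTER TABLE staff_demo ENABLE  CONSTRAINT staff_demo_salary_min;

-- The data dictionary records each constraint and its status.
SELECT constraint_name, constraint_type, status
FROM   user_constraints
WHERE  table_name = 'STAFF_DEMO';
```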

See Also:

-

"Data Integrity" to learn about integrity constraints

-

"Overview of the Data Dictionary" to learn about the data dictionary

-

Oracle Database SQL Language Reference to learn about SQL constraint clauses

Table Storage

Oracle Database uses a data segment in a tablespace to hold table data.

A segment contains extents made up of data blocks. The data segment for a table (or cluster data segment, for a table cluster) is located in either the default tablespace of the table owner or in a tablespace named in the CREATE TABLE statement.
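The following sketch names a tablespace in the CREATE TABLE statement and then looks up the resulting data segment. It assumes that a tablespace named users exists and that the table storage_demo is hypothetical. Depending on the database configuration, the segment might not be created until the first row is inserted, so the example inserts a row before querying.

```sql
-- Hypothetical table placed in an explicitly named tablespace.
CREATE TABLE storage_demo (
  id   NUMBER,
  note VARCHAR2(100)
) TABLESPACE users;

INSERT INTO storage_demo VALUES (1, 'first row');
COMMIT;

-- Show the data segment, its tablespace, and its extents and blocks.
SELECT segment_name, segment_type, tablespace_name, extents, blocks
FROM   user_segments
WHERE  segment_name = 'STORAGE_DEMO';
```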

See Also:

"User Segments" to learn about the types of segments and how they are created

Table Organization

By default, a table is organized as a heap, which means that the database places rows where they fit best rather than in a user-specified order. Thus, a heap-organized table is an unordered collection of rows.

Note:

Index-organized tables use a different principle of organization.

As users add rows, the database places the rows in the first available free space in the data segment. Rows are not guaranteed to be retrieved in the order in which they were inserted.

The hr.departments table is a heap-organized table. It has columns for department ID, name, manager ID, and location ID. As rows are inserted, the database stores them wherever they fit. A data block in the table segment might contain the unordered rows shown in the following example:

50,Shipping,121,1500

120,Treasury,,1700

70,Public Relations,204,2700

30,Purchasing,114,1700

130,Corporate Tax,,1700

10,Administration,200,1700

110,Accounting,205,1700

The column order is the same for all rows in a table. The database usually stores columns in the order in which they were listed in the CREATE TABLE statement, but this order is not guaranteed. For example, if a table has a column of type LONG, then Oracle Database always stores this column last in the row. Also, if you add a new column to a table, then the new column becomes the last column stored.

A table can contain a virtual column, which unlike normal columns does not consume space on disk. The database derives the values in a virtual column on demand by computing a set of user-specified expressions or functions. You can index virtual columns, collect statistics on them, and create integrity constraints. Thus, virtual columns are much like nonvirtual columns.
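The following sketch defines a virtual column and then indexes and constrains it like a nonvirtual column. The table pay_demo, its columns, and the expression are hypothetical. The INSERT statement supplies values only for the stored columns because the database derives total_pay on demand.

```sql
-- Hypothetical table with a virtual column derived from two stored columns.
CREATE TABLE pay_demo (
  emp_id         NUMBER(6),
  salary         NUMBER(8,2),
  commission_pct NUMBER(2,2),
  total_pay      NUMBER GENERATED ALWAYS AS
                   (salary * (1 + NVL(commission_pct, 0))) VIRTUAL
);

-- Virtual columns can be indexed and can carry integrity constraints.
CREATE INDEX pay_demo_total_ix ON pay_demo (total_pay);

ALTER TABLE pay_demo
  ADD CONSTRAINT pay_demo_total_ck CHECK (total_pay >= 0);

-- Only the stored columns are supplied; total_pay is computed when queried.
INSERT INTO pay_demo (emp_id, salary, commission_pct) VALUES (1, 1000, 0.1);

SELECT emp_id, total_pay
FROM   pay_demo;
```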

See Also:

-

Oracle Database SQL Language Reference to learn about virtual columns

Row Storage

The database stores rows in data blocks. Each row of a table containing data for fewer than 256 columns is contained in one or more row pieces.

If possible, Oracle Database stores each row as one row piece. However, if all of the row data cannot be inserted into a single data block, or if an update to an existing row causes the row to outgrow its data block, then the database stores the row using multiple row pieces.

Rows in a table cluster contain the same information as rows in nonclustered tables. Additionally, rows in a table cluster contain information that references the cluster key to which they belong.

See Also:

"Data Block Format" to learn about the components of a data block

Rowids of Row Pieces

A rowid is effectively a 10-byte physical address of a row.

Every row in a heap-organized table has a rowid unique to this table that corresponds to the physical address of a row piece. For table clusters, rows in different tables that are in the same data block can have the same rowid.

Oracle Database uses rowids internally for the construction of indexes. For example, each key in a B-tree index is associated with a rowid that points to the address of the associated row for fast access. Physical rowids provide the fastest possible access to a table row, enabling the database to retrieve a row in as little as a single I/O.

See Also:

-

"Rowid Format" to learn about the structure of a rowid

-

"Overview of B-Tree Indexes" to learn about the types and structure of B-tree indexes

Storage of Null Values

A null is the absence of a value in a column. Nulls indicate missing, unknown, or inapplicable data.

Nulls are stored in the database if they fall between columns with data values. In these cases, they require 1 byte to store the length of the column (zero). Trailing nulls in a row require no storage because a new row header signals that the remaining columns in the previous row are null. For example, if the last three columns of a table are null, then no data is stored for these columns.

See Also:

Oracle Database SQL Language Reference to learn more about null values

Table Compression

The database can use table compression to reduce the amount of storage required for the table.

Compression saves disk space, reduces memory use in the database buffer cache, and in some cases speeds query execution. Table compression is transparent to database applications.

Basic Table Compression and Advanced Row Compression

Dictionary-based table compression provides good compression ratios for heap-organized tables.

Oracle Database supports the following types of dictionary-based table compression:

-

Basic table compression

This type of compression is intended for bulk load operations. The database does not compress data modified using conventional DML. You must use direct path INSERT operations, ALTER TABLE . . . MOVE operations, or online table redefinition to achieve basic table compression.

-

Advanced row compression

This type of compression is intended for OLTP applications and compresses data manipulated by any SQL operation. The database achieves a competitive compression ratio while enabling the application to perform DML in approximately the same amount of time as DML on an uncompressed table.

For the preceding types of compression, the database stores compressed rows in row major format. All columns of one row are stored together, followed by all columns of the next row, and so on. The database replaces duplicate values with a short reference to a symbol table stored at the beginning of the block. Thus, information that the database needs to re-create the uncompressed data is stored in the data block itself.

Compressed data blocks look much like normal data blocks. Most database features and functions that work on regular data blocks also work on compressed blocks.
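The bulk-load requirement for basic table compression can be sketched as follows. The table orders_archive is hypothetical. The APPEND hint requests a direct path INSERT, and the ALTER TABLE . . . MOVE statement rebuilds the table so that rows added earlier by conventional DML are also compressed.

```sql
-- Hypothetical empty copy of oe.orders that uses basic table compression.
CREATE TABLE orders_archive ROW STORE COMPRESS BASIC
  AS SELECT * FROM oe.orders WHERE 1 = 0;

-- Direct path INSERT: rows loaded this way are compressed.
INSERT /*+ APPEND */ INTO orders_archive
  SELECT * FROM oe.orders;
COMMIT;

-- Rebuild the table to compress rows that were added by conventional DML.
ALTER TABLE orders_archive MOVE ROW STORE COMPRESS BASIC;

-- Confirm the compression setting recorded for the table.
SELECT table_name, compression, compress_for
FROM   user_tables
WHERE  table_name = 'ORDERS_ARCHIVE';
```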

You can declare compression at the tablespace, table, partition, or subpartition level. If specified at the tablespace level, then all tables created in the tablespace are compressed by default.

Example 4-4 Table-Level Compression

The following statement applies advanced row compression to the orders table:

ALTER TABLE oe.orders ROW STORE COMPRESS ADVANCED;

Example 4-5 Partition-Level Compression

The following example of a partial CREATE TABLE statement specifies advanced row compression for one partition and basic table compression for the other partition:

CREATE TABLE sales (

prod_id NUMBER NOT NULL,

cust_id NUMBER NOT NULL, ... )

PCTFREE 5 NOLOGGING NOCOMPRESS

PARTITION BY RANGE (time_id)

( partition sales_2013 VALUES LESS THAN(TO_DATE(...)) ROW STORE COMPRESS BASIC,

partition sales_2014 VALUES LESS THAN (MAXVALUE) ROW STORE COMPRESS ADVANCED );

See Also:

-

"Row Format" to learn how values are stored in a row

-

"Data Block Compression" to learn about the format of compressed data blocks

-

Oracle Database Utilities to learn about using SQL*Loader for direct path loads

-

Oracle Database Administrator’s Guide and Oracle Database Performance Tuning Guide to learn about table compression

Hybrid Columnar Compression

With Hybrid Columnar Compression, the database stores the same column for a group of rows together. The data block does not store data in row-major format, but uses a combination of both row and columnar methods.

Storing column data together, with the same data type and similar characteristics, dramatically increases the storage savings achieved from compression. The database compresses data manipulated by any SQL operation, although compression levels are higher for direct path loads. Database operations work transparently against compressed objects, so no application changes are required.

Note:

Hybrid Columnar Compression and In-Memory Column Store (IM column store) are closely related. The primary difference is that Hybrid Columnar Compression optimizes disk storage, whereas the IM column store optimizes memory storage.

See Also:

"In-Memory Area" to learn more about the IM column store

Types of Hybrid Columnar Compression

If your underlying storage supports Hybrid Columnar Compression, then you can specify different types of compression, depending on your requirements.

The compression options are:

-

Warehouse compression

This type of compression is optimized to save storage space, and is intended for data warehouse applications.

-

Archive compression

This type of compression is optimized for maximum compression levels, and is intended for historical data and data that does not change.

Hybrid Columnar Compression is optimized for data warehousing and decision support applications on Oracle Exadata storage. Oracle Exadata maximizes the performance of queries on tables that are compressed using Hybrid Columnar Compression, taking advantage of the processing power, memory, and InfiniBand network bandwidth that are integral to the Oracle Exadata storage server.

Other Oracle storage systems support Hybrid Columnar Compression, and deliver the same space savings as on Oracle Exadata storage, but do not deliver the same level of query performance. For these storage systems, Hybrid Columnar Compression is ideal for in-database archiving of older data that is infrequently accessed.
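The two compression options can be sketched as follows, assuming that the underlying storage supports Hybrid Columnar Compression. On unsupported storage, these statements are expected to fail. The table names and columns are hypothetical. FOR QUERY corresponds to warehouse compression, and FOR ARCHIVE corresponds to archive compression.

```sql
-- Warehouse compression for data that is queried frequently.
CREATE TABLE daily_sales_dw (
  item_id   NUMBER,
  sale_date DATE,
  num_sold  NUMBER
) COLUMN STORE COMPRESS FOR QUERY HIGH;

-- Archive compression for historical data that does not change.
CREATE TABLE daily_sales_hist (
  item_id   NUMBER,
  sale_date DATE,
  num_sold  NUMBER
) COLUMN STORE COMPRESS FOR ARCHIVE HIGH;
```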

Compression Units

Hybrid Columnar Compression uses a logical construct called a compression unit to store a set of rows.

When you load data into a table, the database stores groups of rows in columnar format, with the values for each column stored and compressed together. After the database has compressed the column data for a set of rows, the database fits the data into the compression unit.

For example, you apply Hybrid Columnar Compression to a daily_sales table. At the end of every day, you populate the table with items and the number sold, with the item ID and date forming a composite primary key. The following table shows a subset of the rows in daily_sales.

Table 4-3 Sample Table daily_sales

| Item_ID | Date | Num_Sold | Shipped_From | Restock |
|---|---|---|---|---|
| 1000 | 01-JUN-18 | 2 | WAREHOUSE1 | Y |
| 1001 | 01-JUN-18 | 0 | WAREHOUSE3 | N |
| 1002 | 01-JUN-18 | 1 | WAREHOUSE3 | N |
| 1003 | 01-JUN-14 | 0 | WAREHOUSE2 | N |
| 1004 | 01-JUN-18 | 2 | WAREHOUSE1 | N |
| 1005 | 01-JUN-18 | 1 | WAREHOUSE2 | N |

Assume that this subset of rows is stored in one compression unit. Hybrid Columnar Compression stores the values for each column together, and then uses multiple algorithms to compress each column. The database chooses the algorithms based on a variety of factors, including the data type of the column, the cardinality of the actual values in the column, and the compression level chosen by the user.

As shown in the following graphic, each compression unit can span multiple data blocks. The values for a particular column may or may not span multiple blocks.

If Hybrid Columnar Compression does not lead to space savings, then the database stores the data in the DBMS_COMPRESSION.COMP_BLOCK format. In this case, the database applies OLTP compression to the blocks, which reside in a Hybrid Columnar Compression segment.

See Also:

-

Oracle Database Licensing Information User Manual to learn about licensing requirements for Hybrid Columnar Compression

-

Oracle Database Administrator’s Guide to learn how to use Hybrid Columnar Compression

-

Oracle Database SQL Language Reference for CREATE TABLE syntax and semantics

-

Oracle Database PL/SQL Packages and Types Reference to learn about the DBMS_COMPRESSION package

DML and Hybrid Columnar Compression

Hybrid Columnar Compression has implications for row locking in different types of DML operations.

Direct Path Loads and Conventional Inserts

When loading data into a table that uses Hybrid Columnar Compression, you can use either conventional inserts or direct path loads. Direct path loads lock the entire table, which reduces concurrency.

Starting with Oracle Database 12c Release 2 (12.2), support is added for conventional array inserts into the Hybrid Columnar Compression format. The advantages of conventional array inserts are:

-

Inserted rows use row-level locks, which increases concurrency.

-

Automatic Data Optimization (ADO) and Heat Map support Hybrid Columnar Compression for row-level policies. Thus, the database can use Hybrid Columnar Compression for eligible blocks even when DML activity occurs on other parts of the segment.

When the application uses conventional array inserts, Oracle Database stores the rows in compression units when the following conditions are met:

-

The table is stored in an ASSM tablespace.

-

The compatibility level is 12.2.0.1 or later.

-

The table definition satisfies the existing Hybrid Columnar Compression table constraints, including no columns of type LONG, and no row dependencies.

Conventional inserts generate redo and undo. Thus, compression units created by conventional DML statements are rolled back or committed along with the DML. The database automatically performs index maintenance, just as for rows that are stored in conventional data blocks.

Updates and Deletes

By default, the database locks all rows in the compression unit if an update or delete is applied to any row in the unit. To avoid this issue, you can choose to enable row-level locking for a table. In this case, the database only locks rows that are affected by the update or delete operation.
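The following sketch enables row-level locking for a table that uses Hybrid Columnar Compression. It assumes supported storage, and the table sales_events is hypothetical. The ROW LEVEL LOCKING keywords follow the compression clause.

```sql
-- Hypothetical table created with row-level locking enabled.
CREATE TABLE sales_events (
  item_id   NUMBER,
  sale_date DATE,
  num_sold  NUMBER
) COLUMN STORE COMPRESS FOR QUERY HIGH ROW LEVEL LOCKING;

-- Return to the default, which locks the whole compression unit.
ALTER TABLE sales_events
  COLUMN STORE COMPRESS FOR QUERY HIGH NO ROW LEVEL LOCKING;
```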

See Also:

-

Oracle Database Administrator’s Guide to learn how to perform conventional inserts

-

Oracle Database SQL Language Reference to learn about the INSERT statement

Overview of Table Clusters

A table cluster is a group of tables that share common columns and store related data in the same blocks.

When tables are clustered, a single data block can contain rows from multiple tables. For example, a block can store rows from both the employees and departments tables rather than from only a single table.

The cluster key is the column or columns that the clustered tables have in common. For example, the employees and departments tables share the department_id column. You specify the cluster key when creating the table cluster and when creating every table added to the table cluster.

The cluster key value is the value of the cluster key columns for a particular set of rows. All data that contains the same cluster key value, such as department_id=20, is physically stored together. Each cluster key value is stored only once in the cluster and the cluster index, no matter how many rows of different tables contain the value.

For an analogy, suppose an HR manager has two book cases: one with boxes of employee folders and the other with boxes of department folders. Users often ask for the folders for all employees in a particular department. To make retrieval easier, the manager rearranges all the boxes in a single book case. She divides the boxes by department ID. Thus, all folders for employees in department 20 and the folder for department 20 itself are in one box; the folders for employees in department 100 and the folder for department 100 are in another box, and so on.

Consider clustering tables when they are primarily queried (but not modified) and records from the tables are frequently queried together or joined. Because table clusters store related rows of different tables in the same data blocks, properly used table clusters offer the following benefits over nonclustered tables:

-

Disk I/O is reduced for joins of clustered tables.

-

Access time improves for joins of clustered tables.

-

Less storage is required to store related table and index data because the cluster key value is not stored repeatedly for each row.

Typically, clustering tables is not appropriate in the following situations:

-

The tables are frequently updated.

-

The tables frequently require a full table scan.

-

The tables require truncating.

Overview of Indexed Clusters

An indexed cluster is a table cluster that uses an index to locate data. The cluster index is a B-tree index on the cluster key. A cluster index must be created before any rows can be inserted into clustered tables.

Example 4-6 Creating a Table Cluster and Associated Index

Assume that you create the cluster employees_departments_cluster with the cluster key department_id, as shown in the following example:

CREATE CLUSTER employees_departments_cluster

(department_id NUMBER(4))

SIZE 512;

CREATE INDEX idx_emp_dept_cluster

ON CLUSTER employees_departments_cluster;

Because the HASHKEYS clause is not specified, employees_departments_cluster is an indexed cluster. The preceding example creates an index named idx_emp_dept_cluster on the cluster key department_id.

Example 4-7 Creating Tables in an Indexed Cluster

You create the employees and departments tables in the cluster, specifying the department_id column as the cluster key, as follows (the ellipses mark the place where the column specification goes):

CREATE TABLE employees ( ... )

CLUSTER employees_departments_cluster (department_id);

CREATE TABLE departments ( ... )

CLUSTER employees_departments_cluster (department_id);

Assume that you add rows to the employees and departments tables. The database physically stores all rows for each department from the employees and departments tables in the same data blocks. The database stores the rows in a heap and locates them with the index.

Figure 4-8 shows the employees_departments_cluster table cluster, which contains employees and departments. The database stores rows for employees in department 20 together, department 110 together, and so on. If the tables are not clustered, then the database does not ensure that the related rows are stored together.

The B-tree cluster index associates the cluster key value with the database block address (DBA) of the block containing the data. For example, the index entry for key 20 shows the address of the block that contains data for employees in department 20:

20,AADAAAA9d

The cluster index is separately managed, just like an index on a nonclustered table, and can exist in a separate tablespace from the table cluster.
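The following sketch combines the steps in this section into one runnable sequence. To avoid clashing with the objects in the preceding examples, it uses hypothetical names (emp_dept_demo_cluster, dept_demo, and emp_demo), reduced column lists, and illustrative rows. The cluster index is created before any rows are inserted.

```sql
-- Create the cluster and its cluster index.
CREATE CLUSTER emp_dept_demo_cluster
  (department_id NUMBER(4))
  SIZE 512;

CREATE INDEX idx_emp_dept_demo
  ON CLUSTER emp_dept_demo_cluster;

-- Create both tables in the cluster, naming the cluster key column.
CREATE TABLE dept_demo (
  department_id   NUMBER(4) PRIMARY KEY,
  department_name VARCHAR2(30)
) CLUSTER emp_dept_demo_cluster (department_id);

CREATE TABLE emp_demo (
  employee_id   NUMBER(6) PRIMARY KEY,
  last_name     VARCHAR2(25),
  department_id NUMBER(4)
) CLUSTER emp_dept_demo_cluster (department_id);

-- Rows with the same cluster key value are stored together.
INSERT INTO dept_demo VALUES (20, 'Marketing');
INSERT INTO emp_demo  VALUES (201, 'Hartstein', 20);
INSERT INTO emp_demo  VALUES (202, 'Fay', 20);
COMMIT;

-- A join on the cluster key can read the related rows from the same blocks.
SELECT d.department_name, e.last_name
FROM   dept_demo d
       JOIN emp_demo e ON e.department_id = d.department_id
WHERE  d.department_id = 20;
```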

See Also:

-

Oracle Database Administrator’s Guide to learn how to create and manage indexed clusters

-

Oracle Database SQL Language Reference for CREATE CLUSTER syntax and semantics

Overview of Hash Clusters

A hash cluster is like an indexed cluster, except the index key is replaced with a hash function. No separate cluster index exists. In a hash cluster, the data is the index.

With an indexed table or indexed cluster, Oracle Database locates table rows using key values stored in a separate index. To find or store a row in an indexed table or table cluster, the database must perform at least two I/Os:

-

One or more I/Os to find or store the key value in the index

-

Another I/O to read or write the row in the table or table cluster

To find or store a row in a hash cluster, Oracle Database applies the hash function to the cluster key value of the row. The resulting hash value corresponds to a data block in the cluster, which the database reads or writes on behalf of the issued statement.

Hashing is an optional way of storing table data to improve the performance of data retrieval. Hash clusters may be beneficial when the following conditions are met:

-

A table is queried much more often than modified.

-

The hash key column is queried frequently with equality conditions, for example, WHERE department_id=20. For such queries, the cluster key value is hashed. The hash key value points directly to the disk area that stores the rows.

-

You can reasonably guess the number of hash keys and the size of the data stored with each key value.

Hash Cluster Creation

To create a hash cluster, you use the same CREATE CLUSTER statement as for an indexed cluster, with the addition of a hash key. The number of hash values for the cluster depends on the hash key.

The cluster key, like the key of an indexed cluster, is a single column or composite key shared by the tables in the cluster. A hash key value is an actual or possible value inserted into the cluster key column. For example, if the cluster key is department_id, then hash key values could be 10, 20, 30, and so on.

Oracle Database uses a hash function that accepts an infinite number of hash key values as input and sorts them into a finite number of buckets. Each bucket has a unique numeric ID known as a hash value. Each hash value maps to the database block address for the block that stores the rows corresponding to the hash key value (department 10, 20, 30, and so on).

In the following example, the number of departments that are likely to exist is 100, so HASHKEYS is set to 100:

CREATE CLUSTER employees_departments_cluster

(department_id NUMBER(4))

SIZE 8192 HASHKEYS 100;

After you create employees_departments_cluster, you can create the employees and departments tables in the cluster. You can then load data into the hash cluster just as in the indexed cluster.

See Also:

-

Oracle Database Administrator’s Guide to learn how to create and manage hash clusters

Hash Cluster Queries

In queries of a hash cluster, the database determines how to hash the key values input by the user.

For example, users frequently execute queries such as the following, entering different department ID numbers for p_id:

SELECT *

FROM employees

WHERE department_id = :p_id;

SELECT *

FROM departments

WHERE department_id = :p_id;

SELECT *

FROM employees e, departments d

WHERE e.department_id = d.department_id

AND d.department_id = :p_id;

If a user queries employees in department_id=20, then the database might hash this value to bucket 77. If a user queries employees in department_id=10, then the database might hash this value to bucket 15. The database uses the internally generated hash value to locate the block that contains the employee rows for the requested department.

The following illustration depicts a hash cluster segment as a horizontal row of blocks. As shown in the graphic, a query can retrieve data in a single I/O.

Figure 4-9 Retrieving Data from a Hash Cluster


A limitation of hash clusters is the unavailability of range scans on nonindexed cluster keys. Assume no separate index exists for the hash cluster created in Hash Cluster Creation. A query for departments with IDs between 20 and 100 cannot use the hashing algorithm because it cannot hash every possible value between 20 and 100. Because no index exists, the database must perform a full scan.

Hash Cluster Variations

A single-table hash cluster is an optimized version of a hash cluster that supports only one table at a time. A one-to-one mapping exists between hash keys and rows.

A single-table hash cluster can be beneficial when users require rapid access to a table by primary key. For example, users often look up an employee record in the employees table by employee_id.

A sorted hash cluster stores the rows corresponding to each value of the hash function in such a way that the database can efficiently return them in sorted order. The database performs the optimized sort internally. For applications that always consume data in sorted order, this technique can mean faster retrieval of data. For example, an application might always sort on the order_date column of the orders table.
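The two variations might be declared as follows. This is a sketch, not a recommended configuration: the cluster names, the customer_id column, and the SIZE and HASHKEYS values are assumptions made for illustration.

```sql
-- Single-table hash cluster keyed on employee_id (sizes are illustrative).
CREATE CLUSTER employees_cluster
  (employee_id NUMBER(6))
  SIZE 512 SINGLE TABLE HASHKEYS 1000;

-- Sorted hash cluster: rows are hashed on customer_id, and the rows for each
-- customer are stored so that they can be returned in order_date order.
CREATE CLUSTER orders_cluster
  ( customer_id  NUMBER(6),
    order_date   DATE SORT )
  HASHKEYS 10000
  HASH IS customer_id
  SIZE 256;
```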

See Also:

Oracle Database Administrator’s Guide to learn how to create single-table and sorted hash clusters

Hash Cluster Storage

Oracle Database allocates space for a hash cluster differently from an indexed cluster.

In the example in Hash Cluster Creation, HASHKEYS specifies the number of departments likely to exist, whereas SIZE specifies the size of the data associated with each department. The database computes a storage space value based on the following formula:

HASHKEYS * SIZE / database_block_size

Thus, if the block size is 4096 bytes in the example shown in Hash Cluster Creation, then the database allocates at least 200 blocks to the hash cluster.
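The arithmetic can be checked directly by substituting the example values into the formula. The query below returns 200:

```sql
-- HASHKEYS * SIZE / database_block_size, using the values from the example
SELECT 100 * 8192 / 4096 AS min_blocks
FROM   dual;
```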

Oracle Database does not limit the number of hash key values that you can insert into the cluster. For example, even though HASHKEYS is 100, nothing prevents you from inserting 200 unique departments in the departments table. However, the efficiency of hash cluster retrieval diminishes when the number of distinct hash key values exceeds the HASHKEYS setting.

To illustrate the retrieval issues, assume that block 100 in Figure 4-9 is completely full with rows for department 20. A user inserts a new department with department_id 43 into the departments table. The number of departments exceeds the HASHKEYS value, so the database hashes department_id 43 to hash value 77, which is the same hash value used for department_id 20. Hashing multiple input values to the same output value is called a hash collision.

When users insert rows into the cluster for department 43, the database cannot store these rows in block 100, which is full. The database links block 100 to a new overflow block, say block 200, and stores the inserted rows in the new block. Both block 100 and 200 are now eligible to store data for either department. As shown in Figure 4-10, a query of either department 20 or 43 now requires two I/Os to retrieve the data: block 100 and its associated block 200. You can solve this problem by re-creating the cluster with a different HASHKEYS value.
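One way to apply that fix is sketched below, assuming that about 250 distinct departments are now expected. The new cluster name and the HASHKEYS value are hypothetical.

```sql
-- Hypothetical replacement cluster sized for more distinct department IDs.
CREATE CLUSTER employees_departments_cluster2
  (department_id NUMBER(4))
  SIZE 8192 HASHKEYS 250;
```

The clustered tables would then be re-created in the new cluster and their rows reloaded.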

Figure 4-10 Retrieving Data from a Hash Cluster When a Hash Collision Occurs

See Also:

Oracle Database Administrator’s Guide to learn how to manage space in hash clusters

Overview of Attribute-Clustered Tables

An attribute-clustered table is a heap-organized table that stores data in close proximity on disk based on user-specified clustering directives. The directives specify columns in single or multiple tables.

The directives are as follows:

- The CLUSTERING ... BY LINEAR ORDER directive orders data in a table according to specified columns.

  Consider using BY LINEAR ORDER clustering, which is the default, when queries qualify the prefix of columns specified in the clustering clause. For example, if queries of sh.sales often specify either a customer ID or both customer ID and product ID, then you could cluster data in the table using the linear column order cust_id, prod_id.

- The CLUSTERING ... BY INTERLEAVED ORDER directive orders data in one or more tables using a special algorithm, similar to a Z-order function, that permits multicolumn I/O reduction.

  Consider using BY INTERLEAVED ORDER clustering when queries specify a variety of column combinations. For example, if queries of sh.sales specify different dimensions in different orders, then you can cluster data in the sales table according to columns in these dimensions.

Attribute clustering is only available for direct path INSERT operations. It is ignored for conventional DML.
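The two directives might be used as in the following sketch. The table names sales_linear and sales_interleaved are hypothetical, and the column lists are an abbreviated subset of sh.sales.

```sql
-- Hypothetical table clustered by linear order on (cust_id, prod_id).
CREATE TABLE sales_linear
  ( prod_id      NUMBER NOT NULL,
    cust_id      NUMBER NOT NULL,
    time_id      DATE   NOT NULL,
    amount_sold  NUMBER(10,2) )
  CLUSTERING BY LINEAR ORDER (cust_id, prod_id);

-- Clustering is applied by direct path loads such as this APPEND insert;
-- a conventional INSERT would leave the rows unordered.
INSERT /*+ APPEND */ INTO sales_linear
  SELECT prod_id, cust_id, time_id, amount_sold
  FROM   sh.sales;
COMMIT;

-- Interleaved variant for queries that filter on varying column combinations.
CREATE TABLE sales_interleaved
  ( prod_id      NUMBER NOT NULL,
    cust_id      NUMBER NOT NULL,
    time_id      DATE   NOT NULL,
    amount_sold  NUMBER(10,2) )
  CLUSTERING BY INTERLEAVED ORDER (cust_id, prod_id, time_id);
```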

Advantages of Attribute-Clustered Tables

The primary benefit of attribute-clustered tables is I/O reduction, which can significantly reduce the I/O cost and CPU cost of table scans. I/O reduction occurs either with zones or by reducing physical I/O through closer physical proximity on disk for the clustered values.

An attribute-clustered table has the following advantages:

- You can cluster fact tables based on dimension columns in star schemas.

  In star schemas, most queries qualify dimension tables and not fact tables, so clustering by fact table columns is not effective. Oracle Database supports clustering on columns in dimension tables.

- I/O reduction can occur in several different scenarios:

  - When used with Oracle Exadata Storage Indexes, Oracle In-Memory min/max pruning, or zone maps

  - In OLTP applications for queries that qualify a prefix and use attribute clustering with linear order

  - On a subset of the clustering columns for BY INTERLEAVED ORDER clustering

- Attribute clustering can improve data compression, and in this way indirectly improve table scan costs.

  When the same values are close to each other on disk, the database can more easily compress them.

- Oracle Database does not incur the storage and maintenance cost of an index.

See Also:

Oracle Database Data Warehousing Guide for more advantages of attribute-clustered tables

Join Attribute Clustered Tables

Attribute clustering that is based on joined columns is called join attribute clustering. In contrast with table clusters, join attribute clustered tables do not store data from a group of tables in the same database blocks.

For example, consider an attribute-clustered table, sales, joined with a dimension table, products. The sales table contains only rows from the sales table, but the ordering of the rows is based on the values of columns joined from products table. The appropriate join is executed during data movement, direct path insert, and CREATE TABLE AS SELECT operations. In contrast, if sales and products were in a standard table cluster, the data blocks would contain rows from both tables.
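A join attribute clustered table along these lines might be declared as follows. This is a sketch: the table name sales_by_product and its abbreviated column list are hypothetical, and it assumes that products.prod_id is the primary key of products, as in the sh sample schema.

```sql
-- Rows are stored only from sales_by_product, but their physical order
-- follows columns joined from the products dimension table.
CREATE TABLE sales_by_product
  ( prod_id      NUMBER NOT NULL,
    cust_id      NUMBER NOT NULL,
    time_id      DATE   NOT NULL,
    amount_sold  NUMBER(10,2) )
  CLUSTERING sales_by_product
    JOIN products ON (sales_by_product.prod_id = products.prod_id)
  BY LINEAR ORDER (products.prod_category, products.prod_subcategory);
```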

See Also:

Oracle Database Data Warehousing Guide to learn more about join attribute clustering

I/O Reduction Using Zones

A zone is a set of contiguous data blocks that stores the minimum and maximum values of relevant columns.

When a SQL statement contains predicates on columns stored in a zone, the database compares the predicate values to the minimum and maximum stored in the zone. In this way, the database determines which zones to read during SQL execution.

I/O reduction is the ability to skip table or index blocks that do not contain data that the database needs to satisfy a query. This reduction can significantly reduce the I/O and CPU cost of table scans.

Purpose of Zones

For a loose analogy of zones, consider a sales manager who uses a bookcase of pigeonholes, which are analogous to data blocks.

Each pigeonhole has receipts (rows) describing shirts sold to a customer, ordered by ship date. In this analogy, a zone map is like a stack of index cards. Each card corresponds to a "zone" (contiguous range) of pigeonholes, such as pigeonholes 1-10. For each zone, the card lists the minimum and maximum ship dates for the receipts stored in the zone.

When someone wants to know which shirts shipped on a certain date, the manager flips the cards until she comes to the date range that contains the requested date, notes the pigeonhole zone, and then searches only pigeonholes in this zone for the requested receipts. In this way, the manager avoids searching every pigeonhole in the bookcase for the receipts.

Zone Maps

A zone map is an independent access structure that divides data blocks into zones. Oracle Database implements each zone map as a type of materialized view.

Like indexes, zone maps can reduce the I/O and CPU costs of table scans. When a SQL statement contains predicates on columns in a zone map, the database compares the predicate values to the minimum and maximum table column values stored in each zone to determine which zones to read during SQL execution.

A basic zone map is defined on a single table and maintains the minimum and maximum values of some columns of this table. A join zone map is defined on a table that has an outer join to one or more other tables and maintains the minimum and maximum values of some columns in the other tables. Oracle Database maintains both types of zone map automatically.

At most one zone map can exist on a table. In the case of a partitioned table, one zone map exists for all partitions and subpartitions. A zone map of a partitioned table also keeps track of the minimum and maximum values per zone, per partition, and per subpartition. Zone map definitions can include minimum and maximum values of dimension columns provided the table has an outer join with the dimension tables.
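A join zone map of this kind could be sketched as follows, assuming sales and customers tables like those in the sh sample schema. The zone map name is hypothetical, and the outer join on the dimension table is written with the (+) operator.

```sql
-- Tracks the minimum and maximum dimension values for each zone of sales.
CREATE MATERIALIZED ZONEMAP sales_cust_zmap
AS
  SELECT SYS_OP_ZONE_ID(s.rowid),
         MIN(c.cust_state_province), MAX(c.cust_state_province),
         MIN(c.cust_city),           MAX(c.cust_city)
  FROM   sales s, customers c
  WHERE  s.cust_id = c.cust_id(+)
  GROUP  BY SYS_OP_ZONE_ID(s.rowid);
```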

See Also:

Oracle Database Data Warehousing Guide for an overview of zone maps

Zone Map Creation

Basic zone maps are created either manually or automatically.

Manual Zone Maps

You can create, drop, and maintain zone maps using DDL statements.

Whenever you specify the CLUSTERING clause in a CREATE TABLE or ALTER TABLE statement, the database automatically creates a zone map on the specified clustering columns. The zone map correlates minimum and maximum values of columns with consecutive data blocks in the attribute-clustered table. Attribute-clustered tables use zone maps to perform I/O reduction.

You can also create zone maps explicitly by using the CREATE MATERIALIZED ZONEMAP statement. In this case, you can create zone maps for use with or without attribute clustering. For example, you can create a zone map on a table whose rows are naturally ordered on a set of columns, such as a stock trade table whose trades are ordered by time.
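Both approaches are sketched below. The tables sales_zm and stock_trades, their columns, and the zone map name are hypothetical; stock_trades is assumed to already exist with rows naturally ordered by trade_time.

```sql
-- Zone map requested together with attribute clustering.
CREATE TABLE sales_zm
  ( prod_id      NUMBER NOT NULL,
    cust_id      NUMBER NOT NULL,
    amount_sold  NUMBER(10,2) )
  CLUSTERING BY LINEAR ORDER (cust_id, prod_id)
  WITH MATERIALIZED ZONEMAP;

-- Zone map created explicitly on a table without attribute clustering.
CREATE MATERIALIZED ZONEMAP stock_trades_zmap
  ON stock_trades (trade_time);

-- Zone maps are maintained and dropped with DDL as well.
ALTER MATERIALIZED ZONEMAP stock_trades_zmap REBUILD;
DROP MATERIALIZED ZONEMAP stock_trades_zmap;
```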

See Also:

Oracle Database Data Warehousing Guide to learn how to create zone maps

Automatic Zone Maps

Oracle Database can create basic zone maps automatically. These are known as automatic zone maps.

Automatic zone maps are supported for both partitioned and nonpartitioned tables. A background process automatically maintains zone maps created in this way.

Use the DBMS_AUTO_ZONEMAP.CONFIGURE procedure to enable automatic zone maps:

EXEC DBMS_AUTO_ZONEMAP.CONFIGURE('AUTO_ZONEMAP_MODE','ON')

See Also:

- Oracle Database Data Warehousing Guide to learn more about managing automatic zone maps using the DBMS_AUTO_ZONEMAP package

- Oracle Database PL/SQL Packages and Types Reference to learn more about the DBMS_AUTO_ZONEMAP package

- Oracle Database Licensing Information User Manual for details on which features are supported for different editions and services

How a Zone Map Works: Example

This example illustrates how a zone map can prune data in a query whose predicate contains a constant.

Assume you create the following lineitem table:

CREATE TABLE lineitem

( orderkey NUMBER ,

shipdate DATE ,

receiptdate DATE ,

destination VARCHAR2(50) ,