4 Sharded Database Deployment

Create and configure a sharded database, beginning with host provisioning, and continuing through software configuration, database setup, sharding metadata creation, and schema creation. This process is known as deployment.

The following topics explain the concepts and tasks to deploy a sharded database:

- Introduction to Sharded Database Deployment

Oracle Sharding provides the capability to automatically deploy the sharded database, which includes both the shards and the replicas. - Planning Your Sharded Database Deployment

Many decisions need to be made when planning your sharded database deployment including the sharded database topology, replication method, and the sharding methodology. - Install the Oracle Database Software

Install Oracle Database on each system that will host the shard catalog, a database shard, or their replicas. - Install the Shard Director Software

Install the global service manager software on each system that you want to host a shard director. - Create the Shard Catalog Database

Use the following information and guidelines to create the shard catalog database. - Create the Shard Databases

The databases that will be used as shards should be created on their respective hosts. - Configure the Sharded Database Topology

After the databases for the shard catalog and all of the shards are configured, along with corresponding TNS listeners, you can add the sharding metadata to the shard catalog database usingGDSCTL. The sharding metadata describes the topology used for the sharded database. - Deploy the Sharding Configuration

When the sharded database topology has been fully configured withGDSCTLcommands, run theGDSCTL DEPLOYcommand to deploy the sharded database configuration. - Create and Start Global Database Services

After the shards are successfully deployed, and the correct status has been confirmed, create and start global database services on the shards to service incoming connection requests from your application. - Verify Shard Status

Once you complete the DEPLOY step in your sharding configuration deployment, verify the detailed status of a shard - Example Sharded Database Deployment

This example explains how to deploy a typical system-managed sharded database with multiple replicas, using Oracle Data Guard for high availability.

Introduction to Sharded Database Deployment

Oracle Sharding provides the capability to automatically deploy the sharded database, which includes both the shards and the replicas.

The sharded database administrator defines the topology (regions, shard hosts,

replication technology) and invokes the DEPLOY command with a

declarative specification using the GDSCTL command-line interface.

Before You Begin

Note that there are many different configurations and topologies that can be used for a sharded database. Your particular sharded database may employ a variety of Oracle software components such as Oracle Data Guard and Oracle Real Application Clusters (Oracle RAC) along with different sharding methodologies including system-managed, composite, and user-defined sharding.

Depending on your application’s particular architecture and system requirements, you may have several choices from which to choose when designing your system. Familiarize yourself with Oracle Sharding Architecture and Concepts before proceeding with deployment.

Sharded Database Deployment Roadmap

At a high level, the deployment steps are:

- Set up the components.

- Provision and configure the hosts that will be needed for the sharding configuration and topology selected (see Provision and Configure Hosts and Operating Systems).

- Install Oracle Database software on the selected catalog and shard nodes (see Install the Oracle Database Software).

- Install global service manager (GSM) software on the shard director nodes (see Install the Shard Director Software).

- Create databases needed to store the sharding metadata and the application data.

- Create a database that will become the shard catalog along with any desired replicas for disaster recovery (DR) and high availability (HA) (see Create the Shard Catalog Database).

- Create databases that will become the shards in the configuration including any standby databases needed for DR and HA (see Create the Shard Databases).

- Specify the sharding topology using some or all the following commands

from the

GDSCTLcommand line utility, among others (see Configure the Sharded Database Topology).CREATE SHARDCATALOGADD GSMSTART GSMADD SHARDGROUPADD SHARDADD INVITEDNODE

- Run

DEPLOYto deploy the sharding topology configuration (see Deploy the Sharding Configuration). - Add the global services needed to access any shard in the sharded database (see Create and Start Global Database Services).

- Verify the status of each shard (see Verify Shard Status).

When the sharded database configuration deployment is complete and successful, you can create the sharded schema objects needed for your application. See Sharded Database Schema Objects.

The topics that follow describe each of the deployment tasks in more detail along with specific requirements for various components in the system. These topics can act as a reference for the set up and configuration of each particular step in the process. However, by themselves, they will not produce a fully functional sharding configuration since they do not implement a complete sharding scenario, but only provide the requirements for each step.

Example Sharded Database Deployment walks you through a specific deployment scenario of a representative reference configurations. This section provides examples of every command needed to produce a fully functional sharded databases once all the steps are completed.

Parent topic: Sharded Database Deployment

Planning Your Sharded Database Deployment

Many decisions need to be made when planning your sharded database deployment including the sharded database topology, replication method, and the sharding methodology.

There are many different configurations and topologies that can be used for a sharded database. Your particular sharded database may employ a variety of Oracle software components such as Oracle Data Guard and Oracle Real Application Clusters (Oracle RAC) along with different sharding methodologies including system-managed, composite, and user-defined sharding.

Depending on which sharding method you choose (system, composite, or user-defined sharding), you can further refine your topology planning with decisions about considerations such as the number of chunks, shardgroups or shardspaces, regions, standbys, and open as opposed to mounted databases, and so on.

See Oracle Sharding Architecture and Concepts for information pertaining to these topology options.

- Plan the Sharded Database Configuration

To plan your Oracle Sharding configuration you need an understanding of the objects that make up a sharded database configuration, so that you can best configure and deploy them to meet your requirements. - Provision and Configure Hosts and Operating Systems

Before you install any software, review these hardware, network, and operating system requirements for Oracle Sharding. - Multi-Shard Query Coordinator Availability and Scalability

The multi-shard query coordinator, a component of the shard catalog, can be kept highly available and scaled to meet its workload with these recommendations.

Parent topic: Sharded Database Deployment

Plan the Sharded Database Configuration

To plan your Oracle Sharding configuration you need an understanding of the objects that make up a sharded database configuration, so that you can best configure and deploy them to meet your requirements.

The sharded database configuration consists of the sharding method, replication (high availability) technology, the default number of chunks to be present in the sharded database, the location and number of shard directors, the numbers of shardgroups, shardspaces, regions, and shards in the sharded database, and the global services that will be used to connect to the sharded database.

Oracle Database Global Data Services Architecture

Because the Oracle Sharding feature is built on the Oracle Database Global Data Services feature, to plan your Oracle Sharding topology you might benefit from an understanding of the Global Data Services architecture. See Introduction to Global Data Services for conceptual information about Global Data Services.

Parent topic: Planning Your Sharded Database Deployment

Provision and Configure Hosts and Operating Systems

Before you install any software, review these hardware, network, and operating system requirements for Oracle Sharding.

-

Oracle Database Enterprise Edition is required when running an Oracle Sharded Database.

-

Hardware and operating system requirements for shards are the same as those for Oracle Database. See your Oracle Database installation documentation for these requirements.

-

Hardware and operating system requirements for the shard catalog and shard directors are the same as those for the Global Data Services catalog and global service manager. See Oracle Database Global Data Services Concepts and Administration Guide for these requirements.

-

Network Low Latency GigE is strongly recommended

-

Port communication requirements are as follows.

-

Each and every shard must be able to reach each and every shard director's listener and ONS ports. The shard director listener ports and the ONS ports must also be opened to the application/client tier, all of the shards, the shard catalog, and all other shard directors.

The default listener port of the shard director is 1522, and the default ONS ports on most platforms are 6123 for the local ONS and 6234 for remote ONS.

-

Each and every shard must be able to reach the TNS Listener port (default 1521) of the shard catalog (both primary and standbys).

-

The TNS Listener port of each shard must be opened to all shard directors and the shard catalog.

-

All of the port numbers listed above are modifiable during the deployment configuration. However, the port numbers to be used must be known before setting up the host software.

-

-

Host name resolution must be successful between all of the shard catalog, shards, and shard director hosts. Operating system commands such as ‘ping’ must succeed from a given host to any other host when specifying any host names provided during sharded database configuration commands.

Number and Sizing of Host Systems

Depending on your specific configuration, the hosts that are needed may include the following:

-

Shard catalog host. The shard catalog host runs the Oracle Database that serves as the shard catalog. This database contains a small amount of sharding topology metadata and any duplicated tables that are created for your application. In addition, the shard catalog acts as a multi-shard query coordinator for cross-shard queries and services connections for applications that have not been written to be sharding-aware. In general, the transaction workload and size of this database are not particularly large.

-

Shard catalog database standbys (replicas). At least one more host to contain a replica or standby of the primary shard catalog database is recommended. This host is necessary in case of a failure of the primary catalog host. In addition, while acting as a standby database, this host can also be configured to be a query coordinator for cross-shard queries.

-

Shard director host. The shard director (global service manager) software can reside on a separate host, or it can be co-located on the same host as the shard catalog. This component of the sharding system is comprised of a network listener and several background processes used to monitor and configure a sharded configuration. If it is co-located on the same host as the catalog database, the shard director must be installed in a separate Oracle Home from the catalog database, because the installation package is different than the one used for Oracle Database.

-

Multiple shard directors. For high-availability purposes, it is recommended that you have more than one shard director running in a sharded system. Any additional shard directors can run on their own hosts or on the hosts running the standby shard catalog databases.

-

Shards. In addition to the above hosts, each shard that is configured in the system should also run on its own separate host. The hosts and their configurations chosen for this task should be sized in the same way as a typical Oracle Database host depending on how much load is put on each particular shard.

-

Shard standbys (replicas). Again, for high-availability and disaster recovery purposes, use Oracle Data Guard and replicas created for all sharded data. Additional hosts will be needed to run these replica or standby databases.

Note:

Oracle GoldenGate replication support for Oracle Sharding High Availability is deprecated in Oracle Database 21c.

When the number of hosts and capacity requirements for each host have been determined, provision your hardware resources as appropriate for your environment using whatever methodologies you choose.

Before installing any software, you must confirm that the hosts can communicate with each other though the ports as described above. Because a sharding configuration is inherently a distributed system, it is crucial that this connectivity between and among all of the hosts is confirmed before moving on to the next steps in the deployment process. Failure to set up port access correctly will lead to failures in subsequent commands.

Parent topic: Planning Your Sharded Database Deployment

Multi-Shard Query Coordinator Availability and Scalability

The multi-shard query coordinator, a component of the shard catalog, can be kept highly available and scaled to meet its workload with these recommendations.

The availability of the multi-shard coordinator impacts proxy-routing based workloads, so it is highly recommended that the coordinator be protected with Data Guard in Maximum Availability protection mode (zero data loss failover) with fast-start failover enabled. The coordinator may optionally be Oracle RAC-enabled for additional availability and scalability.

To improve the scalability and availability of multi-shard query workloads,

Oracle Active Data Guard standby shard catalog databases in read-only mode can act as

multi-shard query coordinators. For each active replica of the catalog database, a

special coordinator service, GDS$COORDINATOR.cloud_name (where cloud_name is the value

specified for the configname parameter in the GDSCTL CREATE

SHARDCATALOG command, and is oradbcloud by default) is

running and registered on all shard directors.

Clients can connect to this service on any of the replicas and perform multi-shard queries, allowing shard directors to distribute the multi-shard query workload with respect to runtime load balancing and decrease the load on in the primary shard catalog, which is the central component of the Oracle Sharding framework.

Additionally, if the database’s region is set, and the client specifies the region in the connection string, a shard director routes a connection with respect to regional affinity.

Availability of the multi-shard query coordinator has zero impact on workloads using direct routing.

Parent topic: Planning Your Sharded Database Deployment

Install the Oracle Database Software

Install Oracle Database on each system that will host the shard catalog, a database shard, or their replicas.

Aside from the requirement that the shard catalog and all of the shards in an Oracle Sharding configuration require Oracle Database Enterprise Edition, there are no other special installation considerations needed for sharding as long as the installation is successful and all post-install scripts have been run successfully.

See your platform’s installation guide at https://docs.oracle.com/en/database/oracle/oracle-database/ for information about configuring operating system users.

Parent topic: Sharded Database Deployment

Install the Shard Director Software

Install the global service manager software on each system that you want to host a shard director.

Note that this software installation is distinct from an Oracle Database installation. If you choose to co-locate the shard director software on the same host as the shard catalog database, it must be installed in a separate Oracle Home.

See Oracle Database Global Data Services Concepts and Administration Guide for information about installing the global service manager software.

Parent topic: Sharded Database Deployment

Create the Shard Catalog Database

Use the following information and guidelines to create the shard catalog database.

The shard catalog database contains a small amount of sharding topology metadata and also contains all the duplicated tables that will be created for use by your sharded application. The shard catalog database also acts as a query coordinator to run cross-shard queries that select and aggregate data from more than one shard.

From a sharding perspective, the way in which you create or provision the catalog database is irrelevant. The database can be created with the Database Configuration Assistant (DBCA), manually using SQL*Plus, or provisioned from cloud infrastructure tools.

As long as you have a running Oracle Database Enterprise Edition instance on the shard catalog host with the following characteristics, it can used as the shard catalog.

-

Create a pluggable database (PDB) for use as the shard catalog database. Using the root container (

CDB$ROOT) of a container database (CDB) as the shard catalog database is not supported. -

Your shard catalog database must use a server parameter file (

SPFILE). This is required because the sharding infrastructure uses internal database parameters to store configuration metadata, and that data needs to persist across database startup and shutdown operations.$ sqlplus / as sysdba SQL> show parameter spfile NAME TYPE VALUE -------- --------- ------------------------------------ spfile string /u01/app/oracle/dbs/spfilecat.ora -

The database character set and national character set must be the same, because it is used for all of the shard databases. This means that the character set chosen must contain all possible characters that will be inserted into the shard catalog or any of the shards.

This requirement arises from the fact that Oracle Data Pump is used internally to move transportable tablespaces from one shard to another during sharding

MOVE CHUNKcommands. A requirement of that mechanism is that character sets must match on the source and destination.$ sqlplus / as sysdba SQL> alter session set container=catalog_pdb_name; SQL> select * from nls_database_parameters 2 where parameter like '%CHARACTERSET'; PARAMETER VALUE ---------------------------------------- -------------------- NLS_NCHAR_CHARACTERSET AL16UTF16 NLS_CHARACTERSET WE8DEC -

Because the shard catalog database can run multi-shard queries which connect to shards over database links, the

OPEN_LINKSandOPEN_LINKS_PER_INSTANCEdatabase initialization parameter values must be greater than or equal to the number of shards that will be part of the sharded database configuration.$ sqlplus / as sysdba SQL> alter session set container=catalog_pdb_name; SQL> show parameter open_links NAME TYPE VALUE ------------------------------------ ----------- ------------ open_links integer 20 open_links_per_instance integer 20 -

Set the

DB_FILESdatabase initialization parameter greater than or equal to the total number of chunks and/or tablespaces in the system.Each data chunk in a sharding configuration is implemented as a tablespace partition and resides in its own operating system data file. As a result, the

DB_FILESdatabase initialization parameter must be greater than or equal to the total number of chunks (as specified on theCREATE SHARDCATALOGorADD SHARDSPACEcommands) and/or tablespaces in the system.$ sqlplus / as sysdba SQL> alter session set container=catalog_pdb_name; SQL> show parameter db_files NAME TYPE VALUE ------------------------------------ ----------- ------------ db_files integer 1024 -

To support Oracle Managed Files, which is used by the sharding chunk management infrastructure, the

DB_CREATE_FILE_DESTdatabase parameter must be set to a valid value.This location is used during chunk movement operations (for example

MOVE CHUNKor automatic rebalancing) to store the transportable tablespaces holding the chunk data. In addition, files described in Oracle Database Administrator’s Guide, "Using Oracle Managed Files," are also stored in this location as is customary for any Oracle database using Oracle Managed Files.$ sqlplus / as sysdba SQL> alter session set container=catalog_pdb_name; SQL> show parameter db_create_file_dest NAME TYPE VALUE --------------------- --------- ----------------------------- db_create_file_dest string /u01/app/oracle/oradata -

If a standby catalog database will be part of the sharding configuration, the

STANDBY_FILE_MANAGEMENTdatabase parameter should be set to in order to automatically create new database files on any standby catalog databases.If this parameter is set to

MANUAL(which is the default), then new database files created duringCREATE TABLESPACEcommands, for example, will not be created on the standby. This will cause data unavailability and application errors if the standby ever becomes a primary database.$ sqlplus / as sysdba SQL> alter session set container=catalog_pdb_name; SQL> show parameter standby_file_management NAME TYPE VALUE ------------------------------------ ----------- ------------ standby_file_management stirng AUTO -

An Oracle-provided user account named

GSMCATUSERmust be unlocked and assigned a password inside the PDB designated for the shard catalog. This account is used by the shard director processes to connect to the shard catalog database and perform administrative tasks in response to sharding commands.Note that

GSMCATUSERis a common user in the container database. As a result, its password is the same forCDB$ROOTand all PDBs in the CDB. If multiple PDBs in a single CDB are to be used as catalog databases for different sharding configurations, they will all share the sameGSMCATUSERpassword which can be a security concern. To avoid this potential security concern, configure a separate CDB to host each shard catalog. Each CDB should contain only a single shard catalog PDB so that no other PDBs in the CDB can share the commonGSMCATUSERpassword. In this way, multiple shard catalogs can be configured across several CDBs, each having differentGSMCATUSERpasswords.The password you specify is used later during sharding topology creation in any

ADD GSMcommands that are issued. It never needs to be specified again because the shard director stores it securely in an Oracle Wallet and decrypts it only when necessary.The

MODIFY GSMcommand can be used to update the stored password if it is later changed on the shard catalog database.$ sqlplus / as sysdba SQL> alter user gsmcatuser account unlock; User altered. SQL> alter user gsmcatuser identified by gsmcatuser_password; User altered. SQL> alter session set container=catalog_pdb_name; SQL> alter user gsmcatuser account unlock; User altered. -

A shard catalog administrator account must be created, assigned a password, and granted privileges inside the PDB designated as the shard catalog.

This account is the administrator account for the sharding metadata in the shard catalog database. It is used to access the shard catalog using the

GDSCTLutility when an administrator needs to makes changes to the sharded database topology or perform other administrative tasks.GDSCTLconnects as this user to the shard catalog database whenGDSCTLcommands are run. The user name and password specified are used later in theCREATE SHARDCATALOGcommand. As with theGSMCATUSERaccount above, the user name and password are stored securely in an Oracle Wallet for later use. The stored credentials can be updated by issuing an explicitCONNECTcommand fromGDSCTLto reset the values in the wallet.$ sqlplus / as sysdba SQL> alter session set container=catalog_pdb_name; SQL> create user mysdbadmin identified by mysdbadmin_password; User created. SQL> grant gsmadmin_role to mysdbadmin; Grant succeeded. -

Set up and run an Oracle Net TNS Listener at your chosen port (default is 1521) that can service incoming connection requests for the shard catalog PDB.

The TNS Listener can be created and configured in whatever way you wish. Depending on how the database was created, it may be necessary to explicitly create a database service that can allow for direct connection requests to the PDB without the need to use

ALTER SESSION SET CONTAINER.To validate that the listener is configured correctly, do the following using your newly created mysdbadmin account above and an appropriate connect string. Running

LSNRCTL SERVICESlists all services currently available using the listener.$ sqlplus mysdbadmin/mysdbadmin_password@catalog_connect_string SQL> show con_name CON_NAME ----------------------- catalog_pdb_nameOnce you confirm connectivity, make note of the catalog_connect_string above. It is used later in the configuration process in the

GDSCTL CREATE SHARDCATALOGcommand. Typically, it will be of the form host:port/service_name (for example,cathost.example.com:1521/catalog_pdb.example.com).

After all of the above requirements have been met, the newly created database can now

be the target of a GDSCTL CREATE SHARDCATALOG command.

For high availability and disaster recovery purposes, it is highly recommended that you also create one or more standby shard catalog databases. From a sharding perspective, as long as the above requirements are also met on the standby databases, and all changes to the primary shard catalog database are consistently applied to the standbys, there are no further sharding-specific configuration steps required.

Parent topic: Sharded Database Deployment

Create the Shard Databases

The databases that will be used as shards should be created on their respective hosts.

As with the shard catalog database, the way in which you create or provision the shard databases is irrelevant from a sharding perspective. The database can be created with the Database Configuration Assistant (DBCA), manually using SQL*Plus, or provisioned from cloud infrastructure tools.

As long as you have a running Oracle Database Enterprise Edition instance on each shard host, with the following characteristics, it can be used as a shard.

-

An Oracle-provided user account named

GSMROOTUSERmust be unlocked and assigned a password insideCDB$ROOTof the database designated for a shard. In addition, this user must be granted theSYSDGandSYSBACKUPsystem privileges.The

GSMROOTUSERaccount is used byGDSCTLand the shard director processes to connect to the shard database to perform administrative tasks in response to sharding commands. The password specified is used byGDSCTLduring sharding topology creation in anyADD CDBcommands that are issued. It is also be used by the shard director during theDEPLOYcommand to configure Oracle Data Guard (as necessary) on the shard databases. It never needs to be specified again by the user, becauseGDSCTLand the shard director store it securely in an Oracle Wallet and decrypt it only when necessary. TheMODIFY CDBcommand can be used to update the stored password if it is later changed on the shard database.$ sqlplus / as sysdba SQL> alter user gsmrootuser account unlock; User altered. SQL> alter user gsmrootuser identified by gsmrootuser_password; User altered. SQL> grant SYSDG, SYSBACKUP to gsmrootuser; Grant succeeded. -

Create a pluggable database (PDB) for use as the shard database. Using the root container (

CDB$ROOT) of a container database (CDB) as a shard is not supported. -

Your shard database must use a server parameter file (

SPFILE). TheSPFILEis required because the sharding infrastructure uses internal database parameters to store configuration metadata, and that data must persist through database startup and shutdown operations.$ sqlplus / as sysdba SQL> alter session set container=shard_pdb_name; SQL> show parameter spfile NAME TYPE VALUE -------- --------- ------------------------------------ spfile string /u01/app/oracle/dbs/spfileshard.ora -

The database character set and national character set of the shard database must be the same as that used for the shard catalog database and all other shard databases. This means that the character set you choose must contain all possible characters that will be inserted into the shard catalog or any of the shards.

This requirement arises from the fact that Oracle Data Pump is used internally to move transportable tablespaces from one shard to another during sharding

MOVE CHUNKcommands. A requirement of that mechanism is that character sets must match on the source and destination.$ sqlplus / as sysdba SQL> alter session set container=shard_pdb_name; SQL> select * from nls_database_parameters 2 where parameter like '%CHARACTERSET'; PARAMETER VALUE ---------------------------------------- -------------------- NLS_NCHAR_CHARACTERSET AL16UTF16 NLS_CHARACTERSET WE8DEC -

The

COMPATIBLEinitialization parameter must be set to at least 12.2.0.$ sqlplus / as sysdba SQL> alter session set container=shard_pdb_name; SQL> show parameter compatible NAME TYPE VALUE ---------------------- ----------- ----------------- compatible string 20.0.0 -

Enable Flashback Database if your sharded database will use standby shard databases.

$ sqlplus / as sysdba SQL> alter session set container=shard_pdb_name; SQL> select flashback_on from v$database; FLASHBACK_ON ------------------ YES -

FORCE LOGGINGmode must be enabled if your shard database will use standby shard databases.$ sqlplus / as sysdba SQL> alter session set container=shard_pdb_name; SQL> select force_logging from v$database; FORCE_LOGGING --------------------------------------- YES -

Set the

DB_FILESdatabase initialization parameter greater than or equal to the total number of chunks and/or tablespaces in the system.Each data chunk in a sharding configuration is implemented as a tablespace partition and resides in its own operating system datafile. As a result, the

DB_FILESdatabase initialization parameter must be greater than or equal to the total number of chunks (as specified in theCREATE SHARDCATALOGorADD SHARDSPACEcommands) and/or tablespaces in the system.$ sqlplus / as sysdba SQL> alter session set container=shard_pdb_name; SQL> show parameter db_files NAME TYPE VALUE ------------------------------------ ----------- ------------ db_files integer 1024 -

To support Oracle Managed Files, used by the sharding chunk management infrastructure, the

DB_CREATE_FILE_DESTdatabase parameter must be set to a valid value.This location is used during chunk movement operations (for example

MOVE CHUNKor automatic rebalancing) to store the transportable tablespaces holding the chunk data. In addition, files described in Oracle Database Administrator’s Guide, "Using Oracle Managed Files," are also stored in this location as is customary for any Oracle database using Oracle Managed Files.$ sqlplus / as sysdba SQL> alter session set container=shard_pdb_name; SQL> show parameter db_create_file_dest NAME TYPE VALUE --------------------- --------- ----------------------------- db_create_file_dest string /u01/app/oracle/oradata -

A directory object named

DATA_PUMP_DIRmust be created and accessible in the PDB from theGSMADMIN_INTERNALaccount.GSMADMIN_INTERNALis an Oracle-supplied account that owns all of the sharding metadata tables and PL/SQL packages. It should remain locked and is never used to login interactively. It’s only purpose is to own and control access to the sharding metadata and PL/SQL.$ sqlplus / as sysdba SQL> create or replace directory DATA_PUMP_DIR as ‘/u01/app/oracle/oradata’; Directory created. SQL> alter session set container=shard_pdb_name; SQL> grant read, write on directory DATA_PUMP_DIR to gsmadmin_internal; Grant succeeded. -

To support file movement from shard to shard, the

DB_FILE_NAME_CONVERTdatabase parameter must be set to a valid value. This location is used when standby databases are in use, as is typical with non-sharded databases, and the location can also be used during chunk movement operations. For regular file system locations, it is recommended that this parameter end with a trailing slash (/).$ sqlplus / as sysdba SQL> alter session set container=shard_pdb_name; SQL> show parameter db_file_name_convert NAME TYPE VALUE ---------------------- --------- ----------------------------- db_file_name_convert string /dbs/SHARD1/, /dbs/SHARD1S/ -

If a standby shard databases will be part of the sharding configuration, the

STANDBY_FILE_MANAGEMENTdatabase parameter should be set toAUTOto automatically create new database files on any standby shard databases.If this parameter is set to

MANUAL(which is the default), then new database files created duringCREATE TABLESPACEcommands, for example, will not be created on the standby. This will cause data unavailability and application errors if the standby ever becomes a primary database.$ sqlplus / as sysdba SQL> alter session set container=shard_pdb_name; SQL> show parameter standby_file_management NAME TYPE VALUE ------------------------------------ ----------- ------------ standby_file_management string AUTO -

An Oracle-provided user account named

GSMUSERmust be unlocked and assigned a password inside the PDB designated as the shard database. In addition, this user must be granted theSYSDGandSYSBACKUPsystem privileges.Note that

GSMUSERis a common user in the container database. As a result, its password is the same forCDB$ROOTand all PDBs in the CDB, which can be a security concern. To avoid this, host only one shard PDB per CDB, and do not unlock theGSMUSERaccount in any other PDBs.This account is used by the shard director processes to connect to the shard database and perform administrative tasks in response to sharding commands. The password specified is used later during sharding topology creation in any

ADD SHARDcommands that are issued. The password never needs to be specified again because the shard director stores it securely in an Oracle Wallet and only decrypts it when necessary. You can update the stored password using theMODIFY SHARDcommand if the password is later changed on the shard database.$ sqlplus / as sysdba SQL> alter user gsmuser account unlock; User altered. SQL> alter user gsmuser identified by gsmuser_password; User altered. SQL> alter session set container=shard_pdb_name; SQL> alter user gsmuser account unlock; User altered. SQL> grant SYSDG, SYSBACKUP to gsmuser; Grant succeeded. -

Set up and run an Oracle Net TNS Listener at your chosen port (default is 1521) that can service incoming connection requests for the shard PDB.

The TNS Listener can be created and configured in whatever way you wish. Depending on how the database was created, it may be necessary to explicitly create a database service that can allow for direct connection requests to the PDB without the need to use

ALTER SESSION SET CONTAINER.To validate that the listener is configured correctly, run the following command using your newly unlocked

GSMUSERaccount and an appropriate connect string. RunningLSNRCTL SERVICESlists all services currently available using the listener.$ sqlplus gsmuser/gsmuser_password@shard_connect_string SQL> show con_name CON_NAME ----------------------- shard_pdb_nameOnce you confirm connectivity, make note of the shard_connect_string above. It is used later in the configuration process in the

GDSCTL ADD SHARDcommand. Typically, the connect string is in the form host:port/service_name (for example,shardhost.example.com:1521/shard_pdb.example.com).

Validate the Shard Database

To validate that all of the above requirements have been met, you can

run an Oracle-supplied procedure, validateShard, that inspects the

shard database and reports any issues encountered. This procedure is read-only and

makes no changes to the database configuration.

The validateShard procedure can and should be run

against primary, mounted (unopened) standby, and Active Data Guard standby databases

that are part of the sharded database configuration. You can run

validateShard multiple times and at any time during the sharded

database life cycle, including after upgrades and patching.

To run the validateShard package, do the following:

$ sqlplus / as sysdba

SQL> alter session set container=shard_pdb_name;

SQL> set serveroutput on

SQL> execute dbms_gsm_fix.validateShard

This procedure will produce output similar to the following:

INFO: Data Guard shard validation requested.

INFO: Database role is PRIMARY.

INFO: Database name is SHARD1.

INFO: Database unique name is shard1.

INFO: Database ID is 4183411430.

INFO: Database open mode is READ WRITE.

INFO: Database in archivelog mode.

INFO: Flashback is on.

INFO: Force logging is on.

INFO: Database platform is Linux x86 64-bit.

INFO: Database character set is WE8DEC. This value must match the character set of the catalog database.

INFO: 'compatible' initialization parameter validated successfully.

INFO: Database is a multitenant container database.

INFO: Current container is SHARD1_PDB1.

INFO: Database is using a server parameter file (spfile).

INFO: db_create_file_dest set to: '/u01/app/oracle/dbs'

INFO: db_recovery_file_dest set to: '/u01/app/oracle/dbs'

INFO: db_files=1000. Must be greater than the number of chunks and/or

tablespaces to be created in the shard.

INFO: dg_broker_start set to TRUE.

INFO: remote_login_passwordfile set to EXCLUSIVE.

INFO: db_file_name_convert set to: '/dbs/SHARD1/, /dbs/SHARD1S/'

INFO: GSMUSER account validated successfully.

INFO: DATA_PUMP_DIR is '/u01/app/oracle/dbs/9830571348DFEBA8E0537517C40AF64B'.

All output lines marked INFO are for informational

purposes and should be validated as correct for your configuration.

All output lines marked ERROR must be fixed before moving on to the

next deployment steps. These issues will cause errors for certain sharding

operations if they are not resolved.

All output lines marked WARNING may or may not be applicable for

your configuration. For example, if standby databases will not be used for this

particular deployment, then any warnings related to standby databases or recovery

can be ignored. This is especially true for non-production, proof-of-concept, or

application development deployments. Review all warnings and resolve as

necessary.

Once all of the above steps have been completed, the newly created

database can now be the target of a GDSCTL ADD SHARD command.

For high availability and disaster recovery purposes, it is highly

recommended that you also create one or more standby shard databases. From a

sharding perspective, as long as the above requirements are also met on the standby

databases, and all changes to the primary shard database are applied to the

standbys, the standby database only needs to be added to the sharding configuration

with an ADD SHARD command.

Parent topic: Sharded Database Deployment

Configure the Sharded Database Topology

After the databases for the shard catalog and all of the shards are

configured, along with corresponding TNS listeners, you can add the sharding metadata to the

shard catalog database using GDSCTL. The sharding metadata describes the

topology used for the sharded database.

The sharded database topology consists of the sharding method, replication (high availability) technology, the default number of chunks to be present in the sharded database, the location and number of shard directors, the numbers of shardgroups, shardspaces, regions, and shards in the sharded database, and the global services that will be used to connect to the sharded database.

Keep the Global Data Services Control Utility (GDSCTL)

Command Reference in the Oracle Database Global

Data Services Concepts and Administration

Guide on hand for

information about usage and options for the GDSCTL commands used in

the configuration procedures.

Follow the procedures listed below, in order, to complete your sharded database topology configuration.

Run the commands from a shard director host, because the

GDSCTL command line interface is installed there as part of the

shard director (global service manager) installation.

- Create the Shard Catalog

Use theGDSCTL CREATE SHARDCATALOGcommand to create metadata describing the sharded database topology in the shard catalog database. - Add and Start Shard Directors

Add to the configuration the shard directors, which will monitor the sharding system and run background tasks in response toGDSCTLcommands and other events, and start them. - Add Shardspaces If Needed

If you are using composite or user-defined sharding, and you need to add more shardspaces to complete your desired sharding topology, use theADD SHARDSPACEcommand to add additional shardspaces. - Add Shardgoups If Needed

If your sharded database topology uses the system-managed or composite sharding method, you can add any necessary additional shardgroups for your application. - Verify the Sharding Topology

Before adding information about your shard databases to the catalog, verify that your sharding topology is correct before proceeding by using the variousGDSCTL CONFIGcommands. - Add the Shard CDBs

Add the CDBs containing the shard PDBs to the sharding configuration with theADD CDBcommand. - Add the Shard PDBs

Use theADD SHARDcommand to add the shard PDB information to the shard catalog, then verify it with theCONFIG SHARDcommand. - Add Host Metadata

Add all of the host names and IP addresses of your shard hosts to the shard catalog.

Parent topic: Sharded Database Deployment

Create the Shard Catalog

Use the GDSCTL CREATE SHARDCATALOG command to create

metadata describing the sharded database topology in the shard catalog database.

Note that once you run CREATE SHARDCATALOG, and the rest

of the sharding metadata has been created, there are several metadata properties

that cannot be modified without recreating the entire sharded database from scratch.

These include the sharding method (system-managed, composite, user-defined),

replication technology (Oracle Data Guard, Oracle GoldenGate), default number of

chunks in the database, and others. Make sure that you consult the

GDSCTL reference documentation for the complete list of

possible command options and their defaults.

Note:

Oracle GoldenGate replication support for Oracle Sharding High Availability is deprecated in Oracle Database 21c.Consult the GDSCTL documentation or run GDSCTL HELP CREATE

SHARDCATALOG for more details about the command usage.

Shard Catalog Connect String

When you run the CREATE SHARDCATALOG command,

GDSCTL connects to the shard catalog database with the user

name and connect string specified.

If your shard catalog database has an associated standby database for high availability or disaster recovery purposes, the connection string, catalog_connect_string in the examples that follow, should specify all primary and standby databases. If you don't include the standby databases in the connect string, then the shard director processes will not be able to connect to the standby if the primary shard catalog is unavailable.

Note that catalog_connect_string should

specify the PDB for the shard catalog database, not the

CDB$ROOT.

The following is a simple tnsnames.ora entry.

CATALOG_CONNECT_STRING=

(DESCRIPTION =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = tcp)(HOST = primary_catalog)(PORT = 1521))

(ADDRESS = (PROTOCOL = tcp)(HOST = standby_catalog)(PORT = 1521))

)

(CONNECT_DATA =

(SERVICE_NAME = catpdb.example.com)

)

)Future Connections to the Shard Catalog

GDSCTL stores the credentials for the shard catalog

administrator in a wallet on the local host. However, for subsequent

GDSCTL sessions on other hosts, it may be necessary to

explicitly connect to the shard catalog in order to perform administrative tasks by

running the GDSCTL CONNECT command, as shown here.

GDSCTL> connect mysdbadmin/mysdbadmin_password@catalog_connect_stringParent topic: Configure the Sharded Database Topology

Add and Start Shard Directors

Add to the configuration the shard directors, which will monitor the

sharding system and run background tasks in response to GDSCTL commands and

other events, and start them.

The following commands must be run on the host where the shard director processes are to run. This can be the shard catalog host or a dedicated host for the shard director processes.

For later GDSCTL sessions, you might need to explicitly

specify the shard director to be administered. If an error message is shown

referencing the default GSMORA shard director, run

GDSCTL SET GSM before continuing, as shown here.

GDSCTL> set gsm -gsm sharddirector1Parent topic: Configure the Sharded Database Topology

Add Shardspaces If Needed

If you are using composite or user-defined sharding, and you need to add

more shardspaces to complete your desired sharding topology, use the ADD

SHARDSPACE command to add additional shardspaces.

Parent topic: Configure the Sharded Database Topology

Add Shardgoups If Needed

If your sharded database topology uses the system-managed or composite sharding method, you can add any necessary additional shardgroups for your application.

Each shardspace must contain at least one primary shardgroup and may contain any number or type of standby shardgroups. Shardgroups are not used in the user-defined sharding method.

Parent topic: Configure the Sharded Database Topology

Verify the Sharding Topology

Before adding information about your shard databases to the catalog, verify

that your sharding topology is correct before proceeding by using the various GDSCTL

CONFIG commands.

Once shards are added and deployed, it is no longer possible to change much of the shard catalog metadata, so validating your configuration is an important task at this point.

Parent topic: Configure the Sharded Database Topology

Add the Shard CDBs

Add the CDBs containing the shard PDBs to the sharding configuration with

the ADD CDB command.

Parent topic: Configure the Sharded Database Topology

Add the Shard PDBs

Use the ADD SHARD command to add the shard PDB information

to the shard catalog, then verify it with the CONFIG SHARD

command.

Parent topic: Configure the Sharded Database Topology

Add Host Metadata

Add all of the host names and IP addresses of your shard hosts to the shard catalog.

As part of the deployment process, the shard director contacts the shards and directs them to register with the shard director’s TNS listener process. This listener process only accepts incoming registration requests from trusted sources and will reject registration requests from unknown hosts.

If your shard hosts have multiple host names or network interfaces

assigned to them, it is possible that the incoming registration request to the shard

director may come from a host that was not automatically added during ADD

SHARD. In this case, the registration request is rejected and the shard

will not deploy correctly. The visible symptom of this problem will be that

CONFIG SHARD shows PENDING for the shard’s

Availability after DEPLOY has completed.

To avoid this issue, use the GDSCTL ADD INVITEDNODE

command to manually add all host names and IP addresses of your shard hosts to the

shard catalog metadata.

Parent topic: Configure the Sharded Database Topology

Deploy the Sharding Configuration

When the sharded database topology has been fully configured with

GDSCTL commands, run the GDSCTL DEPLOY command to

deploy the sharded database configuration.

When you run the GDSCTL DEPLOY command the output looks

like the following.

GDSCTL> deploy

deploy: examining configuration...

deploy: requesting Data Guard configuration on shards via GSM

deploy: shards configured successfully

The operation completed successfullyWhat Happens During Deployment

As you can see, when you run DEPLOY several things happen.

- GDSCTL calls a PL/SQL procedure on the shard catalog that examines the sharded database topology configuration to determine if there are any undeployed shards present that are able to be deployed.

- For shards that need to be deployed, the shard catalog sends requests to the shard director to update database parameters on the shards, populate topology metadata on the shard, and direct the shard to register with the shard director.

- If Oracle Data Guard replication is in use, and standby databases are present to deploy, then the shard director calls PL/SQL APIs on the primary shards to create a Data Guard configuration, or to validate an existing configuration on the primary and standby sets. Fast Start Failover functionality is enabled on all of the shards and, in addition, the shard director starts a Data Guard observer process on its host to monitor the Data Guard configuration.

- If new shards are being added to an existing sharded database that already contains deployed shards (called an incremental deployment), then any DDL statements that have been run previously are run on the new shards to ensure that the application schemas are identical across all of the shards.

- Finally, in the case of an incremental deployment on a sharded database using

system-managed or composite sharding methods, automatic chunk movement is scheduled

in the background, which is intended to balance the number of chunks distributed

among the shards now in the configuration. This process can be monitored using the

GDSCTL CONFIG CHUNKScommand after theDEPLOYcommand returns control toGDSCTL.

What Does a Successful Deployment Look Like?

Following a successful deployment, the output from CONFIG SHARD should

look similar to the following, if Data Guard active standby shards are in use.

GDSCTL> config shard

Name Shard Group Status State Region Availability

--------- ------------------- ------- -------- ------- ------------

cdb1_pdb1 shardgroup_primary Ok Deployed region1 ONLINE

cdb2_pdb1 shardgroup_standby Ok Deployed region2 READ ONLY

cdb3_pdb2 shardgroup_primary Ok Deployed region1 ONLINE

cdb4_pdb2 shardgroup_standby Ok Deployed region2 READ ONLY

If mounted, non-open standbys are in use, the output will be similar to the following, because the shard director is unable to log in to check the status of a mounted database.

GDSCTL> config shard

Name Shard Group Status State Region Availability

--------- ------------------ ------------- -------- ------- ------------

cdb1_pdb1 shardgroup_primary Ok Deployed region1 ONLINE

cdb2_pdb1 shardgroup_standby Uninitialized Deployed region2 -

cdb3_pdb2 shardgroup_primary Ok Deployed region1 ONLINE

cdb4_pdb2 shardgroup_standby Uninitialized Deployed region2 -

What To Do If Something Is Not Right

If any shards are showing an availability of PENDING, confirm that all

steps related to ADD INVITEDNODE and CONFIG VNCR from

the topology configuration were completed. If not, complete them now and run

GDSCTL SYNC DATABASE -database shard_name to

complete shard deployment.

Parent topic: Sharded Database Deployment

Create and Start Global Database Services

After the shards are successfully deployed, and the correct status has been confirmed, create and start global database services on the shards to service incoming connection requests from your application.

As an example, the commands in the following examples create read-write services on the primary shards in the configuration and read-only services on the standby shards. These service names can then be used in connect strings from your application to appropriately route requests to the correct shards.

Example 4-1 Add and start a global service that runs on all of the primary shards

The following commands create and start a global service named

oltp_rw_srvc that a client can use to connect to the sharded

database. The oltp_rw_srvc service runs read/write transactions on

the primary shards.

GDSCTL> add service -service oltp_rw_srvc -role primary

GDSCTL> start service -service oltp_rw_srvc

Example 4-2 Add and start a global service for the read-only workload to run on the standby shards

The oltp_ro_srvc global service is created and started

to run read-only workloads on the standby shards. This assumes that the standby

shards are Oracle Active Data Guard standby shards which are open for read-only

access. Mounted, non-open standbys cannot service read-only connections, and exist

for disaster recovery and high availability purposes only.

GDSCTL> add service -service oltp_ro_srvc -role physical_standby

GDSCTL> start service -service oltp_ro_srvc

Example 4-3 Verify the status of the global services

GDSCTL> config service

Name Network name Pool Started Preferred all

---- ------------ ---- ------- -------------

oltp_rw_srvc oltp_rw_srvc.orasdb.oracdbcloud orasdb Yes Yes

oltp_ro_srvc oltp_ro_srvc.orasdb.oracdbcloud orasdb Yes Yes

GDSCTL> status service

Service "oltp_rw_srvc.orasdb.oradbcloud" has 2 instance(s). Affinity: ANYWHERE

Instance "orasdb%1", name: "cdb1_pdb1", db: "cdb1_pdb1", region: "region1", status: ready.

Instance "orasdb%21", name: "cdb3_pdb2", db: "cdb3_pdb2", region: "region1", status: ready.

Service "oltp_ro_srvc.orasdb.oradbcloud" has 2 instance(s). Affinity: ANYWHERE

Instance "orasdb%11", name: "cdb2_pdb1", db: "cdb2_pdb1", region: "region2", status: ready.

Instance "orasdb%31", name: "cdb4_pdb2", db: "cdb4_pdb2", region: "region2", status: ready.

Parent topic: Sharded Database Deployment

Verify Shard Status

Once you complete the DEPLOY step in your sharding configuration deployment, verify the detailed status of a shard

Parent topic: Sharded Database Deployment

Example Sharded Database Deployment

This example explains how to deploy a typical system-managed sharded database with multiple replicas, using Oracle Data Guard for high availability.

To deploy a system-managed sharded database you create shardgroups

and shards, create and configure the databases to be used as shards, execute

the DEPLOY command, and create role-based global

services.

You are not required to map data to shards in system-managed sharding, because the data is automatically distributed across shards using partitioning by consistent hash. The partitioning algorithm evenly and randomly distributes data across shards. For more conceptual information about the system-managed sharded Database, see System-Managed Sharding.

- Example Sharded Database Topology

Consider the following system-managed sharded database configuration, where shardgroup sg1 contains the primary shards, while shardgroups sg2 and sg3 contain standby replicas. - Deploy the Example Sharded Database

Do the following steps to deploy the example system-managed sharded database with multiple replicas, using Oracle Data Guard for high availability.

Parent topic: Sharded Database Deployment

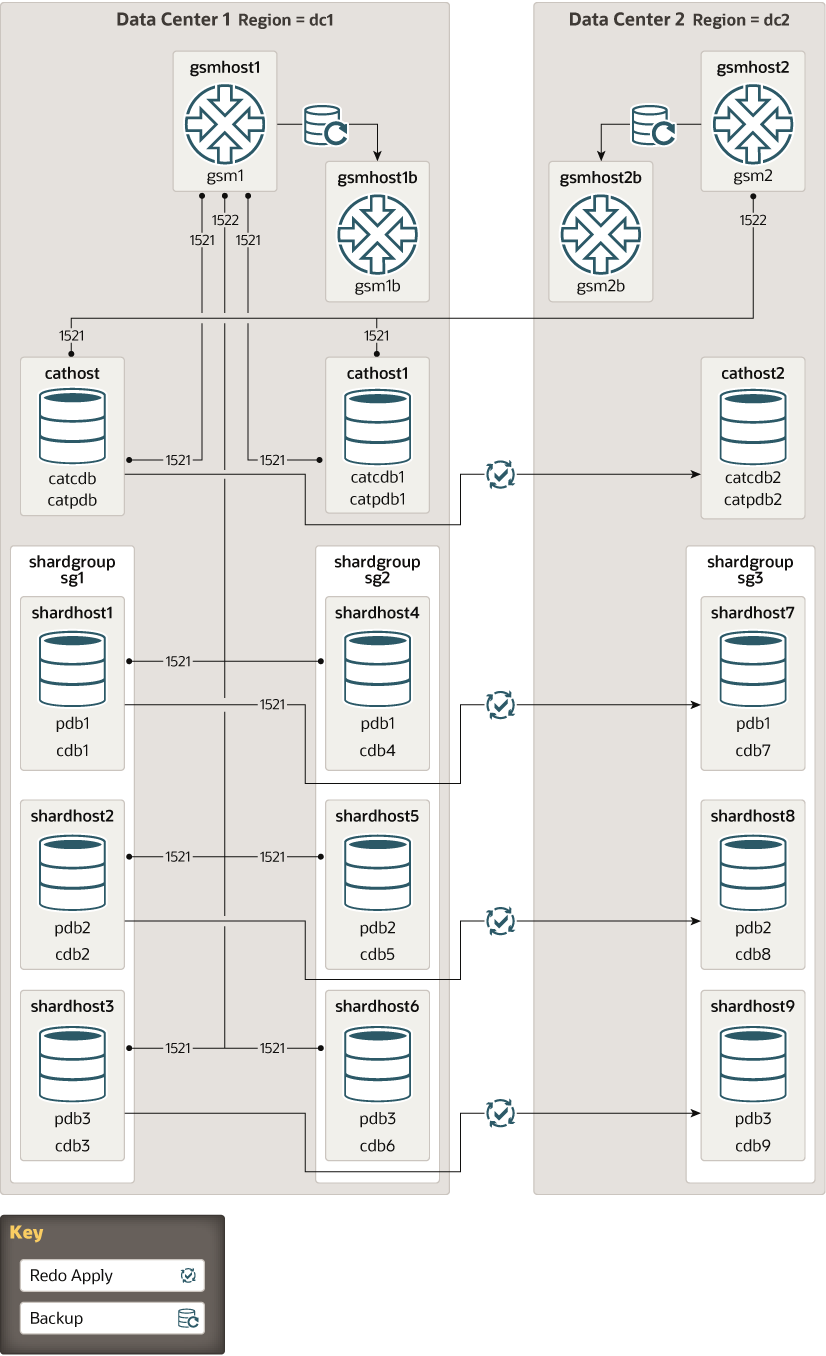

Example Sharded Database Topology

Consider the following system-managed sharded database configuration, where shardgroup sg1 contains the primary shards, while shardgroups sg2 and sg3 contain standby replicas.

In addition, let’s assume that the replicas in shardgroup sg2 are Oracle Active Data Guard standbys (that is, databases open for read-only access), while the replicas in shardgroup sg3 are mounted databases that have not been opened.

Table 4-1 Example System-Managed Topology Host Names

| Topology Object | Description |

|---|---|

| Shard Catalog Database |

Every sharded database topology requires a shard catalog. In our example, the shard catalog database has 2 standbys, one in each data center. Primary

Active Standby

Standby

|

| Regions |

Because there are two datacenters involved in this configuration, there are two corresponding regions created in the shard catalog database. Data center 1

Data center 2

|

| Shard Directors (global service managers) |

Each region requires a shard director running on a host within that data center. Data center 1

Data center 2

|

| Shardgroups |

Data center 1

Data center 2

|

| Shards |

|

Parent topic: Example Sharded Database Deployment

Deploy the Example Sharded Database

Do the following steps to deploy the example system-managed sharded database with multiple replicas, using Oracle Data Guard for high availability.

Parent topic: Example Sharded Database Deployment