12 Sharded Database Administration

Oracle Sharding provides tools and some automation for the administration of a sharded database.

Note:

A multitenant container database is the only supported architecture in Oracle Database 21c. While the documentation is being revised, legacy terminology may persist. In most cases, "database" and "non-CDB" refer to a CDB or PDB, depending on context. In some contexts, such as upgrades, "non-CDB" refers to a non-CDB from a previous release.

The following topics describe sharded database administration in detail:

- Managing the Sharding-Enabled Stack

- Managing Oracle Sharding Database Users

- Backing Up and Recovering a Sharded Database

The GDSCTL utility lets you define a backup policy for a sharded database and restore one or more shards, or the entire sharded database, to the same point in time. Configured backups are run automatically, and you can define a schedule to run backups during off-peak hours.

- Monitoring a Sharded Database

Sharded databases can be monitored using Enterprise Manager Cloud Control or GDSCTL.

- Propagation of Parameter Settings Across Shards

When you configure system parameter settings at the shard catalog, they are automatically propagated to all shards of the sharded database.

- Modifying a Sharded Database Schema

When making changes to duplicated tables or sharded tables in a sharded database, these changes should be done from the shard catalog database.

- Managing Sharded Database Software Versions

- Shard Management

You can manage shards in your Oracle Sharding deployment with Oracle Enterprise Manager Cloud Control and GDSCTL.

- Chunk Management

You can manage chunks in your Oracle Sharding deployment with Oracle Enterprise Manager Cloud Control and GDSCTL.

- Shard Director Management

You can add, edit, and remove shard directors in your Oracle Sharding deployment with Oracle Enterprise Manager Cloud Control.

- Region Management

You can add, edit, and remove regions in your Oracle Sharding deployment with Oracle Enterprise Manager Cloud Control.

- Shardspace Management

You can add, edit, and remove shardspaces in your Oracle Sharding deployment with Oracle Enterprise Manager Cloud Control.

- Shardgroup Management

You can add, edit, and remove shardgroups in your Oracle Sharding deployment with Oracle Enterprise Manager Cloud Control.

- Services Management

You can manage services in your Oracle Sharding deployment with Oracle Enterprise Manager Cloud Control.

Managing the Sharding-Enabled Stack

This section describes the startup and shutdown of components in the sharded database configuration. It contains the following topics:

- Starting Up the Sharding-Enabled Stack

- Shutting Down the Sharding-Enabled Stack

Starting Up the Sharding-Enabled Stack

The following is the recommended startup sequence of the sharding-enabled stack:

- Start the shard catalog database and local listener.

- Start the shard directors (GSMs).

- Start up the shard databases and local listeners.

- Start the global services.

- Start the connection pools and clients.

Shutting Down the Sharding-Enabled Stack

The following is the recommended shutdown sequence of the sharding-enabled stack:

- Shut down the connection pools and clients.

- Stop the global services.

- Shut down the shard databases and local listeners.

- Stop the shard directors (GSMs).

- Stop the shard catalog database and local listener.
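The two sequences above mirror each other: the shutdown order is the startup order reversed. The following is a minimal Python sketch of that relationship, useful for example when an operations script drives both procedures from one list. The names `STARTUP_ORDER` and `shutdown_order` are illustrative and are not part of any Oracle tool.

```python
# Recommended startup order of the sharding-enabled stack, as listed above.
STARTUP_ORDER = [
    "shard catalog database and local listener",
    "shard directors (GSMs)",
    "shard databases and local listeners",
    "global services",
    "connection pools and clients",
]


def shutdown_order(startup=STARTUP_ORDER):
    """Return the shutdown sequence, which is the startup sequence reversed."""
    return list(reversed(startup))


if __name__ == "__main__":
    # Print the shutdown sequence as numbered steps.
    for step, component in enumerate(shutdown_order(), start=1):
        print(f"{step}. {component}")
```

Keeping a single list as the source of truth avoids the two procedures drifting apart when a component is added to the stack.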

Managing Oracle Sharding Database Users

This section describes the database users specific to Oracle Sharding. It contains the following topics:

- About the GSMUSER Account

The GSMUSER account is used by GDSCTL and shard directors (global service managers) to connect to databases in an Oracle Sharding configuration.

- About the GSMROOTUSER Account

GSMROOTUSER is a database account specific to Oracle Sharding that is only used when pluggable database (PDB) shards are present. The account is used by GDSCTL and global service managers to connect to the root container of container databases (CDBs) to perform administrative tasks.

About the GSMUSER Account

The GSMUSER account is used by GDSCTL and shard directors (global service managers) to connect to databases in an Oracle Sharding configuration.

This account needs to be unlocked in both the CDB and the PDB.

GSMUSER exists by default on any Oracle database. In an Oracle Sharding configuration, the account is used to connect to shards instead of pool databases, and it must be granted both the SYSDG and SYSBACKUP system privileges after the account has been unlocked.

The password given to the GSMUSER account is used in the gdsctl add shard command. Failure to grant SYSDG and SYSBACKUP to GSMUSER on a new shard causes gdsctl add shard to fail with an ORA-1031: insufficient privileges error.

About the GSMROOTUSER Account

GSMROOTUSER is a database account specific to Oracle Sharding that is only used when pluggable database (PDB) shards are present. The account is used by GDSCTL and global service managers to connect to the root container of container databases (CDBs) to perform administrative tasks.

If PDB shards are not in use, the GSMROOTUSER user should not be unlocked or assigned a password on any database. However, in sharded configurations containing PDB shards, GSMROOTUSER must be unlocked and granted the SYSDG and SYSBACKUP privileges before a gdsctl add cdb command can run successfully. If desired, the password for the GSMROOTUSER account can be changed after deployment by using the alter user SQL command in the root container of the CDB in combination with the gdsctl modify cdb -pwd command.

Backing Up and Recovering a Sharded Database

The GDSCTL utility lets you define a backup policy for a sharded database and restore one or more shards, or the entire sharded database, to the same point in time. Configured backups are run automatically, and you can define a schedule to run backups during off-peak hours.

Enhancements to GDSCTL in Oracle Database 21c enable and simplify the centralized management of backup policies for a sharded database, using Oracle MAA best practices. You can create a backup schedule using an incremental scheme that leverages the Oracle Job Scheduler. Oracle Recovery Manager (RMAN) performs the actual backup and restore operations.

Using the GDSCTL centralized backup and restore operations, you can configure backups, monitor backup status, list backups, validate backups, and restore from backups.

There are two types of backups: automated backups and on-demand backups. Automated backups are started by DBMS Scheduler jobs based on the job schedules, and they run in the background on the database servers. On-demand backups are started by users from GDSCTL.

Internally, the on-demand backups are also started by DBMS Scheduler jobs on the database servers. The jobs are created on the fly when the on-demand backup commands are issued. They are temporary jobs and are automatically dropped after the backups have finished.

Sharded database structural changes such as chunk move are built in to the backup and restore policy.

Supported Backup Destinations

Backups can be saved to a common disk/directory structure (NFS mount) which can be located anywhere, including the shard catalog database host.

Terminology

The following is some terminology you will encounter in the backup and restore procedures described here.

- Target database - A database that RMAN is to back up.

- Global SCN - A common point in time for all target databases for which a restore of the entire sharded database is supported. A restore point is taken at this global SCN, and the restore point is the point to which the sharded database (including the shard catalog) can be restored.

  Note that you are not prohibited from restoring the shard catalog or a specific shard to an arbitrary point in time. However, doing so may put that target in an inconsistent state with the rest of the sharded database and you may need to take corrective action outside of the restore operation.

- Incremental backup - Captures block-level changes to a database made after a previous incremental backup.

- Level 0 incremental backup (level 0 backup) - The incremental backup strategy starting point, which backs up all blocks in the database. This backup is identical in content to a full backup; however, unlike a full backup, the level 0 backup is considered a part of the incremental backup strategy.

- Level 1 incremental backup (level 1 backup) - A level 1 incremental backup contains only blocks changed after a previous incremental backup. If no level 0 backup exists in either the current or parent database incarnation and you run a level 1 backup, then RMAN takes a level 0 backup automatically. A level 1 incremental backup can be either cumulative or differential.

Limitations

Note the following limitations for this version of Oracle Sharding backup and restore using GDSCTL.

- Microsoft Windows is not supported.

- Oracle GoldenGate replicated databases are not supported.

- You must provide for backup of the Clusterware Repository if Clusterware is deployed.

- Prerequisites to Configuring Centralized Backup and Restore

Before configuring backup for a sharded database, make sure the following prerequisites are met.

- Configuring Automated Backups

Use the GDSCTL CONFIG BACKUP command to configure automated sharded database backups.

- Enabling and Disabling Automated Backups

You can enable or disable backups on all shards, or specific shards, shardspaces, or shardgroups.

- Backup Job Operation

Once configured and enabled, backup jobs run on the primary shard catalog database and the primary shards as scheduled.

- Monitoring Backup Status

There are a few different ways to monitor the status of automated and on-demand backup jobs.

- Viewing an Existing Backup Configuration

When GDSCTL CONFIG BACKUP is not provided with any parameters, it shows the current backup configuration.

- Running On-Demand Backups

The GDSCTL RUN BACKUP command lets you start backups for the shard catalog database and a list of shards.

- Viewing Backup Job Status

Use GDSCTL command STATUS BACKUP to view the detailed state of the scheduled backup jobs in the specified shards. Command output includes the job state (enabled or disabled) and the job run details.

- Listing Backups

Use GDSCTL LIST BACKUP to list backups usable to restore a sharded database or a list of shards to a specific global restore point.

- Validating Backups

Run the GDSCTL VALIDATE BACKUP command to validate sharded database backups against a specific global restore point for a list of shards. The validation confirms that the backups to restore the databases to the specified restore point are available and not corrupted.

- Deleting Backups

Use the GDSCTL DELETE BACKUP command to delete backups from the recovery repository.

- Creating and Listing Global Restore Points

A restore point for a sharded database, which we call a global restore point, actually maps to a set of normal restore points in the individual primary databases in a sharded database.

- Restoring From Backup

The GDSCTL RESTORE BACKUP command lets you restore sharded database shards to a specific global restore point.

Prerequisites to Configuring Centralized Backup and Restore

Before configuring backup for a sharded database, make sure the following prerequisites are met.

- Create a recovery catalog in a dedicated database.

  Before you can back up or restore a sharded database using GDSCTL, you must have access to a recovery catalog created in a dedicated database. This recovery catalog serves as a centralized RMAN repository for the shard catalog database and all of the shard databases.

  Note the following:

  - The version of the recovery catalog schema in the recovery catalog database must be compatible with the sharded database version because RMAN has compatibility requirements for the RMAN client, the target databases, and the recovery catalog schema. For more information, see Oracle Database Backup and Recovery Reference, cross-referenced below.

  - The recovery catalog must not share a host database with the shard catalog because the shard catalog database is one of the target databases in the sharded database backup configuration, and RMAN does not allow the recovery catalog to reside in a target database.

  - It is recommended that you back up the recovery catalog periodically, following appropriate best practices.

  - The shard catalog database and all of the shard databases must be configured to use the same recovery catalog.

- Configure backup destinations for the shard catalog database and all of the shard databases.

  The backup destination types are either DISK or system backup to tape. The supported DISK destinations are NFS and Oracle ASM file systems.

  System backup to tape destinations require additional software modules to be installed on the database host. They must be properly configured to work with RMAN.

  If the shard catalog database or the shard databases are in Data Guard configurations, you can choose to back up either the primary or standby databases.

- RMAN connects to the target databases as specific internal users to do database backup and restore, with the exception of the shard catalog.

  For the shard catalog, a common user in the CDB hosting the shard catalog PDB must be provided at the time when sharded database backup is configured. This user must be granted the SYSDG and SYSBACKUP privileges. If the CDB is configured to use local undo for its PDBs, the SYSBACKUP privilege must also be granted commonly.

  For the shard databases, the internal CDB common user, GSMROOTUSER, is used. This user must be unlocked in the shard CDB root databases and granted the SYSBACKUP privilege in addition to other privileges that the sharded database requires for GSMROOTUSER. If the CDB is configured to use local undo for its PDBs, the SYSBACKUP privilege must be granted commonly to GSMROOTUSER, meaning the CONTAINER=ALL clause must be used when granting the SYSBACKUP privilege.

- All of the GDSCTL commands for sharded database backup and restore operations require the shard catalog database to be open. If the shard catalog database itself must be restored, you must manually restore it.

- You are responsible for offloading backups to tape or other long-term storage media and following the appropriate data retention best practices.

Configuring Automated Backups

Use the GDSCTL CONFIG BACKUP command to configure automated sharded database backups.

You should connect to a shard director (GSM) host to run the GDSCTL backup commands. If the commands are run from elsewhere, you must explicitly connect to the shard catalog database using the GDSCTL CONNECT command.

When you run the GDSCTL backup configuration, you can provide the following inputs.

- A list of databases.

  The databases are the shard catalog database and shard databases. Backup configuration requires that the primary databases of the specified databases be open for read and write, but the standby databases can be mounted or open.

  If a database is in a Data Guard configuration when it is configured for backup, all of the databases in the Data Guard configuration are configured for backup. For a shard in a Data Guard configuration, you must provide the backup destinations and start times for the primary and all of the standby shards.

  This is different for the shard catalog database. The shard catalog database and all the shard catalog standby databases share a backup destination and a start time.

- A connect string to the recovery catalog database.

  For the connect string you need a user account with privileges for RMAN, such as the RECOVERY_CATALOG_OWNER role.

- RMAN backup destination parameters.

  These parameters include backup device and channel configurations. Different backup destinations can be used for different shards.

  Note the following:

  - Backup destinations for shards in a Data Guard configuration must be properly defined to ensure that the backups created from standby databases can be used to restore the primary database and conversely. See "Using RMAN to Back Up and Restore Files" in Oracle Data Guard Concepts and Administration for Data Guard RMAN support.

  - The same destination specified for the shard catalog database is used as the backup destination for the shard catalog standby databases.

  - For system backup to tape devices, RMAN needs the media managers for the specific devices in order to create channels that read and write data to the devices. The media manager must be installed and properly configured.

- Backup target type.

  Backup target type defines whether the backups for the shard catalog database and shards should be done at the primary or one of the standby databases. It can be either PRIMARY or STANDBY. The default backup target type is STANDBY. For the shard catalog database or shards that are not in Data Guard configurations, the backups will be done on the shard catalog database or the shards themselves even when the backup target type is STANDBY.

- Backup retention policy.

  The backup retention policy specifies a database recovery window for the backups. It is specified as a number of days.

  Obsolete backups are not deleted automatically, but a GDSCTL command is provided for you to manually delete them.

- Backup schedule.

  Backup schedules specify the automated backup start time and repeat intervals for the level 0 and level 1 incremental backups. Different automated backup start times can be used for the shard catalog database and individual shards. The time is a local time in the time zone in which the shard catalog database or shard is located. The backup repeat intervals for the level 0 and level 1 incremental backups are the same for the shard catalog database and all the shards in the sharded database.

- CDB root database connect string for the shard catalog database.

  The provided user account must have the common SYSBACKUP privilege in the provided CDB.
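The backup target type rule in the list above has one non-obvious case: STANDBY is the default, yet a database with no Data Guard configuration is still backed up on itself. The following Python sketch restates that rule as a small decision function. The function name `resolve_backup_target` and its return labels are illustrative assumptions and do not correspond to any GDSCTL internals.

```python
def resolve_backup_target(configured_type="STANDBY", in_data_guard=False):
    """Decide where a backup runs, following the documented rule.

    configured_type: "PRIMARY" or "STANDBY" (STANDBY is the documented default).
    in_data_guard:   True if the database is in a Data Guard configuration.

    Returns "standby" when a standby is requested and available. Otherwise it
    returns "primary", which for a database outside Data Guard simply means
    the database itself.
    """
    kind = configured_type.upper()
    if kind not in ("PRIMARY", "STANDBY"):
        raise ValueError(f"unknown backup target type: {configured_type!r}")
    if kind == "STANDBY" and in_data_guard:
        return "standby"
    return "primary"


if __name__ == "__main__":
    print(resolve_backup_target("STANDBY", in_data_guard=True))
    print(resolve_backup_target("STANDBY", in_data_guard=False))
```

The sketch does not model which standby is chosen when several exist, because the text above does not specify that.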

When no parameters are provided for the CONFIG BACKUP command, GDSCTL displays the current sharded database backup configuration. If the backup has not been configured yet when the command is used to show the backup configuration, it displays that the backup is not configured.

To configure a backup, run GDSCTL CONFIG BACKUP as shown in the following example. For complete syntax, command options, and usage notes, run HELP CONFIG BACKUP.

The following example configures one backup channel of type DISK for the shard catalog database and two parallel channels of type DISK for each of the shards (shardspaces dbs1 and dbs2 are used in the shard list). It sets the backup retention window to 14 days, the level 0 and level 1 incremental backup repeat intervals to 7 days and 1 day, and the backup start time to 12:00 AM. The incremental backup type is left at the default, DIFFERENTIAL, and the backup target type at the default, STANDBY.

GDSCTL> config backup -rccatalog rccatalog_connect_string
  -destination "CATALOG::configure channel device type disk format '/tmp/rman/backups/%d_%U'"
  -destination "dbs1,dbs2:configure device type disk parallelism 2:configure channel 1 device type disk format '/tmp/rman/backups/1/%U';configure channel 2 device type disk format '/tmp/rman/backups/2/%U'"
  -starttime ALL:00:00 -retention 14 -frequency 7,1 -catpwd gsmcatuser_password -cdb catcdb_connect_string;

Once GDSCTL has the input, it displays output similar to the following, pertaining to the current status of the configuration operation.

Configuring backup for database "v1908" ...

Updating wallet ...

The operation completed successfully

Configuring RMAN ...

new RMAN configuration parameters:

CONFIGURE CHANNEL DEVICE TYPE DISK FORMAT '/tmp/rman/backups/%d_%u';

new RMAN configuration parameters are successfully stored

starting full resync of recovery catalog

full resync complete

new RMAN configuration parameters:

CONFIGURE BACKUP OPTIMIZATION ON;

new RMAN configuration parameters are successfully stored

starting full resync of recovery catalog

full resync complete

...

Creating RMAN backup scripts ...

replaced global script full_backup

replaced global script incremental_backup

...

Creating backup scheduler jobs ...

The operation completed successfully

Creating restore point creation job ...

The operation completed successfully

Configuring backup for database "v1908b" ...

Updating wallet ...

The operation completed successfully

Configuring RMAN ...

new RMAN configuration parameters:

CONFIGURE DEVICE TYPE DISK PARALLELISM 2 BACKUP TYPE TO BACKUPSET;

new RMAN configuration parameters are successfully stored

starting full resync of recovery catalog

full resync complete

new RMAN configuration parameters:

CONFIGURE CHANNEL 1 DEVICE TYPE DISK FORMAT '/tmp/rman/backups/1/%u';

new RMAN configuration parameters are successfully stored

starting full resync of recovery catalog

full resync complete

...

Configuring backup for database "v1908d" ...

Updating wallet ...

The operation completed successfully

Configuring RMAN ...

...

Recovery Manager complete.

As shown in the CONFIG BACKUP command output above, GDSCTL does the following steps.

- GDSCTL updates the shard wallets.

  The updated wallets will contain:

  - Connect string and authentication credentials to the RMAN catalog database.

  - Connect string and authentication credentials to the RMAN TARGET database.

  - Automated backup target type and start time.

- GDSCTL sets up the RMAN backup environment for the database.

  This includes the following tasks.

  - Registering the database as a target in the recovery catalog.

  - Setting up backup channels.

  - Setting up backup retention policies.

  - Enabling control file and server parameter file auto-backup.

  - Enabling block change tracking for all the target databases.

- On the shard catalog, GDSCTL creates global RMAN backup scripts for level 0 and level 1 incremental backups.

- On the shard catalog, GDSCTL creates a global restore point creation job.

- On the shard catalog and each of the primary databases, GDSCTL:

  - Creates DBMS Scheduler database backup jobs for level 0 and level 1 incremental backups.

  - Schedules the jobs based on the backup repeat intervals you configure.
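The -destination values in the CONFIG BACKUP example earlier in this topic appear to follow a three-field layout separated by colons: a comma-separated shard list, an optional device configuration, and one or more channel configurations separated by semicolons. That layout is inferred only from the example; run HELP CONFIG BACKUP for the authoritative syntax. Under that assumption, the following Python sketch splits a value into its parts, which can help when reviewing long destination strings. The function name `parse_destination` is illustrative.

```python
def parse_destination(value):
    """Split one -destination value into (shards, device_config, channels).

    Assumed layout, inferred from the example in this topic:
        shard_list:device_config:channel_config[;channel_config...]
    Only the first two colons are treated as separators, so a colon inside
    the channel configuration is preserved. A semicolon inside a quoted
    format string is NOT handled by this simple sketch.
    """
    parts = value.split(":", 2)
    if len(parts) != 3:
        raise ValueError(f"expected three ':'-separated fields, got {len(parts)}")
    shard_list, device_config, channel_part = parts
    shards = [name.strip() for name in shard_list.split(",") if name.strip()]
    channels = [cfg.strip() for cfg in channel_part.split(";") if cfg.strip()]
    return shards, device_config.strip(), channels


if __name__ == "__main__":
    example = (
        "dbs1,dbs2:configure device type disk parallelism 2:"
        "configure channel 1 device type disk format '/tmp/rman/backups/1/%U';"
        "configure channel 2 device type disk format '/tmp/rman/backups/2/%U'"
    )
    shards, device, channels = parse_destination(example)
    print(shards)
    print(device)
    for channel in channels:
        print(channel)
```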

Enabling and Disabling Automated Backups

You can enable or disable backups on all shards, or specific shards, shardspaces, or shardgroups.

All backup jobs are initially disabled. They can be enabled by running the GDSCTL ENABLE BACKUP command.

GDSCTL> ENABLE BACKUP

When no shards are specified, ENABLE BACKUP enables the backup on all shards. You can optionally list specific shards, shardspaces, or shardgroups on which to enable the backup.

GDSCTL> ENABLE BACKUP -shard dbs1

The DISABLE BACKUP command disables an enabled backup.

GDSCTL> DISABLE BACKUP -shard dbs1

Backup Job Operation

Once configured and enabled, backup jobs run on the primary shard catalog database and the primary shards as scheduled.

After a backup job is configured, it is initially disabled. You must enable a backup job for it to run as scheduled. Use the GDSCTL commands ENABLE BACKUP and DISABLE BACKUP to enable or disable the jobs.

Backup jobs are scheduled based on the backup repeat intervals you configure for the level 0 and level 1 incremental backups, and the backup start time for the shard catalog database and the shards.

Two separate jobs are created for level 0 and level 1 incremental backups. The names of the jobs are AUTOMATED_SDB_LEVEL0_BACKUP_JOB and AUTOMATED_SDB_LEVEL1_BACKUP_JOB. Full logging is enabled for both jobs.

When running, the backup jobs find the configured backup target type (PRIMARY or STANDBY), determine the correct target databases based on the backup target type, and then launch RMAN to back up the target databases. RMAN uses the shard wallets updated during the backup configuration for database connection authentication.

Note that sharded database chunk moves do not delay automated backups.

Monitoring Backup Status

There are a few different ways to monitor the status of automated and on-demand backup jobs.

Monitoring an Automated Backup Job

Because full logging is enabled for the automated backup jobs, DBMS Scheduler writes job execution details in the job log and views. The Scheduler job log and views are your basic resources and starting point for monitoring the automated backups. Note that although DBMS Scheduler makes a list of job state change events available for email notification subscription, this capability is not used for sharded database automated backups.

You can use the GDSCTL command LIST BACKUP to view the backups and find out whether backups are created at the configured backup job repeat intervals.

Automated backups are not delayed by chunk movement in the sharded database, so the backup creation times should be close to the configured backup repeat intervals and the backup start time.

Monitoring an On-Demand Backup Job

Internally, on-demand backup jobs are also started by DBMS Scheduler jobs on the database servers. The names of the temporary jobs are prefixed with the tag MANUAL_BACKUP_JOB_. On-demand backups always run in the same session that GDSCTL uses to communicate with the database server. Failures from the job execution are sent directly to the client.

Using DBMS Scheduler Jobs Views

The automated backup jobs only run on the primary shard catalog database and the primary shards. To check the backup job execution details for a specific target database, use SQL*Plus to connect to the database, or to its primary database if the database is in a Data Guard configuration, and query the DBMS Scheduler views *_SCHEDULER_JOB_LOG and *_SCHEDULER_JOB_RUN_DETAILS based on the job names.

The names of the two automated backup jobs are AUTOMATED_SDB_LEVEL0_BACKUP_JOB and AUTOMATED_SDB_LEVEL1_BACKUP_JOB.

You can also use the GDSCTL command STATUS BACKUP to retrieve the job state and run details from these views. See Viewing Backup Job Status for more information about running STATUS BACKUP.

The job views only contain high level information about the job execution. For job failure diagnosis, you can find more details about the job execution in the RDBMS trace files by grepping the job names.

If no errors are found in the job execution, but still no backups have been created, you can find the PIDs of the processes that the jobs have created to run RMAN for the backups in the trace files, and then look up useful information in the trace files associated with the PIDs.
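As an illustration of querying the Scheduler views named in this subsection, the following Python sketch builds a SQL statement and bind values that select run details for the two automated backup jobs. It only constructs the query text; running it requires a database driver and a connection, which are outside this sketch. The helper name `run_details_query`, the choice of the DBA_ view prefix, and the selected columns (JOB_NAME, STATUS, ACTUAL_START_DATE, SLAVE_PID) are assumptions made for the example, and the schema that owns the jobs is not stated in this document.

```python
# Job names taken from this document.
AUTOMATED_JOBS = (
    "AUTOMATED_SDB_LEVEL0_BACKUP_JOB",
    "AUTOMATED_SDB_LEVEL1_BACKUP_JOB",
)


def run_details_query(job_names=AUTOMATED_JOBS, view_prefix="DBA"):
    """Return (sql, binds) selecting run details for the given job names.

    view_prefix picks which *_SCHEDULER_JOB_RUN_DETAILS view to read:
    DBA, ALL, or USER. Job names are passed as bind variables rather than
    concatenated into the SQL text.
    """
    if view_prefix not in ("DBA", "ALL", "USER"):
        raise ValueError(f"unsupported view prefix: {view_prefix!r}")
    binds = {f"job{i}": name for i, name in enumerate(job_names)}
    placeholders = ", ".join(f":{key}" for key in binds)
    sql = (
        "SELECT job_name, status, actual_start_date, slave_pid "
        f"FROM {view_prefix}_SCHEDULER_JOB_RUN_DETAILS "
        f"WHERE job_name IN ({placeholders}) "
        "ORDER BY actual_start_date"
    )
    return sql, binds


if __name__ == "__main__":
    sql, binds = run_details_query()
    print(sql)
    print(binds)
```

The process IDs returned in the SLAVE_PID column can then serve as the starting point for the trace file lookup described above.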

Using Backup Command Output

This option is only available for on-demand backups.

When you start on-demand backups with GDSCTL RUN BACKUP, you can specify the -sync command option. This forces all backup tasks to run in the foreground, and the output from the internally launched RMAN on the database servers is displayed in the GDSCTL console.

The downside of running the backup tasks in the foreground is that the tasks will be run in sequence, therefore the whole backup will take more time to complete.

See the GDSCTL reference in Oracle Database Global Data Services Concepts and Administration Guide for detailed command syntax and options.

Viewing an Existing Backup Configuration

When GDSCTL CONFIG BACKUP is not provided with any parameters, it shows the current backup configuration.

Because the parameters -destination and -starttime can appear more than once in the CONFIG BACKUP command line for different shards, and backup configuration can be done more than once, multiple items can be listed in each of the Backup destinations and Backup start times sections. The items are listed in the order in which they were specified on the CONFIG BACKUP command line, and in the order in which the command was repeatedly run.

To view an existing backup configuration, run CONFIG BACKUP, as shown here.

GDSCTL> CONFIG BACKUP;

If a sharded database backup has not been configured yet, the command output will indicate it. Otherwise the output looks like the following:

GDSCTL> config backup

Recovery catalog database user: rcadmin

Recovery catalog database connect descriptor: (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=den02qxr)(PORT=1521))(CONNECT_DATA=(SERVICE_NAME=cdb6_pdb1.example.com)))

Catalog database root container user: gsm_admin

Catalog database root container connect descriptor: (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=den02qxr)(PORT=1521))(CONNECT_DATA=(SERVICE_NAME=v1908.example.com)))

Backup retention policy in days: 14

Level 0 incremental backup repeat interval in minutes: 10080

Level 1 incremental backup repeat interval in minutes: 1440

Level 1 incremental backup type : DIFFERENTIAL

Backup target type: STANDBY

Backup destinations:

catalog::channel device type disk format '/tmp/rman/backups/%d_%u'

dbs1,dbs2:device type disk parallelism 2:channel 1 device type disk format '/tmp/rman/backups/1/%u';channel 2 device type disk format '/tmp/rman/backups/2/%u'

catalog::configure channel device type disk format '/tmp/rman/backups/%d_%u'

dbs1,dbs2:configure device type disk parallelism 2:configure channel 1 device type disk format '/tmp/rman/backups/1/%u';configure channel 2 device type disk format '/tmp/rman/backups/2/%u'

Backup start times:

all:00:00
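The output above reports the repeat intervals in minutes, whereas the configuration example in Configuring Automated Backups specified them in days with -frequency 7,1. The following Python sketch shows the arithmetic that relates the two: 7 days is 10080 minutes and 1 day is 1440 minutes. The function name `frequency_to_minutes` is illustrative and is not a GDSCTL feature.

```python
MINUTES_PER_DAY = 24 * 60


def frequency_to_minutes(frequency):
    """Convert a 'level0_days,level1_days' string into minutes.

    Example input: "7,1", as passed to -frequency in the configuration
    example. Returns a (level0_minutes, level1_minutes) tuple.
    """
    level0_days, level1_days = (int(part) for part in frequency.split(","))
    return level0_days * MINUTES_PER_DAY, level1_days * MINUTES_PER_DAY


if __name__ == "__main__":
    level0, level1 = frequency_to_minutes("7,1")
    print(f"Level 0 repeat interval in minutes: {level0}")
    print(f"Level 1 repeat interval in minutes: {level1}")
```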

Running On-Demand Backups

The GDSCTL RUN BACKUP command lets you start backups for the shard catalog database and a list of shards.

All on-demand backups are level 0 incremental backups. On-demand backups have no impact on the automated backup schedules configured for the shard catalog database and the shards.

Internally, on-demand backups are started by DBMS Scheduler jobs on the database servers. The jobs are created on the fly when the on-demand backup command RUN BACKUP is run.

On-demand backup jobs are temporary jobs, and they are automatically dropped after the backups have finished.

The names of the temporary jobs are prefixed with the tag MANUAL_BACKUP_JOB_.

To use RUN BACKUP, you must have already set up the backup configuration with the CONFIG BACKUP command.

The RUN BACKUP command requires the shard catalog database and any primary shards to be backed up to be open.

GDSCTL> RUN BACKUP -shard dbs1

The -shard option lets you specify a set of shards, shardspaces, or shardgroups on which to run the backup. To take an on-demand backup on shardspace dbs1, you can run RUN BACKUP as shown in the example above.

See the GDSCTL reference in Oracle Database Global Data Services Concepts and Administration Guide for detailed command syntax and options.

Viewing Backup Job Status

Use GDSCTL command STATUS BACKUP to view the detailed state of the scheduled backup jobs in the specified shards. Command output includes the job state (enabled or disabled) and the job run details.

By default, the command displays the details of all runs that the automated backup jobs have had in the specified shards over the past 30 days. To see job run details for a different period, use the -start_time and -end_time options.

Run STATUS BACKUP as shown in the following examples.

The following STATUS BACKUP command example lists the job state and all job run details from the SDB catalog and the primary shard “rdbmsb_cdb2_pdb1”:

GDSCTL> status backup -catpwd -shard catalog,rdbmsb_cdb2_pdb1;

"GSMCATUSER" password:***

Retrieving scheduler backup job status for database "rdbms" ...

Jobs:

Incremental Level 0 backup job is enabled

Job schedule start time: 2020-07-27 00:00:00.000 -0400

Job repeat interval: freq=daily;interval=1

Incremental Level 1 backup job is enabled

Job schedule start time: 2020-07-27 00:00:00.000 -0400

Job repeat interval: freq=minutely;interval=60

Global restore point create job is enabled

Job schedule start time: 2020-07-27 23:59:55.960 -0400

Job repeat interval: freq=hourly

Run Details:

Incremental Level 1 backup job status: SUCCEEDED

Job run actual start time: 2020-07-26 14:00:00.177 -0400

Job run slave process ID: 9023

Incremental Level 1 backup job status: SUCCEEDED

Job run actual start time: 2020-07-26 22:00:01.305 -0400

Job run slave process ID: 59526

…

Global restore point create job status: SUCCEEDED

Job run actual start time: 2020-07-27 15:28:37.603 -0400

Job run slave process ID: 44227

…

Global restore point create job status: SUCCEEDED

Job run actual start time: 2020-07-27 17:28:38.251 -0400

Job run slave process ID: 57611

Retrieving scheduler backup job status for database "rdbmsb_cdb2_pdb1" ...

Jobs:

Incremental Level 0 backup job is enabled

Job schedule start time: 2020-07-28 00:00:00.000 -0400

Job repeat interval: freq=daily;interval=1

Incremental Level 1 backup job is enabled

Job schedule start time: 2020-07-28 00:00:00.000 -0400

Job repeat interval: freq=minutely;interval=60

Run Details:

Incremental Level 1 backup job status: SUCCEEDED

Job run actual start time: 2020-07-26 14:00:00.485 -0400

Job run slave process ID: 9056

…

Incremental Level 1 backup job status: SUCCEEDED

Job run actual start time: 2020-07-27 14:33:42.702 -0400

Job run slave process ID: 9056

Incremental Level 0 backup job status: SUCCEEDED

Job run actual start time: 2020-07-27 00:00:01.469 -0400

Job run slave process ID: 75176

The following command lists the scheduler backup job state and the details of the job runs in the time frame from 2020/07/26 12:00:00 to 2020/07/27 00:00:00 from the SDB catalog and the primary shard “rdbmsb_cdb2_pdb1”:

GDSCTL> status backup -start_time "2020-07-26 12:00:00" -end_time "2020-07-27 00:00:00" -catpwd -shard catalog,rdbmsb_cdb2_pdb1;

"GSMCATUSER" password:***

Retrieving scheduler backup job status for database "rdbms" ...

Jobs:

Incremental Level 0 backup job is enabled

Job schedule start time: 2020-07-27 00:00:00.000 -0400

Job repeat interval: freq=daily;interval=1

Incremental Level 1 backup job is enabled

Job schedule start time: 2020-07-27 00:00:00.000 -0400

Job repeat interval: freq=minutely;interval=60

Global restore point create job is enabled

Job schedule start time: 2020-07-27 23:59:55.960 -0400

Job repeat interval: freq=hourly

Run Details:

Incremental Level 1 backup job status: SUCCEEDED

Job run actual start time: 2020-07-26 14:00:00.177 -0400

Job run slave process ID: 9023

…

Incremental Level 1 backup job status: SUCCEEDED

Job run actual start time: 2020-07-26 23:50:00.293 -0400

Job run slave process ID: 74171

Global restore point create job status: SUCCEEDED

Job run actual start time: 2020-07-26 14:28:38.263 -0400

Job run slave process ID: 11987

…

Global restore point create job status: SUCCEEDED

Job run actual start time: 2020-07-26 23:28:37.577 -0400

Job run slave process ID: 69451

Retrieving scheduler backup job status for database "rdbmsb_cdb2_pdb1" ...

Jobs:

Incremental Level 0 backup job is enabled

Job schedule start time: 2020-07-28 00:00:00.000 -0400

Job repeat interval: freq=daily;interval=1

Incremental Level 1 backup job is enabled

Job schedule start time: 2020-07-28 00:00:00.000 -0400

Job repeat interval: freq=minutely;interval=60

Run Details:

Incremental Level 1 backup job status: SUCCEEDED

Job run actual start time: 2020-07-26 14:00:00.485 -0400

Job run slave process ID: 9056

Incremental Level 1 backup job status: SUCCEEDED

Job run actual start time: 2020-07-26 22:11:50.931 -0400

Job run slave process ID: 9056
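Because the STATUS BACKUP output follows a regular layout, it can be scanned by a script. The following Python sketch parses the run details from text shaped like the output above and reports any run whose status is not SUCCEEDED. The FAILED status in the sample is an assumption for illustration (only SUCCEEDED appears in the examples above), and the function name is hypothetical.

```python
import re

# Patterns based on the output layout shown in the examples above.
DB_RE = re.compile(r'^Retrieving scheduler backup job status for database "([^"]+)"')
RUN_RE = re.compile(r'^(.+?) job status: (\S+)$')
START_RE = re.compile(r'^Job run actual start time: (.+)$')


def parse_run_details(text):
    """Return one dict per job run found in STATUS BACKUP style output."""
    runs = []
    database = None
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        match = DB_RE.match(line)
        if match:
            database, current = match.group(1), None
            continue
        match = RUN_RE.match(line)
        if match:
            current = {"database": database, "job": match.group(1),
                       "status": match.group(2), "start": None}
            runs.append(current)
            continue
        match = START_RE.match(line)
        if match and current is not None:
            current["start"] = match.group(1)
    return runs


# Sample text modeled on the output above; the FAILED run is hypothetical.
sample = """
Retrieving scheduler backup job status for database "rdbms" ...
Jobs:
Incremental Level 1 backup job is enabled
Run Details:
Incremental Level 1 backup job status: SUCCEEDED
Job run actual start time: 2020-07-26 14:00:00.177 -0400
Job run slave process ID: 9023
Retrieving scheduler backup job status for database "rdbmsb_cdb2_pdb1" ...
Run Details:
Incremental Level 0 backup job status: FAILED
Job run actual start time: 2020-07-27 00:00:01.469 -0400
"""

runs = parse_run_details(sample)
failed = [run for run in runs if run["status"] != "SUCCEEDED"]
for run in failed:
    print(run["database"], run["job"], run["start"])
```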


Listing Backups

Use GDSCTL LIST BACKUP to list backups usable to restore a sharded database or a list of shards to a specific global restore point.

The command requires the shard catalog database to be open, but the shards can be in any of the started states: nomount, mount, or open.

You can specify a list of shards to list backups for in the command. You can also list backups usable to restore the control files of the listed databases and list backups for standby shards.

The following example shows the use of the command to list the backups from shard cdb2_pdb1 recoverable to restore point BACKUP_BEFORE_DB_MAINTENANCE.

GDSCTL> LIST BACKUP -shard cdb2_pdb1 -restorepoint BACKUP_BEFORE_DB_MAINTENANCE

If option -controlfile is used, LIST BACKUP lists only the backups usable to restore the control files of the specified shards.

If option -summary is used, the backups are listed in a summary format.

GDSCTL> list backup -shard cat1,cat2 -controlfile -summary

Validating Backups

Run the GDSCTL VALIDATE BACKUP command to validate sharded database backups against a specific global restore point for a list of shards. The validation confirms that the backups to restore the databases to the specified restore point are available and not corrupted.

The shard catalog database must be open, but the shard databases can be either mounted or open. If the backup validation is for database control files, the shards can be started nomount.

The following example validates the backups of the control files from the shard catalog databases recoverable to restore point BACKUP_BEFORE_DB_MAINTENANCE.

GDSCTL> VALIDATE BACKUP -shard cat1,cat2 -controlfile -restorepoint BACKUP_BEFORE_DB_MAINTENANCE

Backup validation is done sequentially, one shard at a time.


Deleting Backups

Use the GDSCTL DELETE BACKUP command to delete backups from the recovery repository.

The DELETE BACKUP command deletes the sharded database backups identified with specific tags from the recovery repository. It deletes the records in the recovery database for the backups identified with the provided tags, and, if the media where the files are located is accessible, the physical files from the backup sets from those backups. This is done for each of the target databases. You will be prompted to confirm before the actual deletion starts.

To run this command, the shard catalog database must be open, but the shard databases can be either mounted or open.

The following is an example of deleting backups with tag ODB_200414205057124_0400 from shard cdb2_pdb1.

GDSCTL> DELETE BACKUP -shard cdb2_pdb1 -tag ODB_200414205057124_0400

"GSMCATUSER" password:

This will delete identified backups, would you like to continue [No]?y

Deleting backups for database "cdb2_pdb1" ...

Creating and Listing Global Restore Points

A restore point for a sharded database, called a global restore point, maps to a set of normal restore points in the individual primary databases of the sharded database.

These restore points are created at a common SCN across all of the primary databases in the sharded database. The restore points created in the primary databases are automatically replicated to the Data Guard standby databases. When the databases are restored to this common SCN, the restored sharded database is guaranteed to be in a consistent state.

The global restore point creation must be mutually exclusive with sharded database chunk movement. When the job runs, it first checks whether any chunk moves are going on and waits for them to finish. Sometimes the chunk moves might take a long time. Also, new chunk moves can start before the previous ones have finished. In that case the global restore point creation job might wait for a very long time before there is an opportunity to generate a common SCN and create a global restore point from it. Therefore, it is not guaranteed that a global restore point will be created every hour.

To create the global restore point, run the GDSCTL command CREATE RESTOREPOINT as shown here.

GDSCTL> CREATE RESTOREPOINT

The global restore point creation job is configured on the shard catalog database. The name of the job is AUTOMATED_SDB_RESTOREPOINT_JOB. Full logging for this job is enabled.

You can optionally enter a name for the restore point by using the -name option as shown here.

GDSCTL> CREATE RESTOREPOINT -name CUSTOM_SDB_RESTOREPOINT_JOB

The job is initially disabled, so you must use GDSCTL ENABLE BACKUP to enable the job. The job runs every hour and the schedule is not configurable.

To list all global restore points, run LIST RESTOREPOINT.

GDSCTL> LIST RESTOREPOINT

This command lists all of the available global restore points in the sharded database. You can limit the list to restore points created during a specified time period (using the -start_time and -end_time options) or with SCNs in a specified SCN interval (using the -start_scn and -end_scn options).

The following command lists the available restore points in the sharded database with the SCN between 2600000 and 2700000.

GDSCTL> LIST RESTOREPOINT -start_scn 2600000 -end_scn 2700000

The command below lists the available restore points in the sharded database that were created in the time frame from 2020/07/27 00:00:00 to 2020/07/28 00:00:00.

GDSCTL> LIST RESTOREPOINT -start_time "2020-07-27 00:00:00" -end_time "2020-07-28 00:00:00"
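When the time window is computed by a script, the timestamps have to be written in the same form as in the example above. The following Python sketch builds the LIST RESTOREPOINT command text for a window that ends at a given time. The function name and the 24-hour default are assumptions for illustration; only the -start_time and -end_time options shown above are used.

```python
from datetime import datetime, timedelta

# Timestamp layout taken from the LIST RESTOREPOINT example above.
TIME_FORMAT = "%Y-%m-%d %H:%M:%S"


def list_restorepoint_command(end, hours=24):
    """Build a LIST RESTOREPOINT command covering the `hours` before `end`."""
    start = end - timedelta(hours=hours)
    return 'LIST RESTOREPOINT -start_time "{}" -end_time "{}"'.format(
        start.strftime(TIME_FORMAT), end.strftime(TIME_FORMAT)
    )


# Reproduces the window used in the example above.
command = list_restorepoint_command(datetime(2020, 7, 28, 0, 0, 0))
print(command)
```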

Restoring From Backup

The GDSCTL RESTORE BACKUP command lets you restore sharded database shards to a specific global restore point.

The command can also be used to restore only the shard database control files. It does not support restoring the shard catalog database; you must restore the shard catalog database directly using RMAN.

The typical procedure for restoring a sharded database is:

- List the available restore points.

- Select a restore point and validate the backups against it.

- Restore the databases to the selected restore point.

You should validate the backups for a shard against the selected restore point to verify that all the needed backups are available before you start to restore the shard to the restore point.

Note that you are not prohibited from restoring the shard catalog or a specific shard to an arbitrary point in time. However, doing so may put that target in an inconsistent state with the rest of the sharded database and you may need to take corrective action outside of the restore operation.

The database to be restored must be in NOMOUNT state. This command alters the database to MOUNT state after it has restored the control file.

The RESTORE BACKUP command requires the shard catalog database to be open.

If the shard catalog database itself needs to be restored, you must log on to the shard catalog database host and restore the database manually using RMAN. After the shard catalog database has been successfully restored and opened, you then use the RESTORE BACKUP command to restore the list of shards.

For data file restore, the shards must be in MOUNT state, but if the command is to restore the control files, the shard databases must be started in NOMOUNT state. Bringing the databases to the proper states is a manual step.

To restore the shard database control files, the database must be started in nomount mode. The control files will be restored from AUTOBACKUP. To restore the database data files, the database must be mounted. The shard catalog database must be open for this command to work.

The following example restores the control files of shard cdb2_pdb1 to restore point BACKUP_BEFORE_DB_MAINTENANCE.

GDSCTL> RESTORE BACKUP -shard cdb2_pdb1 -restorepoint BACKUP_BEFORE_DB_MAINTENANCE -controlfile

The restore operation can be done for the shards in parallel. When the restore for the shards happens in parallel, you should not close GDSCTL until the command execution is completed, because interrupting the restore operation can result in database corruption or leave the sharded database in an inconsistent state.

Backup validation only logically restores the database, while RESTORE BACKUP does both the physical database restore and the database recovery. Therefore, after RESTORE BACKUP is done, the restored databases usually need to be opened with the resetlogs option.

After the database restore is completed, you should open the database and verify that the database has been restored as intended and it is in a good state.
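The state requirements described in this topic can be checked before a restore is attempted. The following Python sketch encodes them: the shard catalog must be open, a control file restore needs the shard in NOMOUNT state, and a data file restore needs it in MOUNT state. The function name is hypothetical, and the caller is assumed to supply the current database states; the sketch only builds the command text and does not run it.

```python
def restore_command(shard, restorepoint, shard_state, catalog_open, controlfile=False):
    """Check the documented preconditions and return the RESTORE BACKUP command text."""
    if not catalog_open:
        raise ValueError("the shard catalog database must be open")
    # Control file restore needs NOMOUNT; data file restore needs MOUNT.
    required = "NOMOUNT" if controlfile else "MOUNT"
    if shard_state.upper() != required:
        raise ValueError(
            "shard {} is in {} state but must be in {} state".format(
                shard, shard_state.upper(), required
            )
        )
    command = "RESTORE BACKUP -shard {} -restorepoint {}".format(shard, restorepoint)
    if controlfile:
        command += " -controlfile"
    return command


# Same shard and restore point as in the example above.
print(restore_command("cdb2_pdb1", "BACKUP_BEFORE_DB_MAINTENANCE",
                      shard_state="nomount", catalog_open=True, controlfile=True))
```

Bringing the shard into the required state remains a manual step; the check only prevents the command from being issued against a database in the wrong state.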


Monitoring a Sharded Database

Sharded databases can be monitored using Enterprise Manager Cloud Control or GDSCTL.

See the following topics to use Enterprise Manager Cloud Control or GDSCTL to monitor sharded databases.

- Monitoring a Sharded Database with GDSCTL

There are numerous GDSCTL CONFIG commands that you can use to obtain the health status of individual shards, shardgroups, shardspaces, and shard directors.

- Monitoring a Sharded Database with Enterprise Manager Cloud Control

Oracle Enterprise Manager Cloud Control lets you discover, monitor, and manage the components of a sharded database.

- Querying System Objects Across Shards

Use the SHARDS() clause to query Oracle-supplied tables to gather performance, diagnostic, and audit data from V$ views and DBA_* views.


Monitoring a Sharded Database with GDSCTL

There are numerous GDSCTL CONFIG commands that you can use to obtain the health status of individual shards, shardgroups, shardspaces, and shard directors.

Monitoring a shard is just like monitoring a normal database, and standard Oracle best practices should be used to monitor the individual health of a single shard. However, it is also important to monitor the overall health of the entire sharded environment. The GDSCTL commands can also be scripted and, through the use of a scheduler, run at regular intervals to help ensure that everything is running smoothly.
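As a rough sketch of such scripting, the following Python code runs a list of GDSCTL CONFIG commands and collects their output so that a scheduler (for example, cron) can call it at regular intervals. It assumes that the gdsctl executable is on the PATH, that commands can be passed to it as command-line arguments, and that a nonzero exit code signals a failure; verify all three in your environment. The function names are hypothetical, and the usage example substitutes a fake runner so that nothing is actually executed.

```python
import subprocess

# GDSCTL CONFIG commands to run; adjust the list to your deployment.
CHECKS = ["config shard", "config shardgroup", "config shardspace", "config gsm"]


def run_gdsctl(command):
    """Run one GDSCTL command (assumes gdsctl is on the PATH)."""
    result = subprocess.run(
        ["gdsctl"] + command.split(), capture_output=True, text=True
    )
    return result.returncode, result.stdout


def collect_status(runner=run_gdsctl, checks=CHECKS):
    """Run every check and return a report keyed by command."""
    report = {}
    for command in checks:
        code, output = runner(command)
        # Treating exit code 0 as success is an assumption of this sketch.
        report[command] = {"ok": code == 0, "output": output}
    return report


def fake_runner(command):
    """Stand-in runner for illustration; it does not call gdsctl."""
    return 0, "output of " + command


report = collect_status(runner=fake_runner)
for command, result in report.items():
    print(command, "OK" if result["ok"] else "FAILED")
```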

See Also:

Oracle Database Global Data Services Concepts and Administration Guide for information about using the GDSCTL CONFIG commands


Monitoring a Sharded Database with Enterprise Manager Cloud Control

Oracle Enterprise Manager Cloud Control lets you discover, monitor, and manage the components of a sharded database.

Sharded database targets are found in the All Targets page.

The target home page for a sharded database shows you a summary of the sharded database components and their statuses.

To monitor sharded database components you must first discover them. See Discovering Sharded Database Components for more information.

Summary

The Summary pane, in the top left of the page, shows the following information:

- Sharded database name

- Sharded database domain name

- Shard catalog name. You can click the name to view more information about the shard catalog.

- Shard catalog database version

- Sharding method used to shard the database

- Replication technology used for high availability

- Number and status of the shard directors

- Master shard director name. You can click the name to view more information about the master shard director.

Shard Load Map

The Shard Load Map, in the upper right of the page, shows a pictorial graph illustrating how transactions are distributed among the shards.

You can select different View Levels above the graph.

- Database

The database view aggregates database instances in Oracle RAC cluster databases into a single cell labeled with the Oracle RAC cluster database target name. This enables you to easily compare the total database load in Oracle RAC environments.

- Instance

The instance view displays all database instances separately, but Oracle RAC instances are grouped together as sub-cells of the Oracle RAC database target. This view is essentially a two-level tree map, where the database level is the primary division, and the instance within the database is the secondary division. This allows load comparison of instances within Oracle RAC databases; for instance, to easily spot load imbalances across instances.

- Pluggable Database

Notice that the cells of the graph are not identical in size. Each cell corresponds to a shard target, either an instance or a cluster database. The cell size (its area) is proportional to the target database's load measured in average active sessions, so that targets with a higher load have larger cell sizes. Cells are ordered by size from left to right and top to bottom. Therefore, the target with the highest load always appears as the upper leftmost cell in the graph.
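The sizing and ordering rule can be illustrated with a few lines of Python. This sketch is not how Cloud Control is implemented; it only shows the arithmetic of the rule described above, with each target's share of the map proportional to its load and the targets ordered from highest to lowest load. The target names and load values are hypothetical.

```python
def layout_cells(loads):
    """Return (target, share of total area) pairs, highest load first."""
    total = sum(loads.values())
    if total == 0:
        return [(name, 0.0) for name in loads]
    # Largest load first, matching the upper-left-first ordering of the map.
    ordered = sorted(loads.items(), key=lambda item: item[1], reverse=True)
    return [(name, load / total) for name, load in ordered]


# Hypothetical average active sessions per shard target.
cells = layout_cells({"sh1": 2.0, "sh2": 6.0, "sh3": 2.0})
for name, share in cells:
    print(name, round(share * 100), "% of the map area")
```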

You can hover your mouse pointer over a particular cell of the graph to view the total active load (I/O to CPU ratio), CPU, I/O, and wait times. Segments of the graph are colored to indicate the dominant load:

- Green indicates that CPU time dominates the load

- Blue indicates that I/O dominates the load

- Yellow indicates that WAIT dominates the load

Members

The Members pane, in the lower left of the page, shows some relevant information about each of the components.

The pane is divided into tabs for each component: Shardspaces, Shardgroups, Shard Directors, and Shards. Click on a tab to view the information about each type of component.

- Shardspaces

The Shardspaces tab displays the shardspace names, status, number of chunks, and Data Guard protection mode. You can click the shardspace names to reveal more details about the selected shardspace.

- Shardgroups

The Shardgroups tab displays the shardgroup names, status, the shardspace to which it belongs, the number of chunks, Data Guard role, and the region to which it belongs. You can click the shardgroup and shardspace names to reveal more details about the selected component.

- Shard Directors

The Shard Directors tab displays the shard director names, status, region, host, and Oracle home. You can click the shard director names to reveal more details about the selected shard director.

- Shards

The Shards tab displays the shard names, deploy status, status, the shardspaces and shardgroups to which they belong, Data Guard roles, and the regions to which they belong. In the Names column, you can expand a Primary shard to display the information about its corresponding Standby shard. You can hover the mouse over the Deployed column icon to display the deployment status details. You can click on the shard, shardspace, and shardgroup names to reveal more details about the selected component.

Services

The Services pane, in the lower right of the page, shows the names, status, and Data Guard role of the sharded database services. Above the list is shown the total number of services and an icon showing how many services are in a particular status. You can hover your mouse pointer over the icon to read a description of the status icon.

Incidents

The Incidents pane displays messages and warnings about the various components in the sharded database environment. More information about how to use this pane is in the Cloud Control online help.

Sharded Database Menu

The Sharded Database menu, located in the top left corner, gives you access to administer the sharded database components.

Target Navigation

The Target Navigation pane gives you easy access to more details about any of the components in the sharded database.

Clicking the navigation tree icon on the upper left corner of the page opens the Target Navigation pane. This pane shows all of the discovered components in the sharded database in tree form.

Expanding a shardspace reveals the shardgroups in it. Expanding a shardgroup reveals the shards in that shardgroup.

Any of the component names can be clicked to view more details about them.

- Discovering Sharded Database Components

In Enterprise Manager Cloud Control, you can discover the shard catalog and shard databases, then add the shard directors, sharded databases, shardspaces, and shardgroups using guided discovery.


Discovering Sharded Database Components

In Enterprise Manager Cloud Control, you can discover the shard catalog and shard databases, then add the shard directors, sharded databases, shardspaces, and shardgroups using guided discovery.

As a prerequisite, you must use Cloud Control to discover the shard director hosts and the shard catalog database. Because the catalog database and each of the shards is a database itself, you can use standard database discovery procedures.

Monitoring the shards is only possible when the individual shards are discovered using database discovery. Discovering the shards is optional when discovering a sharded database, because you can have a sharded database configuration without the shards.

When the target discovery procedure is finished, sharded database targets are added in Cloud Control. You can open the sharded database in Cloud Control to monitor and manage the components.

Querying System Objects Across Shards

Use the SHARDS() clause to query Oracle-supplied tables to gather performance, diagnostic, and audit data from V$ views and DBA_* views.

The shard catalog database can be used as the entry point for centralized diagnostic operations using the SQL SHARDS() clause. The SHARDS() clause allows you to query the same Oracle-supplied objects, such as V$, DBA/USER/ALL views and dictionary objects and tables, on all of the shards and return the aggregated results.

As shown in the examples below, an object in the FROM part of the SELECT statement is wrapped in the SHARDS() clause to specify that this is not a query to a local object, but to objects on all shards in the sharded database configuration. A virtual column called SHARD_ID is automatically added to a SHARDS()-wrapped object during execution of a multi-shard query to indicate the source of every row in the result. The same column can be used in a predicate for pruning the query.

A query with the SHARDS() clause can only be run on the shard catalog database.

Examples

The following statement queries performance views.

SQL> SELECT shard_id, callspersec FROM SHARDS(v$servicemetric)

WHERE service_name LIKE 'oltp%' AND group_id = 10;

The following statement gathers statistics.

SQL> SELECT table_name, partition_name, blocks, num_rows

FROM SHARDS(dba_tab_partitions) p

WHERE p.table_owner= :1;

The following example statement shows how to find the SHARD_ID value for each shard.

SQL> select ORA_SHARD_ID, INSTANCE_NAME from SHARDS(sys.v_$instance);

ORA_SHARD_ID INSTANCE_NAME

------------ ----------------

1 sh1

11 sh2

21 sh3

31 sh4

The following example statement shows how to use the SHARD_ID to prune a query.

SQL> select ORA_SHARD_ID, INSTANCE_NAME

from SHARDS(sys.v_$instance)

where ORA_SHARD_ID=21;

ORA_SHARD_ID INSTANCE_NAME

------------ ----------------

21 sh3

See Also:

Oracle Database SQL Language Reference for more information about the SHARDS() clause.


Propagation of Parameter Settings Across Shards

When you configure system parameter settings at the shard catalog, they are automatically propagated to all shards of the sharded database.

Oracle Sharding provides centralized management by allowing you to set parameters on the shard catalog. Then the settings are automatically propagated to all shards of the sharded database.

System parameters are propagated only if the change is made on the shard catalog with shard DDL enabled (ENABLE SHARD DDL) and the ALTER statement includes SHARD=ALL.

SQL> alter session enable shard ddl;

SQL> alter system set enable_ddl_logging=true shard=all;

Note:

Propagation of the enable_goldengate_replication parameter setting is not supported.
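If parameter changes are generated by a script, the two statements can be produced together so that shard DDL is always enabled first. The following Python sketch builds the statement pair and rejects the one parameter that the note above lists as unsupported. The function name is hypothetical, and the sketch only produces the statement text for you to run on the shard catalog.

```python
# Parameter that the note above lists as not supported for propagation.
UNSUPPORTED = {"enable_goldengate_replication"}


def propagate_parameter_statements(name, value):
    """Return the statements that set a parameter on all shards from the catalog."""
    if name.lower() in UNSUPPORTED:
        raise ValueError("propagation of {} is not supported".format(name))
    return [
        "ALTER SESSION ENABLE SHARD DDL;",
        "ALTER SYSTEM SET {}={} SHARD=ALL;".format(name, value),
    ]


# Same parameter as in the example above.
statements = propagate_parameter_statements("enable_ddl_logging", "true")
for statement in statements:
    print(statement)
```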


Modifying a Sharded Database Schema

When making changes to duplicated tables or sharded tables in a sharded database, these changes should be done from the shard catalog database.

Before executing any DDL operations on a sharded database, enable sharded DDL with

ALTER SESSION ENABLE SHARD DDL;

This statement ensures that the DDL changes will be propagated to each shard in the sharded database.

The DDL changes that are propagated are commands that are defined as “schema related,” which include operations such as ALTER TABLE. There are other operations that are propagated to each shard, such as the CREATE, ALTER, DROP user commands for simplified user management, and TABLESPACE operations to simplify the creation of tablespaces on multiple shards.

GRANT and REVOKE operations can be done from the shard catalog and are propagated to each shard, provided you have enabled shard DDL for the session. If more granular control is needed, you can issue the command directly on each shard.

Operations such as DBMS package calls or similar operations are not propagated. For example, operations gathering statistics on the shard catalog are not propagated to each shard.

If you perform an operation that requires a lock on a table, such as adding a not null column, it is important to remember that each shard needs to obtain the lock on the table in order to perform the DDL operation. Oracle’s best practices for applying DDL in a single instance apply to sharded environments.

Multi-shard queries, which are executed on the shard catalog, issue remote queries across database connections on each shard. In this case it is important to ensure that the user has the appropriate privileges on each of the shards, whether or not the query will return data from that shard.

See Also:

Oracle Database SQL Language Reference for information about operations used with duplicated tables and sharded tables


Managing Sharded Database Software Versions

This section describes the version management of software components in the sharded database configuration. It contains the following topics:

- Patching and Upgrading a Sharded Database

Applying an Oracle patch to a sharded database environment can be done on a single shard or all shards; however, the method you use depends on the replication option used for the environment and the type of patch being applied.

- Upgrading Sharded Database Components

The order in which sharded database components are upgraded is important for limiting downtime and avoiding errors as components are brought down and back online.

- Post-Upgrade Steps for Oracle Sharding 21c

If you have a fully operational Oracle Sharding environment in a release earlier than 21c, no wallets exist, and no deployment will be done by Oracle Sharding after an upgrade to 21c to create them. You must perform manual steps to create the wallets.

- Compatibility and Migration from Oracle Database 18c

When upgrading from an Oracle Database 18c installation which contains a single PDB shard for a given CDB, you must update the shard catalog metadata for any PDB.

- Downgrading a Sharded Database

Oracle Sharding does not support downgrading.


Patching and Upgrading a Sharded Database

Applying an Oracle patch to a sharded database environment can be done on a single shard or all shards; however, the method you use depends on the replication option used for the environment and the type of patch being applied.

Oracle Sharding uses consolidated patching to update a shard director (GSM) ORACLE_HOME, so you must apply the Oracle Database release updates to the ORACLE_HOME to get security and Global Data Services fixes.

Patching a Sharded Database

Most patches can be applied to a single shard at a time; however, some patches should be applied across all shards. Use Oracle’s best practices for applying patches to single shards just as you would a non-sharded database, keeping in mind the replication method that is being used with the sharded database. Oracle opatchauto can be used to apply patches to multiple shards at a time, and patching can be done in a rolling manner. Data Guard configurations are patched one after another, and in some cases (depending on the patch) you can use Standby First patching.

When using Oracle GoldenGate be sure to apply patches in parallel across the entire shardspace. If a patch addresses an issue with multi-shard queries, replication, or the sharding infrastructure, it should be applied to all of the shards in the sharded database.

Note:

Oracle GoldenGate replication support for Oracle Sharding High Availability is deprecated in Oracle Database 21c.

Upgrading a Sharded Database

Upgrading the Oracle Sharding environment is not much different from upgrading other Oracle Database and global service manager environments; however, the components must be upgraded in a particular sequence such that the shard catalog is upgraded first, followed by the shard directors, and finally the shards.
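The required sequence can be captured in a small planning helper. The following Python sketch sorts a list of components into the order given above: shard catalog first, then shard directors, then shards. The component names are hypothetical, and the helper only orders the list; it does not perform any upgrade.

```python
# Upgrade sequence from the paragraph above: catalog, then directors, then shards.
UPGRADE_ORDER = {"shard catalog": 0, "shard director": 1, "shard": 2}


def upgrade_plan(components):
    """Sort (name, kind) pairs into the required upgrade sequence."""
    # sorted() is stable, so components of the same kind keep their input order.
    return sorted(components, key=lambda component: UPGRADE_ORDER[component[1]])


# Hypothetical component names, for illustration only.
components = [
    ("sh1", "shard"),
    ("gsm1", "shard director"),
    ("sh2", "shard"),
    ("cat1", "shard catalog"),
]
plan = upgrade_plan(components)
for step, (name, kind) in enumerate(plan, start=1):
    print(step, kind, name)
```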

See Also:

Oracle Database Global Data Services Concepts and Administration Guide for information about upgrading the shard directors.

Oracle Data Guard Concepts and Administration for information about patching and upgrading in an Oracle Data Guard configuration.


Upgrading Sharded Database Components

The order in which sharded database components are upgraded is important for limiting downtime and avoiding errors as components are brought down and back online.

Before upgrading any sharded database components, observe the following requirements:

- Complete any pending MOVE CHUNK operations that are in progress.

- Do not start any new MOVE CHUNK operations.

- Do not add any new shards during the upgrade process.

See Also:

Oracle Data Guard Concepts and Administration for information about using DBMS_ROLLING to perform a rolling upgrade.

Oracle Data Guard Concepts and Administration for information about patching and upgrading databases in an Oracle Data Guard configuration.


Post-Upgrade Steps for Oracle Sharding 21c

If you have a fully operational Oracle Sharding environment in a release earlier than 21c, no wallets exist, and no deployment will be done by Oracle Sharding after an upgrade to 21c to create them. You must perform manual steps to create the wallets.

Note:

The steps must be followed in EXACTLY this order.

Compatibility and Migration from Oracle Database 18c

When upgrading from an Oracle Database 18c installation which contains a single PDB shard for a given CDB, you must update the shard catalog metadata for any PDB.

Specifically, in 18c, the name of a PDB shard is the DB_UNIQUE_NAME of its CDB; however, in later Oracle Database releases, the shard names are db_unique_name_of_CDB_pdb_name.
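The naming change can be expressed in one line of code. The following Python sketch derives the later-release shard name from the CDB's DB_UNIQUE_NAME and the PDB name, following the pattern described above. The function name and the sample values are hypothetical, and the sketch leaves the letter case of its inputs unchanged.

```python
def shard_name_after_18c(cdb_unique_name, pdb_name):
    """Join the CDB's DB_UNIQUE_NAME and the PDB name with an underscore."""
    return "{}_{}".format(cdb_unique_name, pdb_name)


# In 18c this shard would have been named after the CDB alone ("cdb2").
new_name = shard_name_after_18c("cdb2", "pdb1")
print(new_name)
```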

To update the catalog metadata to reflect this new naming methodology, and to also support the new GSMROOTUSER account as described in About the GSMROOTUSER Account, perform the following steps during the upgrade process as described in Upgrading Sharded Database Components.


Downgrading a Sharded Database

Oracle Sharding does not support downgrading.

Sharded database catalogs and shards cannot be downgraded.


Shard Management

You can manage shards in your Oracle Sharding deployment with Oracle Enterprise Manager Cloud Control and GDSCTL.

The following topics describe shard management concepts and tasks:

- About Adding Shards

New shards can be added to an existing sharded database environment to scale out and to improve fault tolerance.

- Resharding and Hot Spot Elimination

The process of redistributing data between shards, triggered by a change in the number of shards, is called resharding. Automatic resharding is a feature of the system-managed sharding method that provides elastic scalability of a sharded database.

- Removing a Shard From the Pool

It may become necessary to remove a shard from the sharded database environment, either temporarily or permanently, without losing any data that resides on that shard.

- Adding Standby Shards

You can add Data Guard standby shards to an Oracle Sharding environment; however there are some limitations.

- Managing Shards with Oracle Enterprise Manager Cloud Control

You can manage database shards using Oracle Enterprise Manager Cloud Control.

- Managing Shards with GDSCTL

You can manage shards in your Oracle Sharding deployment using the GDSCTL command-line utility.

- Migrating a Non-PDB Shard to a PDB

Do the following steps if you want to migrate shards from a traditional single-instance database to Oracle multitenant architecture. Also, you must migrate to a multitenant architecture before upgrading to Oracle Database 21c or later releases.

Parent topic: Sharded Database Administration

About Adding Shards

New shards can be added to an existing sharded database environment to scale out and to improve fault tolerance.

For fault tolerance, it is better to have many smaller shards than a few very large ones. As an application matures and the amount of data increases, you can add an entire shard or multiple shards to the sharded database to increase capacity.

When you add a shard to a sharded database, if the environment is sharded by consistent hash, then chunks from existing shards are automatically moved to the new shard to rebalance the sharded environment.
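The rebalancing idea can be illustrated with a small conceptual model. This is a sketch only, not Oracle's actual algorithm: the function name `plan_rebalance`, the shard names, and the policy of balancing purely by chunk count (ignoring chunk size and workload) are all assumptions made for illustration.

```python
from collections import Counter


def plan_rebalance(placement, new_shard):
    """Plan chunk moves so that a newly added shard gets an even share.

    placement maps chunk id -> shard name. Returns a list of
    (chunk_id, source_shard, target_shard) tuples and leaves the input
    unchanged. Hypothetical model: balances by chunk count only.
    """
    current = dict(placement)
    counts = Counter(current.values())
    counts[new_shard] = 0
    share = len(current) // len(counts)  # chunks the new shard should end up with

    moves = []
    while counts[new_shard] < share:
        # Donate from the fullest existing shard; ties break by shard name.
        donor = max((s for s in counts if s != new_shard),
                    key=lambda s: (counts[s], s))
        # Pick that donor's highest-numbered chunk (an arbitrary choice).
        chunk = max(c for c, s in current.items() if s == donor)
        current[chunk] = new_shard
        counts[donor] -= 1
        counts[new_shard] += 1
        moves.append((chunk, donor, new_shard))
    return moves


# Three shards with four chunks each, then a fourth shard is added.
placement = {c: f"sh{(c - 1) // 4 + 1}" for c in range(1, 13)}
moves = plan_rebalance(placement, "sh4")
print(moves)  # [(12, 'sh3', 'sh4'), (8, 'sh2', 'sh4'), (4, 'sh1', 'sh4')]
```

In this model each existing shard gives up one chunk, so all four shards end with three chunks. With user-defined sharding, an administrator would make the equivalent decisions manually.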

When using user-defined sharding, populating a new shard with data may require manually moving chunks from existing shards to the new shard using the GDSCTL split chunk and move chunk commands.

Oracle Enterprise Manager Cloud Control can be used to help identify chunks that would be good candidates to move, or split and move to the new shard.

When you add a shard to the environment, verify that the standby server is ready. After the new shard is in place, take backups of any shards that have been involved in a move chunk operation.

Parent topic: Shard Management

Resharding and Hot Spot Elimination

The process of redistributing data between shards, triggered by a change in the number of shards, is called resharding. Automatic resharding is a feature of the system-managed sharding method that provides elastic scalability of a sharded database.

Sometimes data in a sharded database needs to be migrated from one shard to another. Data migration across shards is required in the following cases:

- When one or multiple shards are added to or removed from a sharded database

- When there is skew in the data or workload distribution across shards

The unit of data migration between shards is the chunk. Migrating data in chunks guarantees that related data from different sharded tables is moved together.

When a shard is added to or removed from a sharded database, multiple chunks are migrated to maintain a balanced distribution of chunks and workload across shards.

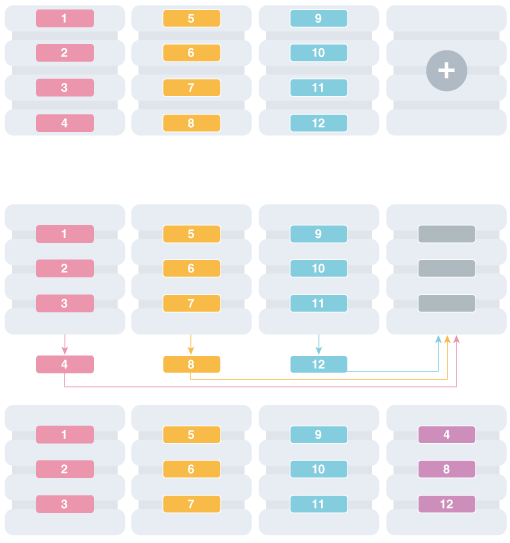

Depending on the sharding method, resharding happens automatically (system-managed) or is directed by the user (composite). The following figure shows the stages of automatic resharding when a shard is added to a sharded database with three shards.

A particular chunk can also be moved from one shard to another, when data or workload skew occurs, without any change in the number of shards. In this case, chunk migration can be initiated by the database administrator to eliminate the hot spot.

RMAN Incremental Backup, Transportable Tablespace, and Oracle Notification Service technologies are used to minimize the impact of chunk migration on application availability. A chunk is kept online during chunk migration. There is a short period of time (a few seconds) when data stored in the chunk is available for read-only access.

FAN-enabled clients receive a notification when a chunk is about to become read-only in the source shard, and again when the chunk is fully available in the destination shard on completion of chunk migration. When clients receive the chunk read-only event, they can either repeat connection attempts until the chunk migration is completed, or access the read-only chunk in the source shard. In the latter case, an attempt to write to the chunk will result in a run-time error.
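The retry option can be sketched with a generic model. Everything here is hypothetical: `ChunkReadOnlyError` stands in for whatever run-time error a driver raises on a write to a read-only chunk, and `write_with_retry` is an illustrative helper, not part of any Oracle client library. In practice, a FAN-aware connection pool would typically handle these events for the application.

```python
import time


class ChunkReadOnlyError(Exception):
    """Hypothetical error for a write that hits a read-only chunk."""


def write_with_retry(write, attempts=5, delay=0.5, sleep=time.sleep):
    """Call write() until it succeeds or the attempts run out.

    Retries only on ChunkReadOnlyError, waiting `delay` seconds between
    tries, and re-raises the error after the final failed attempt.
    """
    for attempt in range(1, attempts + 1):
        try:
            return write()
        except ChunkReadOnlyError:
            if attempt == attempts:
                raise  # migration outlasted the retry budget
            sleep(delay)


# Simulated write that fails twice while the chunk is read-only.
calls = {"n": 0}


def simulated_write():
    calls["n"] += 1
    if calls["n"] <= 2:
        raise ChunkReadOnlyError("chunk is read-only during migration")
    return "committed"


# Pass a no-op sleep so the demonstration does not actually wait.
result = write_with_retry(simulated_write, sleep=lambda seconds: None)
print(result, calls["n"])  # committed 3
```

Because the read-only window is described as lasting a few seconds, a small retry budget with a short delay is usually a reasonable starting point for this kind of loop.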

Note:

Running multi-shard queries while a sharded database is resharding can result in errors, so it is recommended that you do not deploy new shards during multi-shard workloads.

Parent topic: Shard Management

Removing a Shard From the Pool

It may become necessary to remove a shard from the sharded database environment, either temporarily or permanently, without losing any data that resides on that shard.

For example, removing a shard might become necessary if a sharded environment is scaled down after a busy holiday, or to replace a server or infrastructure within the data center. Prior to decommissioning the shard, you must move all of the chunks from the shard to other shards that will remain online. As you move them, try to maintain a balance of data and activity across all of the shards.

If the shard is only temporarily removed, keep track of the chunks moved to each shard so that they can be easily identified and moved back once the maintenance is complete.
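One way to keep track of the moves is to record each one as it is made and derive the reverse moves from that log. The following is a minimal sketch under stated assumptions: `drain_shard` and `reverse_moves` are hypothetical helper names, the shard names are invented, and the target for each chunk is chosen purely by chunk count.

```python
def drain_shard(placement, shard):
    """Move every chunk off `shard`, spreading them over the other shards.

    placement maps chunk id -> shard name. Returns (new_placement, moves),
    where moves is a list of (chunk_id, source_shard, target_shard).
    """
    remaining = sorted(set(placement.values()) - {shard})
    if not remaining:
        raise ValueError("no other shard is available to receive chunks")
    counts = {s: 0 for s in remaining}
    for s in placement.values():
        if s in counts:
            counts[s] += 1

    new_placement = dict(placement)
    moves = []
    for chunk in sorted(c for c, s in placement.items() if s == shard):
        # Send the chunk to the least-loaded remaining shard.
        target = min(remaining, key=lambda s: (counts[s], s))
        new_placement[chunk] = target
        counts[target] += 1
        moves.append((chunk, shard, target))
    return new_placement, moves


def reverse_moves(moves):
    """Return the moves that send each chunk back where it came from."""
    return [(chunk, target, source) for chunk, source, target in reversed(moves)]


placement = {1: "sh1", 2: "sh1", 3: "sh2", 4: "sh2", 5: "sh3", 6: "sh3"}
drained, log = drain_shard(placement, "sh3")
restore = reverse_moves(log)
print(log)      # [(5, 'sh3', 'sh1'), (6, 'sh3', 'sh2')]
print(restore)  # [(6, 'sh2', 'sh3'), (5, 'sh1', 'sh3')]
```

Persisting the log outside the session, for example in a change ticket, keeps the restore plan available after the maintenance window.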

See Also:

Oracle Database Global Data Services Concepts and Administration Guide for information about using the GDSCTL REMOVE SHARD command

Parent topic: Shard Management

Adding Standby Shards

You can add Data Guard standby shards to an Oracle Sharding environment; however, there are some limitations.

When using Data Guard as the replication method for a sharded database, Oracle Sharding supports only the addition of a primary or physical standby shard; other types of Data Guard standby databases are not supported when adding a new standby to the sharded database. However, a shard that is already part of the sharded database can be converted from a physical standby to a snapshot standby. When converting a physical standby to a snapshot standby, the following steps should be followed:

If the database is converted back to a physical standby, the global services can be enabled and started again, and the shard becomes an active member of the sharded database.

Parent topic: Shard Management

Managing Shards with Oracle Enterprise Manager Cloud Control

You can manage database shards using Oracle Enterprise Manager Cloud Control.

To manage shards using Cloud Control, they must first be discovered. Because each database shard is a database itself, you can use standard Cloud Control database discovery procedures.

The following topics describe shard management using Oracle Enterprise Manager Cloud Control:

- Validating a Shard

Validate a shard prior to adding it to your Oracle Sharding deployment.

- Adding Primary Shards

Use Oracle Enterprise Manager Cloud Control to add primary shards to your Oracle Sharding deployment.

- Adding Standby Shards

Use Oracle Enterprise Manager Cloud Control to add standby shards to your Oracle Sharding deployment.

- Deploying Shards

Use Oracle Enterprise Manager Cloud Control to deploy shards that have been added to your Oracle Sharding environment.

Parent topic: Shard Management

Validating a Shard

Validate a shard prior to adding it to your Oracle Sharding deployment.

You can use Oracle Enterprise Manager Cloud Control to validate shards before adding them to your Oracle Sharding deployment. You can also validate a shard after deployment to confirm that the settings are still valid later in the shard lifecycle. For example, after a software upgrade you can validate existing shards to confirm correctness of their parameters and configuration.

To validate shards with Cloud Control, they should be existing targets that are being monitored by Cloud Control.

- From a shardgroup management page, open the Shardgroup menu, located in the top left corner of the shardgroup target page, and choose Manage Shards.

- If prompted, enter the shard catalog credentials, select the shard director to manage under Shard Director Credentials, select the shard director host credentials, and log in.

- Select a shard from the list and click Validate.

- Click OK to confirm you want to validate the shard.

- Click the link in the Information box at the top of the page to view the provisioning status of the shard.

When the shard validation script finishes running, check the output for any reported errors.

Adding Primary Shards

Use Oracle Enterprise Manager Cloud Control to add primary shards to your Oracle Sharding deployment.

Primary shards should be existing targets that are being monitored by Cloud Control.

It is highly recommended that you validate a shard before adding it to your Oracle Sharding environment. You can either use Cloud Control to validate the shard (see Validating a Shard under Managing Shards with Oracle Enterprise Manager Cloud Control), or run the DBMS_GSM_FIX.validateShard procedure against the shard using SQL*Plus (see Validating a Shard under Managing Shards with GDSCTL).

If you did not select Deploy All Shards in the sharded database in the procedure above, deploy the shard in your Oracle Sharding deployment using the Deploying Shards task.

Adding Standby Shards

Use Oracle Enterprise Manager Cloud Control to add standby shards to your Oracle Sharding deployment.

Standby shards should be existing targets that are being monitored by Cloud Control.

It is highly recommended that you validate a shard before adding it to your Oracle Sharding environment. You can either use Cloud Control to validate the shard (see Validating a Shard under Managing Shards with Oracle Enterprise Manager Cloud Control), or run the DBMS_GSM_FIX.validateShard procedure against the shard using SQL*Plus (see Validating a Shard under Managing Shards with GDSCTL).

If you did not select Deploy All Shards in the sharded database in the procedure above, deploy the shard in your Oracle Sharding deployment using the Deploying Shards task.

Managing Shards with GDSCTL

You can manage shards in your Oracle Sharding deployment using the GDSCTL command-line utility.

The following topics describe shard management using GDSCTL:

- Validating a Shard

Before adding a newly created shard to a sharding configuration, you must validate that the shard has been configured correctly for the sharding environment.

- Adding Shards to a System-Managed Sharded Database

Adding shards to a system-managed sharded database elastically scales the sharded database. In a system-managed sharded database chunks are automatically rebalanced after the new shards are added.

- Replacing a Shard

If a shard fails, or if you just want to move a shard to a new host for other reasons, you can replace it using the ADD SHARD -REPLACE command in GDSCTL.

Parent topic: Shard Management

Validating a Shard

Before adding a newly created shard to a sharding configuration, you must validate that the shard has been configured correctly for the sharding environment.

Before you run ADD SHARD, run the validateShard procedure against the database that will be added as a shard. The validateShard procedure verifies that the target database has the initialization parameters and characteristics needed to act successfully as a shard.